
to_onnx and a model with a loop (scan)

Control flow cannot be exported without a change. The model's code can be changed or patched to introduce the function torch.ops.higher_order.scan().
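The semantics of a scan can be sketched in plain Python. This is a simplified model, not PyTorch's implementation: `reference_scan` is a hypothetical helper with a single carry and a single scanned sequence. As used in this example, torch.ops.higher_order.scan instead takes lists of tensors for the carry and the scanned inputs, iterates over their first dimension and stacks the per-step outputs into a tensor.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

C = TypeVar("C")  # carry type
X = TypeVar("X")  # type of one scanned element
Y = TypeVar("Y")  # type of one per-step output


def reference_scan(
    combine_fn: Callable[[C, X], Tuple[C, Y]],
    init: C,
    xs: Sequence[X],
) -> Tuple[C, List[Y]]:
    """Simplified scan: thread a carry through xs and collect one output per step."""
    carry = init
    outputs: List[Y] = []
    for x in xs:  # iterate over the leading dimension of xs
        carry, y = combine_fn(carry, x)
        outputs.append(y)
    # final carry plus the per-step outputs "stacked" into a list
    return carry, outputs


# Running sum: the carry is the partial sum, and each step also emits it.
final, partial_sums = reference_scan(lambda c, x: (c + x, c + x), 0, [1, 2, 3])
print(final, partial_sums)  # 6 [1, 3, 6]
```

The combine function returns the new carry first and the per-step output second, the same order the `dist` function uses later in this example.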

Pairwise Distance

We apply a loop to compute pairwise distances (torch.nn.PairwiseDistance).
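For x of shape (n, d) and y of shape (m, d), the loop fills an n × m matrix D (here n = 3, m = 5) with the Euclidean distances that scipy.spatial.distance.cdist also computes. Iteration i produces the whole row i at once by broadcasting x[i : i + 1] against every row of y:

```latex
D_{ij} = \lVert y_j - x_i \rVert_2 = \sqrt{\sum_{k=1}^{d} \left(y_{jk} - x_{ik}\right)^2},
\qquad 1 \le i \le n,\ 1 \le j \le m
```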

```python
import scipy.spatial.distance as spd
import torch
from onnx_array_api.plotting.graphviz_helper import plot_dot
from experimental_experiment.helpers import pretty_onnx
from experimental_experiment.torch_interpreter import to_onnx, ExportOptions


class ModuleWithControlFlowLoop(torch.nn.Module):
    def forward(self, x, y):
        # one row of distances per row of x
        dist = torch.empty((x.shape[0], y.shape[0]), dtype=x.dtype)
        # plain Python loop over the rows of x
        for i in range(x.shape[0]):
            sub = y - x[i : i + 1]  # broadcast row i of x against every row of y
            d = torch.sqrt((sub * sub).sum(axis=1))
            dist[i, :] = d
        return dist


model = ModuleWithControlFlowLoop()
x = torch.randn(3, 4)
y = torch.randn(5, 4)

# reference result computed by scipy
pwd = spd.cdist(x.numpy(), y.numpy())
expected = torch.from_numpy(pwd)
print(f"shape={pwd.shape}, discrepancies={torch.abs(expected - model(x,y)).max()}")
```

shape=(3, 5), discrepancies=1.6672823122121372e-07

torch.export.export() works because it unrolls the loop. This only works as long as the input sizes never change.

```python
ep = torch.export.export(model, (x, y))
print(ep.graph)
```

graph():

%x : [num_users=3] = placeholder[target=x]

%y : [num_users=3] = placeholder[target=y]

%empty : [num_users=4] = call_function[target=torch.ops.aten.empty.memory_format](args = ([3, 5],), kwargs = {dtype: torch.float32, device: cpu, pin_memory: False})

%slice_1 : [num_users=1] = call_function[target=torch.ops.aten.slice.Tensor](args = (%x, 0, 0, 1), kwargs = {})

%sub : [num_users=1] = call_function[target=torch.ops.aten.sub.Tensor](args = (%y, %slice_1), kwargs = {})

%mul : [num_users=1] = call_function[target=torch.ops.aten.mul.Tensor](args = (%sub, %sub), kwargs = {})

%sum_1 : [num_users=1] = call_function[target=torch.ops.aten.sum.dim_IntList](args = (%mul, [1]), kwargs = {})

%sqrt : [num_users=1] = call_function[target=torch.ops.aten.sqrt.default](args = (%sum_1,), kwargs = {})

%select : [num_users=1] = call_function[target=torch.ops.aten.select.int](args = (%empty, 0, 0), kwargs = {})

%slice_2 : [num_users=1] = call_function[target=torch.ops.aten.slice.Tensor](args = (%select, 0, 0, 9223372036854775807), kwargs = {})

%copy_ : [num_users=0] = call_function[target=torch.ops.aten.copy_.default](args = (%slice_2, %sqrt), kwargs = {})

%slice_3 : [num_users=1] = call_function[target=torch.ops.aten.slice.Tensor](args = (%x, 0, 1, 2), kwargs = {})

%sub_1 : [num_users=1] = call_function[target=torch.ops.aten.sub.Tensor](args = (%y, %slice_3), kwargs = {})

%mul_1 : [num_users=1] = call_function[target=torch.ops.aten.mul.Tensor](args = (%sub_1, %sub_1), kwargs = {})

%sum_2 : [num_users=1] = call_function[target=torch.ops.aten.sum.dim_IntList](args = (%mul_1, [1]), kwargs = {})

%sqrt_1 : [num_users=1] = call_function[target=torch.ops.aten.sqrt.default](args = (%sum_2,), kwargs = {})

%select_1 : [num_users=1] = call_function[target=torch.ops.aten.select.int](args = (%empty, 0, 1), kwargs = {})

%slice_4 : [num_users=1] = call_function[target=torch.ops.aten.slice.Tensor](args = (%select_1, 0, 0, 9223372036854775807), kwargs = {})

%copy__1 : [num_users=0] = call_function[target=torch.ops.aten.copy_.default](args = (%slice_4, %sqrt_1), kwargs = {})

%slice_5 : [num_users=1] = call_function[target=torch.ops.aten.slice.Tensor](args = (%x, 0, 2, 3), kwargs = {})

%sub_2 : [num_users=1] = call_function[target=torch.ops.aten.sub.Tensor](args = (%y, %slice_5), kwargs = {})

%mul_2 : [num_users=1] = call_function[target=torch.ops.aten.mul.Tensor](args = (%sub_2, %sub_2), kwargs = {})

%sum_3 : [num_users=1] = call_function[target=torch.ops.aten.sum.dim_IntList](args = (%mul_2, [1]), kwargs = {})

%sqrt_2 : [num_users=1] = call_function[target=torch.ops.aten.sqrt.default](args = (%sum_3,), kwargs = {})

%select_2 : [num_users=1] = call_function[target=torch.ops.aten.select.int](args = (%empty, 0, 2), kwargs = {})

%slice_6 : [num_users=1] = call_function[target=torch.ops.aten.slice.Tensor](args = (%select_2, 0, 0, 9223372036854775807), kwargs = {})

%copy__2 : [num_users=0] = call_function[target=torch.ops.aten.copy_.default](args = (%slice_6, %sqrt_2), kwargs = {})

return (empty,)
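The graph above repeats the same slice, sub, mul, sum, sqrt, select, slice, copy_ pattern three times, once per row of x: while tracing, the loop bound is a concrete integer, so the loop body simply runs three times. The toy recorder below mimics that effect. `toy_trace` and `BODY_OPS` are hypothetical names and have nothing to do with torch.export internals; the sketch only shows that the number of recorded nodes depends on the concrete row count, which is why such a graph is tied to one input size.

```python
from typing import List, Tuple

# Ops emitted per iteration, mirroring the unrolled graph printed above.
BODY_OPS = ("slice", "sub", "mul", "sum", "sqrt", "select", "slice", "copy_")


def toy_trace(n_rows: int) -> List[Tuple[str, int]]:
    """Record (op, iteration) pairs the way a tracer sees a Python for-loop."""
    nodes = [("empty", -1)]  # the output buffer is allocated once
    for i in range(n_rows):  # n_rows is a concrete int, not a symbol
        for op in BODY_OPS:
            nodes.append((op, i))
    return nodes


print(len(toy_trace(3)))  # 25 nodes: 1 + 3 * 8
print(len(toy_trace(4)))  # 33 nodes: another size gives another graph
```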

However, with dynamic shapes, that's another story: the loop bound x.shape[0] gets specialized to a constant and the export fails.

```python
x_rows = torch.export.Dim("x_rows")
y_rows = torch.export.Dim("y_rows")
dim = torch.export.Dim("dim")

try:
    ep = torch.export.export(
        model, (x, y), dynamic_shapes={"x": {0: x_rows, 1: dim}, "y": {0: y_rows, 1: dim}}
    )
    print(ep.graph)
except Exception as e:
    print(e)
```

Constraints violated (x_rows)! For more information, run with TORCH_LOGS="+dynamic".

- You marked x_rows as dynamic but your code specialized it to be a constant (3). Either remove the mark_dynamic or use a less strict API such as maybe_mark_dynamic or Dim.AUTO.

Suggested fixes:

x_rows = 3

The error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.

Suggested Patch

We need to rewrite the module with the function torch.ops.higher_order.scan().
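Written as a scan recurrence (a sketch, where Y has rows y_1, …, y_m and x_i is the i-th row of x), y becomes a carry that never changes and each step emits one row of the n × m distance matrix D:

```latex
c_0 = Y, \qquad
(c_i, o_i) = f(c_{i-1}, x_i) = \Big(c_{i-1},\ \big(\lVert y_j - x_i \rVert_2\big)_{j=1}^{m}\Big),
\quad i = 1, \dots, n, \qquad
D = \begin{bmatrix} o_1 \\ \vdots \\ o_n \end{bmatrix} \in \mathbb{R}^{n \times m}
```

Since the carry is constant, the final carry c_n = Y carries no information for the caller; only the stacked outputs matter.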

```python
def dist(y: torch.Tensor, scanned_x: torch.Tensor):
    # one scan step: distances between every row of y and one row of x
    sub = y - scanned_x.reshape((1, -1))
    sq = sub * sub
    rd = torch.sqrt(sq.sum(axis=1))
    # y is cloned: returning it as-is raises UnsupportedAliasMutationException
    # (Combine_fn might be aliasing the input!)
    return [y.clone(), rd]  # [new carry, per-step output]


class ModuleWithControlFlowLoopScan(torch.nn.Module):
    def forward(self, x, y):
        # carry = [y], scanned over the rows of x
        carry, out = torch.ops.higher_order.scan(dist, [y], [x], additional_inputs=[])
        return out


model = ModuleWithControlFlowLoopScan()
print(f"shape={pwd.shape}, discrepancies={torch.abs(expected - model(x,y)).max()}")
```

shape=(3, 5), discrepancies=1.6672823122121372e-07
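The y.clone() in dist works around the restriction mentioned in its comment: the combine function should not return one of its inputs as-is. A minimal sketch of such a check in plain Python, assuming object identity as a stand-in for tensor aliasing. `check_no_alias`, `aliasing_step` and `copying_step` are hypothetical names, and PyTorch's real check, which works on tensors, is not reproduced here.

```python
from typing import Any, Callable, Sequence


def check_no_alias(combine_fn: Callable[..., Sequence[Any]], *inputs: Any) -> list:
    """Call combine_fn and reject any output that is the very same object as an input."""
    outputs = list(combine_fn(*inputs))
    for k, out in enumerate(outputs):
        if any(out is inp for inp in inputs):
            raise ValueError(f"output {k} aliases an input of combine_fn")
    return outputs


def aliasing_step(carry, x):
    return [carry, x + 1]  # returns the carry object itself


def copying_step(carry, x):
    return [list(carry), x + 1]  # returns a fresh copy, like y.clone()


carry = [1.0, 2.0]
print(check_no_alias(copying_step, carry, 3))  # [[1.0, 2.0], 4]
try:
    check_no_alias(aliasing_step, carry, 3)
except ValueError as e:
    print(e)  # output 0 aliases an input of combine_fn
```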

That works. Let’s export again.

graph():

%x : [num_users=1] = placeholder[target=x]

%y : [num_users=1] = placeholder[target=y]

%scan_combine_graph_0 : [num_users=1] = get_attr[target=scan_combine_graph_0]

%scan : [num_users=2] = call_function[target=torch.ops.higher_order.scan](args = (%scan_combine_graph_0, [%y], [%x], ()), kwargs = {})

%getitem : [num_users=0] = call_function[target=operator.getitem](args = (%scan, 0), kwargs = {})

%getitem_1 : [num_users=1] = call_function[target=operator.getitem](args = (%scan, 1), kwargs = {})

return (getitem_1,)

The graph shows an unused result (getitem, the final carry, with num_users=0), which might confuse the exporter. We need to run torch.export.ExportedProgram.run_decompositions() to remove it.

```python
ep = ep.run_decompositions({})
print(ep.graph)
```

graph():

%x : [num_users=1] = placeholder[target=x]

%y : [num_users=1] = placeholder[target=y]

%scan_combine_graph_0 : [num_users=1] = get_attr[target=scan_combine_graph_0]

%scan : [num_users=1] = call_function[target=torch.ops.higher_order.scan](args = (%scan_combine_graph_0, [%y], [%x], ()), kwargs = {})

%getitem_1 : [num_users=1] = call_function[target=operator.getitem](args = (%scan, 1), kwargs = {})

return (getitem_1,)
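Between the two graphs, the node getitem (num_users=0) has disappeared. On this graph the effect resembles dead-code elimination, sketched below on a toy node list. `Node` and `eliminate_dead_nodes` are hypothetical, and the sketch only illustrates the idea; it makes no claim about what run_decompositions() does internally.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    inputs: List[str] = field(default_factory=list)
    pinned: bool = False  # graph inputs/outputs are never removed
    has_side_effects: bool = False  # e.g. in-place ops such as copy_


def eliminate_dead_nodes(nodes: List[Node]) -> List[Node]:
    """Repeatedly drop nodes whose result is never used (toy version)."""
    changed = True
    while changed:
        changed = False
        used = {name for n in nodes for name in n.inputs}
        kept = []
        for n in nodes:
            if n.pinned or n.has_side_effects or n.name in used:
                kept.append(n)
            else:
                changed = True  # removing a node may make its inputs dead too
        nodes = kept
    return nodes


# The graph printed before run_decompositions(), reduced to its data flow.
graph = [
    Node("x", pinned=True),
    Node("y", pinned=True),
    Node("scan_combine_graph_0"),
    Node("scan", ["scan_combine_graph_0", "y", "x"]),
    Node("getitem", ["scan"]),  # final carry, num_users=0
    Node("getitem_1", ["scan"]),
    Node("output", ["getitem_1"], pinned=True),
]
print([n.name for n in eliminate_dead_nodes(graph)])
```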

Let's now export the model to ONNX.

opset: domain='' version=18

opset: domain='local_functions' version=1

input: name='x' type=dtype('float32') shape=['x_rows', 'dim']

input: name='y' type=dtype('float32') shape=['y_rows', 'dim']

Scan(y, x, body=G1, num_scan_inputs=1, scan_input_directions=[0], scan_output_axes=[0], scan_output_directions=[0]) -> scan#0, output_0

output: name='output_0' type=dtype('float32') shape=['x_rows', 'y_rows']

----- subgraph ---- Scan - scan - att.body=G1 -- level=1 -- init_0_y,scan_0_x -> output_0,output_1

input: name='init_0_y' type='NOTENSOR' shape=None

input: name='scan_0_x' type='NOTENSOR' shape=None

Constant(value=[1]) -> init7_s1_12

Constant(value=[1, -1]) -> init7_s2_1_-12

Reshape(scan_0_x, init7_s2_1_-12) -> view2

Sub(init_0_y, view2) -> sub_42

Mul(sub_42, sub_42) -> mul_72

ReduceSum(mul_72, init7_s1_12, keepdims=0) -> sum_12

Sqrt(sum_12) -> output_1

Identity(init_0_y) -> output_0

output: name='output_0' type='NOTENSOR' shape=None

output: name='output_1' type='NOTENSOR' shape=None
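The ONNX Scan node carries y as a state variable (init_0_y) and scans x along axis 0 (scan_0_x). At each step the body G1 returns the unchanged state (Identity) and one row of distances, and Scan stacks those rows into output_0 of shape (x_rows, y_rows). The sketch below emulates that behaviour in plain Python for a single state variable and a single scan input. `run_scan` and `body_g1` are hypothetical names; the real operator also supports several inputs, axes and directions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Matrix = List[List[float]]


def body_g1(state_y: Matrix, row_x: Sequence[float]) -> Tuple[Matrix, List[float]]:
    """Plain-Python version of subgraph G1: Reshape, Sub, Mul, ReduceSum, Sqrt, Identity."""
    dists = []
    for y_row in state_y:
        sub = [a - b for a, b in zip(y_row, row_x)]  # Sub, broadcasting the reshaped row
        sq = [s * s for s in sub]  # Mul(sub, sub)
        dists.append(math.sqrt(sum(sq)))  # ReduceSum over axis 1, then Sqrt
    return state_y, dists  # Identity(state), scan output


def run_scan(body: Callable, init_state, scan_input: Sequence) -> Tuple[object, list]:
    """Single state variable, single scan input iterated along axis 0."""
    state, scan_outputs = init_state, []
    for row in scan_input:
        state, out = body(state, row)
        scan_outputs.append(out)  # stacked along axis 0 (scan_output_axes=[0])
    return state, scan_outputs


x = [[0.0, 0.0], [1.0, 1.0]]
y = [[3.0, 4.0], [0.0, 0.0], [1.0, 1.0]]
final_state, output_0 = run_scan(body_g1, y, x)
print(output_0)  # one row per row of x, one column per row of y
```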

We can also inline the local function.

opset: domain='' version=18

opset: domain='local_functions' version=1

input: name='x' type=dtype('float32') shape=['x_rows', 'dim']

input: name='y' type=dtype('float32') shape=['y_rows', 'dim']

Scan(y, x, body=G1, num_scan_inputs=1, scan_input_directions=[0], scan_output_axes=[0], scan_output_directions=[0]) -> scan#0, output_0

output: name='output_0' type=dtype('float32') shape=['x_rows', 'y_rows']

----- subgraph ---- Scan - scan - att.body=G1 -- level=1 -- init_0_y,scan_0_x -> output_0,output_1

input: name='init_0_y' type='NOTENSOR' shape=None

input: name='scan_0_x' type='NOTENSOR' shape=None

Constant(value=[1]) -> init7_s1_12

Constant(value=[1, -1]) -> init7_s2_1_-12

Reshape(scan_0_x, init7_s2_1_-12) -> view2

Sub(init_0_y, view2) -> sub_42

Mul(sub_42, sub_42) -> mul_72

ReduceSum(mul_72, init7_s1_12, keepdims=0) -> sum_12

Sqrt(sum_12) -> output_1

Identity(init_0_y) -> output_0

output: name='output_0' type='NOTENSOR' shape=None

output: name='output_1' type='NOTENSOR' shape=None

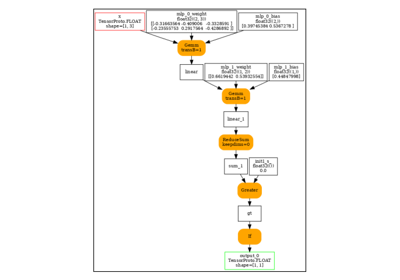

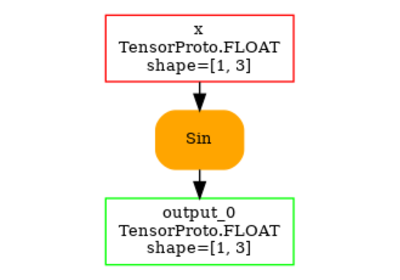

And visually.

Total running time of the script: (0 minutes 1.220 seconds)
