
Check the exporter on a dummy from HuggingFace

Every conversion task must be tested on a large scale. One huge source of models is HuggingFace. We focus on the model Tiny-LLM. To avoid downloading any weights, we write a function that creates a randomly initialized model with the same architecture.

Guess the cache dimension

The first step is to guess the shapes of the dummy inputs, in particular those of the cache. Let's use the true model for that, running the example from the model page.

```python
from typing import Any, Dict

import torch
import transformers
from onnx_diagnostic.helpers import string_type
from onnx_diagnostic.helpers.cache_helper import make_dynamic_cache

MODEL_NAME = "arnir0/Tiny-LLM"
tokenizer = transformers.AutoTokenizer.from_pretrained(MODEL_NAME)
model = transformers.AutoModelForCausalLM.from_pretrained(MODEL_NAME)
```

Loading weights: 100%|██████████| 12/12 [00:00<00:00, 469.35it/s, Materializing param=model.norm.weight]

We wrap the forward method so that it prints the type and shape of every input, including the cache.

```python
def _forward_(*args, _f=None, **kwargs):
    assert _f is not None
    if hasattr(torch.compiler, "is_exporting") and not torch.compiler.is_exporting():
        # torch.compiler.is_exporting requires torch>=2.7
        print(string_type([args, kwargs], with_shape=True))
    return _f(*args, **kwargs)


keep_model_forward = model.forward
model.forward = lambda *args, _f=keep_model_forward, **kwargs: _forward_(*args, _f=_f, **kwargs)
```
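The same information can also be collected without replacing `model.forward`, by registering a forward pre-hook. This is a swapped-in technique, not what the example above does. The sketch below assumes torch>=2.0, where `register_forward_pre_hook(..., with_kwargs=True)` passes both positional and keyword arguments to the hook; `describe` is a hypothetical, much simpler stand-in for `string_type`, and a `torch.nn.Linear` replaces the language model.

```python
import torch


def describe(obj):
    """Hypothetical helper: nested description of tensors as dtype + shape."""
    if isinstance(obj, torch.Tensor):
        return f"{obj.dtype}{tuple(obj.shape)}"
    if isinstance(obj, (list, tuple)):
        return [describe(o) for o in obj]
    if isinstance(obj, dict):
        return {k: describe(v) for k, v in obj.items()}
    return type(obj).__name__


def attach_shape_logger(module, calls):
    """Record (args, kwargs) descriptions each time module(...) is called."""

    def hook(mod, args, kwargs):
        calls.append((describe(args), describe(kwargs)))
        # Returning None leaves the inputs unchanged.

    return module.register_forward_pre_hook(hook, with_kwargs=True)


model = torch.nn.Linear(4, 2)
calls = []
handle = attach_shape_logger(model, calls)
model(torch.randn(3, 4))  # logged
handle.remove()  # equivalent of restoring the original forward
model(torch.randn(5, 4))  # not logged anymore
print(calls)
```

Hooks fire when the module is called through `model(...)`, so they should also see the calls made by `generate`. Unlike the patched forward above, this sketch does not skip logging while exporting.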

Let’s run the model.

```python
prompt = "Continue: it rains..."
inputs = tokenizer.encode(prompt, return_tensors="pt")
outputs = model.generate(
    inputs, max_length=50, temperature=1, top_k=50, top_p=0.95, do_sample=True
)
generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(generated_text)
```

#2[(),dict(cache_position:T7s8,past_key_values:DynamicCache(key_cache=#0[], value_cache=#0[]),input_ids:T7s1x8,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x8x96], value_cache=#1[T1s1x1x8x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x9x96], value_cache=#1[T1s1x1x9x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x10x96], value_cache=#1[T1s1x1x10x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x11x96], value_cache=#1[T1s1x1x11x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x12x96], value_cache=#1[T1s1x1x12x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x13x96], value_cache=#1[T1s1x1x13x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x14x96], value_cache=#1[T1s1x1x14x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x15x96], value_cache=#1[T1s1x1x15x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x16x96], value_cache=#1[T1s1x1x16x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x17x96], value_cache=#1[T1s1x1x17x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x18x96], value_cache=#1[T1s1x1x18x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x19x96], value_cache=#1[T1s1x1x19x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x20x96], value_cache=#1[T1s1x1x20x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x21x96], value_cache=#1[T1s1x1x21x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x22x96], value_cache=#1[T1s1x1x22x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x23x96], value_cache=#1[T1s1x1x23x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x24x96], value_cache=#1[T1s1x1x24x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x25x96], value_cache=#1[T1s1x1x25x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x26x96], value_cache=#1[T1s1x1x26x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x27x96], value_cache=#1[T1s1x1x27x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x28x96], value_cache=#1[T1s1x1x28x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x29x96], value_cache=#1[T1s1x1x29x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x30x96], value_cache=#1[T1s1x1x30x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x31x96], value_cache=#1[T1s1x1x31x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x32x96], value_cache=#1[T1s1x1x32x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x33x96], value_cache=#1[T1s1x1x33x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x34x96], value_cache=#1[T1s1x1x34x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x35x96], value_cache=#1[T1s1x1x35x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x36x96], value_cache=#1[T1s1x1x36x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x37x96], value_cache=#1[T1s1x1x37x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x38x96], value_cache=#1[T1s1x1x38x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x39x96], value_cache=#1[T1s1x1x39x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x40x96], value_cache=#1[T1s1x1x40x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x41x96], value_cache=#1[T1s1x1x41x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x42x96], value_cache=#1[T1s1x1x42x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x43x96], value_cache=#1[T1s1x1x43x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x44x96], value_cache=#1[T1s1x1x44x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x45x96], value_cache=#1[T1s1x1x45x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x46x96], value_cache=#1[T1s1x1x46x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x47x96], value_cache=#1[T1s1x1x47x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x48x96], value_cache=#1[T1s1x1x48x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]

Continue: it rains...

So then, the more I would have to think again?

Bernes on the way, just don't take it out, because I love them!

I'm sure it's
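The log can be read as follows, assuming `string_type` writes a tensor as `T<ONNX element type>s<dimensions joined by x>`. This is consistent with the first call: the 8 prompt tokens appear as `input_ids:T7s1x8`, and 7 is INT64 in ONNX's `TensorProto` (1 is FLOAT). The first call processes the 8 prompt tokens with an empty cache; each of the 41 following calls feeds a single token while the cache grows from 8 to 48, so 42 tokens are generated and 8 + 42 = 50 = `max_length`. Every cache tensor has shape (batch=1, key/value heads=1, cached tokens, 96). The small decoder below, with the hypothetical names `decode_tensors` and `cache_lengths`, extracts these numbers from the log lines.

```python
import re

# ONNX TensorProto element types (subset) -> dtype names.
ONNX_DTYPES = {1: "float32", 6: "int32", 7: "int64", 9: "bool", 10: "float16", 11: "float64", 16: "bfloat16"}

# Assumed tensor notation: T<element type>s<d0>x<d1>x...
TENSOR = re.compile(r"T(\d+)s(\d+(?:x\d+)*)")


def decode_tensors(text):
    """Return (dtype, shape) for every tensor token found in a logged line."""
    return [
        (ONNX_DTYPES.get(int(code), f"onnx:{code}"), tuple(int(d) for d in dims.split("x")))
        for code, dims in TENSOR.findall(text)
    ]


def cache_lengths(lines):
    """Number of cached tokens (dimension 2 of the first 4D tensor) per call, 0 if none."""
    lengths = []
    for line in lines:
        kv = [shape for _, shape in decode_tensors(line) if len(shape) == 4]
        lengths.append(kv[0][2] if kv else 0)
    return lengths


log = [
    "#2[(),dict(cache_position:T7s8,past_key_values:DynamicCache(key_cache=#0[], value_cache=#0[]),input_ids:T7s1x8,inputs_embeds:None,use_cache:bool,return_dict:bool)]",
    "#2[(),dict(cache_position:T7s1,past_key_values:DynamicCache(key_cache=#1[T1s1x1x8x96], value_cache=#1[T1s1x1x8x96]),input_ids:T7s1x1,inputs_embeds:None,use_cache:bool,return_dict:bool)]",
]
print(decode_tensors(log[1]))
print(cache_lengths(log))  # [0, 8]
```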

Let’s restore the forward as it was.

```python
model.forward = keep_model_forward
```

The model creation

Let’s create an untrained model.

```python
def get_tiny_llm(
    batch_size: int = 2,
    input_cache: bool = True,
    common_dynamic_shapes: bool = True,
    dynamic_rope: bool = False,
    **kwargs,
) -> Dict[str, Any]:
    """
    Returns a randomly initialized (untrained) model with dummy inputs and dynamic shapes.

    :param batch_size: batch size
    :param input_cache: generate data for this iteration with or without cache
        (unused in this version, the inputs always include a cache)
    :param kwargs: to overwrite the configuration, example ``num_hidden_layers=1``
    :param common_dynamic_shapes: if True returns dynamic shapes as well
        (unused in this version, dynamic shapes are always returned)
    :param dynamic_rope: use dynamic rope (see :class:`transformers.LlamaConfig`)
    :return: dictionary with keys ``model``, ``inputs`` and ``dynamic_shapes``
    """
    import transformers

    config = {
        "architectures": ["LlamaForCausalLM"],
        "bos_token_id": 1,
        "eos_token_id": 2,
        "hidden_act": "silu",
        "hidden_size": 192,
        "initializer_range": 0.02,
        "intermediate_size": 1024,
        "max_position_embeddings": 1024,
        "model_type": "llama",
        "num_attention_heads": 2,
        "num_hidden_layers": 1,
        "num_key_value_heads": 1,
        "pretraining_tp": 1,
        "rms_norm_eps": 1e-05,
        "rope_scaling": {"rope_type": "dynamic", "factor": 10.0} if dynamic_rope else None,
        "tie_word_embeddings": False,
        "torch_dtype": "float32",
        "transformers_version": "4.31.0.dev0",
        "use_cache": True,
        "vocab_size": 32000,
    }
    config.update(**kwargs)
    conf = transformers.LlamaConfig(**config)
    model = transformers.LlamaForCausalLM(conf)
    model.eval()

    # now the inputs
    cache_last_dim = 96  # head dimension: hidden_size // num_attention_heads
    sequence_length = 30  # tokens already stored in the cache
    sequence_length2 = 3  # new tokens
    num_key_value_heads = 1  # must match the configuration
    max_token_id = config["vocab_size"] - 1
    n_layers = config["num_hidden_layers"]

    batch = torch.export.Dim("batch", min=1, max=1024)
    seq_length = torch.export.Dim("seq_length", min=1, max=4096)
    cache_length = torch.export.Dim("cache_length", min=1, max=4096)

    shapes = {
        "input_ids": {0: batch, 1: seq_length},
        "attention_mask": {
            0: batch,
            1: torch.export.Dim.DYNAMIC,  # cache_length + seq_length
        },
        "past_key_values": [
            [{0: batch, 2: cache_length} for _ in range(n_layers)],
            [{0: batch, 2: cache_length} for _ in range(n_layers)],
        ],
    }
    inputs = dict(
        input_ids=torch.randint(0, max_token_id, (batch_size, sequence_length2)).to(torch.int64),
        attention_mask=torch.ones((batch_size, sequence_length + sequence_length2)).to(
            torch.int64
        ),
        past_key_values=make_dynamic_cache(
            [
                (
                    torch.randn(batch_size, num_key_value_heads, sequence_length, cache_last_dim),
                    torch.randn(batch_size, num_key_value_heads, sequence_length, cache_last_dim),
                )
                for _ in range(n_layers)
            ]
        ),
    )
    return dict(inputs=inputs, model=model, dynamic_shapes=shapes)
```
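The hard-coded sizes in `get_tiny_llm` follow from the configuration. For LLaMA-style models the head dimension defaults to `hidden_size // num_attention_heads` = 192 // 2 = 96 (`cache_last_dim`), the cache stores `num_key_value_heads` = 1 head per layer, and the attention mask must cover the cached and the new tokens, 30 + 3 = 33. That sum is why the second dimension of `attention_mask` is declared `Dim.DYNAMIC` rather than with a new named dimension. The helper `expected_shapes` below is hypothetical; it only redoes this arithmetic, and with batch 1 and 8 cached tokens it gives the cache shape seen in the generation log.

```python
def expected_shapes(config, batch_size, past_length, new_length):
    """Hypothetical helper: input shapes for a LLaMA-like config with a cache."""
    head_dim = config["hidden_size"] // config["num_attention_heads"]
    kv_shape = (batch_size, config["num_key_value_heads"], past_length, head_dim)
    n_layers = config["num_hidden_layers"]
    return {
        "input_ids": (batch_size, new_length),
        "attention_mask": (batch_size, past_length + new_length),
        "key_cache": [kv_shape] * n_layers,
        "value_cache": [kv_shape] * n_layers,
    }


config = {"hidden_size": 192, "num_attention_heads": 2, "num_key_value_heads": 1, "num_hidden_layers": 1}
shapes = expected_shapes(config, batch_size=2, past_length=30, new_length=3)
print(shapes["attention_mask"])  # (2, 33)
print(shapes["key_cache"])  # [(2, 1, 30, 96)]
print(expected_shapes(config, 1, 8, 1)["key_cache"][0])  # (1, 1, 8, 96)
```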

Let’s get the model, inputs and dynamic shapes.

```python
experiment = get_tiny_llm()
model, inputs, dynamic_shapes = (
    experiment["model"],
    experiment["inputs"],
    experiment["dynamic_shapes"],
)
expected_output = model(**inputs)
print("result type", type(expected_output))
```

result type <class 'transformers.modeling_outputs.CausalLMOutputWithPast'>

It works.

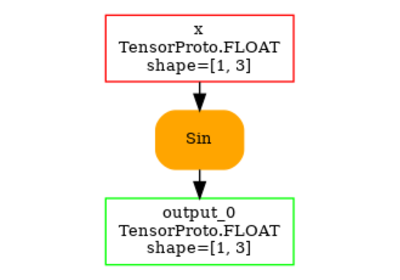

ExportedProgram

```python
try:
    ep = torch.export.export(model, (), inputs, dynamic_shapes=dynamic_shapes)
    print("It worked:")
    print(ep)
except Exception as e:
    # To work, it needs at least PRs:
    # * https://github.com/huggingface/transformers/pull/36311
    # * https://github.com/huggingface/transformers/pull/36652
    print("It failed:", e)
```

It failed: Cannot associate shape [[{0: Dim('batch', min=1, max=1024), 2: Dim('cache_length', min=1, max=4096)}], [{0: Dim('batch', min=1, max=1024), 2: Dim('cache_length', min=1, max=4096)}]] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None)

For more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation

The error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.

Total running time of the script: (1 minutes 2.335 seconds)
