
Export Phi-3.5-mini-instruct piece by piece

torch.export.export() often fails on large models because control flow or some instructions break the propagation of dynamic shapes (see …). The error usually indicates where the model implementation could be fixed. When that is not possible, the model can be exported piece by piece instead: every module is converted separately from its submodules, so a model can be exported even if one of its submodules cannot.
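The bookkeeping behind this strategy can be illustrated with a toy module tree. The sketch below is pure Python and does not call torch.export: `ToyModule`, its `own_code_exportable` flag and `export_piece_by_piece` are hypothetical names standing for a submodule, for whether exporting its own forward (with every child treated as an opaque call) succeeds, and for the walk over the tree. It only shows why a failure stays local to one module; it is not the implementation used by the library.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyModule:
    """Hypothetical stand-in for a torch.nn.Module."""

    name: str
    # Assumption: True if exporting this module's own code succeeds
    # when each child module is treated as an opaque call.
    own_code_exportable: bool
    children: List["ToyModule"] = field(default_factory=list)


def export_piece_by_piece(module: ToyModule, prefix: str = "") -> Dict[str, bool]:
    """Tries every module separately and returns {path: succeeded}."""
    path = f"{prefix}.{module.name}" if prefix else module.name
    # A module's attempt does not depend on its children's results,
    # so one failing submodule does not stop the others from being converted.
    results = {path: module.own_code_exportable}
    for child in module.children:
        results.update(export_piece_by_piece(child, path))
    return results


model = ToyModule(
    "model",
    True,
    [
        ToyModule("embed_tokens", True),
        ToyModule("layers[0]", True, [ToyModule("self_attn", False)]),
        ToyModule("norm", True),
    ],
)
results = export_piece_by_piece(model)
failed = [name for name, ok in results.items() if not ok]
print(failed)  # ['model.layers[0].self_attn']
```

In a real export, the failing piece would still have to be executed somehow (for example eagerly) when the converted parent calls it; the toy model leaves that part out.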

Model

import pprint
from typing import Any, Dict

import torch
import torch._export.tools
import transformers
from onnx_diagnostic.helpers.cache_helper import make_dynamic_cache
from experimental_experiment.helpers import string_type
from experimental_experiment.torch_interpreter.piece_by_piece import (
    trace_execution_piece_by_piece,
)

def get_phi35_untrained(batch_size: int = 2, **kwargs) -> Dict[str, Any]:
    """
    Gets an untrained model (random weights) with two sets of inputs of different shapes.

    :param batch_size: batch size
    :param kwargs: overrides configuration entries, for example ``num_hidden_layers=1``
    :return: dictionary with keys ``model``, ``inputs``, ``inputs2``

    See `Phi-3.5-mini-instruct/config.json
    <https://huggingface.co/microsoft/Phi-3.5-mini-instruct/blob/main/config.json>`_.
    """
    config = {
        "_name_or_path": "Phi-3.5-mini-instruct",
        "architectures": ["Phi3ForCausalLM"],
        "attention_dropout": 0.0,
        "auto_map": {
            "AutoConfig": "configuration_phi3.Phi3Config",
            "AutoModelForCausalLM": "modeling_phi3.Phi3ForCausalLM",
        },
        "bos_token_id": 1,
        "embd_pdrop": 0.0,
        "eos_token_id": 32000,
        "hidden_act": "silu",
        "hidden_size": 3072,
        "initializer_range": 0.02,
        "intermediate_size": 8192,
        "max_position_embeddings": 131072,
        "model_type": "phi3",
        "num_attention_heads": 32,
        "num_hidden_layers": 32,
        "num_key_value_heads": 32,
        "original_max_position_embeddings": 4096,
        "pad_token_id": 32000,
        "resid_pdrop": 0.0,
        "rms_norm_eps": 1e-05,
        "rope_scaling": {
            "long_factor": [
                1.0800000429153442,
                1.1100000143051147,
                1.1399999856948853,
                1.340000033378601,
                1.5899999141693115,
                1.600000023841858,
                1.6200000047683716,
                2.620000123977661,
                3.2300000190734863,
                3.2300000190734863,
                4.789999961853027,
                7.400000095367432,
                7.700000286102295,
                9.09000015258789,
                12.199999809265137,
                17.670000076293945,
                24.46000099182129,
                28.57000160217285,
                30.420001983642578,
                30.840002059936523,
                32.590003967285156,
                32.93000411987305,
                42.320003509521484,
                44.96000289916992,
                50.340003967285156,
                50.45000457763672,
                57.55000305175781,
                57.93000411987305,
                58.21000289916992,
                60.1400032043457,
                62.61000442504883,
                62.62000274658203,
                62.71000289916992,
                63.1400032043457,
                63.1400032043457,
                63.77000427246094,
                63.93000411987305,
                63.96000289916992,
                63.970001220703125,
                64.02999877929688,
                64.06999969482422,
                64.08000183105469,
                64.12000274658203,
                64.41000366210938,
                64.4800033569336,
                64.51000213623047,
                64.52999877929688,
                64.83999633789062,
            ],
            "short_factor": [
                1.0,
                1.0199999809265137,
                1.0299999713897705,
                1.0299999713897705,
                1.0499999523162842,
                1.0499999523162842,
                1.0499999523162842,
                1.0499999523162842,
                1.0499999523162842,
                1.0699999332427979,
                1.0999999046325684,
                1.1099998950958252,
                1.1599998474121094,
                1.1599998474121094,
                1.1699998378753662,
                1.2899998426437378,
                1.339999794960022,
                1.679999828338623,
                1.7899998426437378,
                1.8199998140335083,
                1.8499997854232788,
                1.8799997568130493,
                1.9099997282028198,
                1.9399996995925903,
                1.9899996519088745,
                2.0199997425079346,
                2.0199997425079346,
                2.0199997425079346,
                2.0199997425079346,
                2.0199997425079346,
                2.0199997425079346,
                2.0299997329711914,
                2.0299997329711914,
                2.0299997329711914,
                2.0299997329711914,
                2.0299997329711914,
                2.0299997329711914,
                2.0299997329711914,
                2.0299997329711914,
                2.0299997329711914,
                2.0799996852874756,
                2.0899996757507324,
                2.189999580383301,
                2.2199995517730713,
                2.5899994373321533,
                2.729999542236328,
                2.749999523162842,
                2.8399994373321533,
            ],
            "type": "longrope",
        },
        "rope_theta": 10000.0,
        "sliding_window": 262144,
        "tie_word_embeddings": False,
        "torch_dtype": "bfloat16",
        "use_cache": True,
        "attention_bias": False,
        "vocab_size": 32064,
    }
    config.update(**kwargs)
    conf = transformers.Phi3Config(**config)
    model = transformers.Phi3ForCausalLM(conf)
    model.eval()

    # Derive the cache dimensions from the (possibly overridden) configuration.
    n_kv_heads = config["num_key_value_heads"]
    head_dim = config["hidden_size"] // config["num_attention_heads"]  # 3072 // 32 = 96
    n_layers = config["num_hidden_layers"]

    # One (key, value) pair per layer, shaped (batch, heads, past_length, head_dim).
    cache = make_dynamic_cache(
        [
            (
                torch.randn(batch_size, n_kv_heads, 30, head_dim),
                torch.randn(batch_size, n_kv_heads, 30, head_dim),
            )
            for _ in range(n_layers)
        ]
    )
    # A second cache with a different batch size and past length.
    cache2 = make_dynamic_cache(
        [
            (
                torch.randn(batch_size + 1, n_kv_heads, 31, head_dim),
                torch.randn(batch_size + 1, n_kv_heads, 31, head_dim),
            )
            for _ in range(n_layers)
        ]
    )

    # The attention mask spans the past and the new tokens: 30 + 3 = 33.
    inputs = dict(
        input_ids=torch.randint(0, config["vocab_size"], (batch_size, 3)).to(torch.int64),
        attention_mask=torch.ones((batch_size, 33)).to(torch.int64),
        past_key_values=cache,
    )
    # 31 past positions + 4 new tokens = 35.
    inputs2 = dict(
        input_ids=torch.randint(0, config["vocab_size"], (batch_size + 1, 4)).to(torch.int64),
        attention_mask=torch.ones((batch_size + 1, 35)).to(torch.int64),
        past_key_values=cache2,
    )
    return dict(inputs=inputs, model=model, inputs2=inputs2)


data = get_phi35_untrained(num_hidden_layers=2)
model, inputs, inputs2 = data["model"], data["inputs"], data["inputs2"]
print(string_type(inputs, with_shape=True))

dict(input_ids:T7s2x3,attention_mask:T7s2x33,past_key_values:DynamicCache(key_cache=#2[T1s2x32x30x96,T1s2x32x30x96], value_cache=#2[T1s2x32x30x96,T1s2x32x30x96]))
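The compact notation printed by `string_type` can be decoded by hand. Assuming `T<n>` is an ONNX element type code (1 for float32, 7 for int64, 9 for bool) and `s2x3` lists the dimensions, the sketch below parses a few of the strings printed above and checks how the shapes built by `get_phi35_untrained` relate to each other. `parse_tensor` is a hypothetical helper written for illustration; it only handles the `T<n>s<dims>` form.

```python
import re
from typing import Tuple

# Assumption: a subset of the ONNX TensorProto element type codes.
ONNX_DTYPES = {1: "float32", 7: "int64", 9: "bool"}


def parse_tensor(text: str) -> Tuple[str, Tuple[int, ...]]:
    """Decodes strings such as 'T7s2x3' into ('int64', (2, 3))."""
    match = re.fullmatch(r"T(\d+)s(\d+(?:x\d+)*)", text)
    if match is None:
        raise ValueError(f"unsupported format: {text!r}")
    dtype = ONNX_DTYPES[int(match.group(1))]
    shape = tuple(int(d) for d in match.group(2).split("x"))
    return dtype, shape


_, ids = parse_tensor("T7s2x3")  # input_ids: batch x new tokens
_, mask = parse_tensor("T7s2x33")  # attention_mask: batch x total length
_, key = parse_tensor("T1s2x32x30x96")  # one key cache: batch x heads x past x head_dim

# The mask covers the 30 cached positions plus the 3 new tokens.
assert mask[1] == key[2] + ids[1]
# head_dim = hidden_size / num_attention_heads = 3072 / 32
assert key[3] == 3072 // 32
print(ids, mask, key)  # (2, 3) (2, 33) (2, 32, 30, 96)
```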

Dynamic Shapes

We want to infer the dynamic shapes from the two sets of inputs. To do so, we use a function that traces the execution of the model, including its submodules: it runs the model once with each set of inputs and stores every intermediate input and output.
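The principle of inferring dynamic dimensions from two runs can be sketched in a few lines: an axis whose size differs between the runs is dynamic, the others are kept static. This is a simplified sketch, not the library's algorithm; `infer_dynamic_dims` is a hypothetical helper, and the shapes are copied from the trace of `layers[0]` printed further down.

```python
from typing import Dict, List, Sequence, Tuple


def infer_dynamic_dims(shapes: Sequence[Tuple[int, ...]]) -> List[int]:
    """Returns the axes whose size differs between at least two runs."""
    if len({len(s) for s in shapes}) != 1:
        raise ValueError(f"rank changes between runs: {shapes}")
    return [axis for axis in range(len(shapes[0])) if len({s[axis] for s in shapes}) > 1]


# Shapes seen for some inputs of layers[0] during the two runs.
observed: Dict[str, List[Tuple[int, ...]]] = {
    "hidden_states": [(2, 3, 3072), (3, 4, 3072)],
    "attention_mask": [(2, 1, 3, 33), (3, 1, 4, 35)],
    "key_cache[0]": [(2, 32, 30, 96), (3, 32, 31, 96)],
}
dynamic = {name: infer_dynamic_dims(shapes) for name, shapes in observed.items()}
print(dynamic)
# {'hidden_states': [0, 1], 'attention_mask': [0, 2, 3], 'key_cache[0]': [0, 2]}
```

With only two runs, an axis that happens to have the same size in both is treated as static, which is why the two input sets were built with a different batch size, sequence length and past length. The resulting axes could then be expressed with `torch.export.Dim` objects in the `dynamic_shapes` argument of `torch.export.export()`.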

diag = trace_execution_piece_by_piece(model, [inputs, inputs2], verbose=2)

[_trace_forward_execution] -trace- M:__main__-Phi3ForCausalLM.forward

[_trace_forward_execution] -trace- .. M:model-Phi3Model.forward

[_trace_forward_execution] -trace- .... M:embed_tokens-Embedding.forward

[_trace_forward_execution] -trace- .... M:layers[0]-Phi3DecoderLayer.forward

[_trace_forward_execution] -trace- ...... M:self_attn-Phi3Attention.forward

[_trace_forward_execution] -trace- ........ M:o_proj-Linear.forward

[_trace_forward_execution] -trace- ........ M:qkv_proj-Linear.forward

[_trace_forward_execution] -trace- ...... M:mlp-Phi3MLP.forward

[_trace_forward_execution] -trace- ........ M:gate_up_proj-Linear.forward

[_trace_forward_execution] -trace- ........ M:down_proj-Linear.forward

[_trace_forward_execution] -trace- ........ M:activation_fn-SiLUActivation.forward

[_trace_forward_execution] -trace- ...... M:input_layernorm-Phi3RMSNorm.forward

[_trace_forward_execution] -trace- ...... M:post_attention_layernorm-Phi3RMSNorm.forward

[_trace_forward_execution] -trace- ...... M:resid_attn_dropout-Dropout.forward

[_trace_forward_execution] -trace- ...... M:resid_mlp_dropout-Dropout.forward

[_trace_forward_execution] -trace- .... M:layers[1]-Phi3DecoderLayer.forward

[_trace_forward_execution] -trace- ...... M:self_attn-Phi3Attention.forward

[_trace_forward_execution] -trace- ........ M:o_proj-Linear.forward

[_trace_forward_execution] -trace- ........ M:qkv_proj-Linear.forward

[_trace_forward_execution] -trace- ...... M:mlp-Phi3MLP.forward

[_trace_forward_execution] -trace- ........ M:gate_up_proj-Linear.forward

[_trace_forward_execution] -trace- ........ M:down_proj-Linear.forward

[_trace_forward_execution] -trace- ........ M:activation_fn-SiLUActivation.forward

[_trace_forward_execution] -trace- ...... M:input_layernorm-Phi3RMSNorm.forward

[_trace_forward_execution] -trace- ...... M:post_attention_layernorm-Phi3RMSNorm.forward

[_trace_forward_execution] -trace- ...... M:resid_attn_dropout-Dropout.forward

[_trace_forward_execution] -trace- ...... M:resid_mlp_dropout-Dropout.forward

[_trace_forward_execution] -trace- .... M:norm-Phi3RMSNorm.forward

[_trace_forward_execution] -trace- .... M:rotary_emb-Phi3RotaryEmbedding.forward

[_trace_forward_execution] -trace- .. M:lm_head-Linear.forward

[trace_execution_piece_by_piece] run with dict(args:(),kwargs:dict(input_ids:T7s2x3,attention_mask:T7s2x33,past_key_values:DynamicCache(key_cache=#2[T1s2x32x30x96,T1s2x32x30x96], value_cache=#2[T1s2x32x30x96,T1s2x32x30x96])))

[__main__:Phi3ForCausalLM] > **dict(input_ids:T7r2,attention_mask:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]))

[model:Phi3Model] > **dict(input_ids:T7r2,attention_mask:T7r2,position_ids:None,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),inputs_embeds:None,use_cache:None,cache_position:None)

[embed_tokens:Embedding] > T7r2

[embed_tokens:Embedding] < T1r3

[rotary_emb:Phi3RotaryEmbedding] > *(T1r3,), **dict(position_ids:T7r2)

[rotary_emb:Phi3RotaryEmbedding] < *(T1r3,T1r3)

[layers[0]:Phi3DecoderLayer] > *(T1r3,), **dict(attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[input_layernorm:Phi3RMSNorm] > T1r3

[input_layernorm:Phi3RMSNorm] < T1r3

[self_attn:Phi3Attention] > **dict(hidden_states:T1r3,attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[qkv_proj:Linear] > T1r3

[qkv_proj:Linear] < T1r3

[o_proj:Linear] > T1r3

[o_proj:Linear] < T1r3

[self_attn:Phi3Attention] < *(T1r3,None)

[resid_attn_dropout:Dropout] > T1r3

[resid_attn_dropout:Dropout] < T1r3

[post_attention_layernorm:Phi3RMSNorm] > T1r3

[post_attention_layernorm:Phi3RMSNorm] < T1r3

[mlp:Phi3MLP] > T1r3

[gate_up_proj:Linear] > T1r3

[gate_up_proj:Linear] < T1r3

[activation_fn:SiLUActivation] > T1r3

[activation_fn:SiLUActivation] < T1r3

[down_proj:Linear] > T1r3

[down_proj:Linear] < T1r3

[mlp:Phi3MLP] < T1r3

[resid_mlp_dropout:Dropout] > T1r3

[resid_mlp_dropout:Dropout] < T1r3

[layers[0]:Phi3DecoderLayer] < T1r3

[layers[1]:Phi3DecoderLayer] > *(T1r3,), **dict(attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[input_layernorm:Phi3RMSNorm] > T1r3

[input_layernorm:Phi3RMSNorm] < T1r3

[self_attn:Phi3Attention] > **dict(hidden_states:T1r3,attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[qkv_proj:Linear] > T1r3

[qkv_proj:Linear] < T1r3

[o_proj:Linear] > T1r3

[o_proj:Linear] < T1r3

[self_attn:Phi3Attention] < *(T1r3,None)

[resid_attn_dropout:Dropout] > T1r3

[resid_attn_dropout:Dropout] < T1r3

[post_attention_layernorm:Phi3RMSNorm] > T1r3

[post_attention_layernorm:Phi3RMSNorm] < T1r3

[mlp:Phi3MLP] > T1r3

[gate_up_proj:Linear] > T1r3

[gate_up_proj:Linear] < T1r3

[activation_fn:SiLUActivation] > T1r3

[activation_fn:SiLUActivation] < T1r3

[down_proj:Linear] > T1r3

[down_proj:Linear] < T1r3

[mlp:Phi3MLP] < T1r3

[resid_mlp_dropout:Dropout] > T1r3

[resid_mlp_dropout:Dropout] < T1r3

[layers[1]:Phi3DecoderLayer] < T1r3

[norm:Phi3RMSNorm] > T1r3

[norm:Phi3RMSNorm] < T1r3

[model:Phi3Model] < *BaseModelOutputWithPast(last_hidden_state:T1r3,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]))

[lm_head:Linear] > T1r3

[lm_head:Linear] < T1r3

[__main__:Phi3ForCausalLM] < *CausalLMOutputWithPast(logits:T1r3,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]))

[trace_execution_piece_by_piece] run with dict(args:(),kwargs:dict(input_ids:T7s3x4,attention_mask:T7s3x35,past_key_values:DynamicCache(key_cache=#2[T1s3x32x31x96,T1s3x32x31x96], value_cache=#2[T1s3x32x31x96,T1s3x32x31x96])))

[__main__:Phi3ForCausalLM] > **dict(input_ids:T7r2,attention_mask:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]))

[model:Phi3Model] > **dict(input_ids:T7r2,attention_mask:T7r2,position_ids:None,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),inputs_embeds:None,use_cache:None,cache_position:None)

[embed_tokens:Embedding] > T7r2

[embed_tokens:Embedding] < T1r3

[rotary_emb:Phi3RotaryEmbedding] > *(T1r3,), **dict(position_ids:T7r2)

[rotary_emb:Phi3RotaryEmbedding] < *(T1r3,T1r3)

[layers[0]:Phi3DecoderLayer] > *(T1r3,), **dict(attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[input_layernorm:Phi3RMSNorm] > T1r3

[input_layernorm:Phi3RMSNorm] < T1r3

[self_attn:Phi3Attention] > **dict(hidden_states:T1r3,attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[qkv_proj:Linear] > T1r3

[qkv_proj:Linear] < T1r3

[o_proj:Linear] > T1r3

[o_proj:Linear] < T1r3

[self_attn:Phi3Attention] < *(T1r3,None)

[resid_attn_dropout:Dropout] > T1r3

[resid_attn_dropout:Dropout] < T1r3

[post_attention_layernorm:Phi3RMSNorm] > T1r3

[post_attention_layernorm:Phi3RMSNorm] < T1r3

[mlp:Phi3MLP] > T1r3

[gate_up_proj:Linear] > T1r3

[gate_up_proj:Linear] < T1r3

[activation_fn:SiLUActivation] > T1r3

[activation_fn:SiLUActivation] < T1r3

[down_proj:Linear] > T1r3

[down_proj:Linear] < T1r3

[mlp:Phi3MLP] < T1r3

[resid_mlp_dropout:Dropout] > T1r3

[resid_mlp_dropout:Dropout] < T1r3

[layers[0]:Phi3DecoderLayer] < T1r3

[layers[1]:Phi3DecoderLayer] > *(T1r3,), **dict(attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[input_layernorm:Phi3RMSNorm] > T1r3

[input_layernorm:Phi3RMSNorm] < T1r3

[self_attn:Phi3Attention] > **dict(hidden_states:T1r3,attention_mask:T9r4,position_ids:T7r2,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]),use_cache:bool,cache_position:T7r1,position_embeddings:(T1r3,T1r3))

[qkv_proj:Linear] > T1r3

[qkv_proj:Linear] < T1r3

[o_proj:Linear] > T1r3

[o_proj:Linear] < T1r3

[self_attn:Phi3Attention] < *(T1r3,None)

[resid_attn_dropout:Dropout] > T1r3

[resid_attn_dropout:Dropout] < T1r3

[post_attention_layernorm:Phi3RMSNorm] > T1r3

[post_attention_layernorm:Phi3RMSNorm] < T1r3

[mlp:Phi3MLP] > T1r3

[gate_up_proj:Linear] > T1r3

[gate_up_proj:Linear] < T1r3

[activation_fn:SiLUActivation] > T1r3

[activation_fn:SiLUActivation] < T1r3

[down_proj:Linear] > T1r3

[down_proj:Linear] < T1r3

[mlp:Phi3MLP] < T1r3

[resid_mlp_dropout:Dropout] > T1r3

[resid_mlp_dropout:Dropout] < T1r3

[layers[1]:Phi3DecoderLayer] < T1r3

[norm:Phi3RMSNorm] > T1r3

[norm:Phi3RMSNorm] < T1r3

[model:Phi3Model] < *BaseModelOutputWithPast(last_hidden_state:T1r3,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]))

[lm_head:Linear] > T1r3

[lm_head:Linear] < T1r3

[__main__:Phi3ForCausalLM] < *CausalLMOutputWithPast(logits:T1r3,past_key_values:DynamicCache(key_cache=#2[T1r4,T1r4], value_cache=#2[T1r4,T1r4]))

[trace_forward_execution] traced execution of model Phi3ForCausalLM

>>> __main__: Phi3ForCausalLM

> ((),dict(input_ids:CT7s2x3[14302,30977:A22329.0],attention_mask:CT7s2x33[1,1:A1.0],past_key_values:DynamicCache(key_cache=#2[CT1s2x32x30x96[-4.6530022621154785,4.525870323181152:A0.0007576574308194559],CT1s2x32x30x96[-4.304272651672363,4.806103229522705:A-0.002908728721934681]], value_cache=#2[CT1s2x32x30x96[-4.232974529266357,4.303123950958252:A-2.4212665335637202e-05],CT1s2x32x30x96[-4.307050704956055,4.548398494720459:A-0.0010661965557350723]])))

> ((),dict(input_ids:CT7s3x4[2342,30236:A13059.416666666666],attention_mask:CT7s3x35[1,1:A1.0],past_key_values:DynamicCache(key_cache=#2[CT1s3x32x31x96[-4.795901775360107,4.936037540435791:A0.0006760473840221051],CT1s3x32x31x96[-4.504631519317627,4.66909646987915:A0.0002402408084757681]], value_cache=#2[CT1s3x32x31x96[-4.552186965942383,4.805452346801758:A0.00026920107927555037],CT1s3x32x31x96[-4.3572845458984375,4.77432107925415:A0.003039900976282685]])))

>>> model: Phi3Model

> ((),dict(input_ids:CT7s2x3[14302,30977:A22329.0],attention_mask:CT7s2x33[1,1:A1.0],position_ids:None,past_key_values:DynamicCache(key_cache=#2[CT1s2x32x30x96[-4.6530022621154785,4.525870323181152:A0.0007576574308194559],CT1s2x32x30x96[-4.304272651672363,4.806103229522705:A-0.002908728721934681]], value_cache=#2[CT1s2x32x30x96[-4.232974529266357,4.303123950958252:A-2.4212665335637202e-05],CT1s2x32x30x96[-4.307050704956055,4.548398494720459:A-0.0010661965557350723]]),inputs_embeds:None,use_cache:None,cache_position:None))

> ((),dict(input_ids:CT7s3x4[2342,30236:A13059.416666666666],attention_mask:CT7s3x35[1,1:A1.0],position_ids:None,past_key_values:DynamicCache(key_cache=#2[CT1s3x32x31x96[-4.795901775360107,4.936037540435791:A0.0006760473840221051],CT1s3x32x31x96[-4.504631519317627,4.66909646987915:A0.0002402408084757681]], value_cache=#2[CT1s3x32x31x96[-4.552186965942383,4.805452346801758:A0.00026920107927555037],CT1s3x32x31x96[-4.3572845458984375,4.77432107925415:A0.003039900976282685]]),inputs_embeds:None,use_cache:None,cache_position:None))

>>> embed_tokens: Embedding

> ((CT7s2x3[14302,30977:A22329.0],),{})

> ((CT7s3x4[2342,30236:A13059.416666666666],),{})

< (CT1s2x3x3072[-0.0731288269162178,0.08779632300138474:A8.343658588479592e-05],)

< (CT1s3x4x3072[-0.08399917185306549,0.0895691066980362:A4.718146423578052e-05],)

<<<

>>> layers[0]: Phi3DecoderLayer

> ((CT1s2x3x3072[-0.0731288269162178,0.08779632300138474:A8.343658588479592e-05],),dict(attention_mask:CT9s2x1x3x33[False,True:A0.9696969696969697],position_ids:CT7s1x3[30,32:A31.0],past_key_values:DynamicCache(key_cache=#2[CT1s2x32x30x96[-4.6530022621154785,4.525870323181152:A0.0007576574308194559],CT1s2x32x30x96[-4.304272651672363,4.806103229522705:A-0.002908728721934681]], value_cache=#2[CT1s2x32x30x96[-4.232974529266357,4.303123950958252:A-2.4212665335637202e-05],CT1s2x32x30x96[-4.307050704956055,4.548398494720459:A-0.0010661965557350723]]),use_cache:bool=True,cache_position:CT7s3[30,32:A31.0],position_embeddings:(CT1s1x3x96[-1.1855769157409668,1.1902371644973755:A0.746652018013669],CT1s1x3x96[-1.1887905597686768,1.190193772315979:A0.1589894221542636])))

> ((CT1s3x4x3072[-0.08399917185306549,0.0895691066980362:A4.718146423578052e-05],),dict(attention_mask:CT9s3x1x4x35[False,True:A0.9571428571428572],position_ids:CT7s1x4[31,34:A32.5],past_key_values:DynamicCache(key_cache=#2[CT1s3x32x31x96[-4.795901775360107,4.936037540435791:A0.0006760473840221051],CT1s3x32x31x96[-4.504631519317627,4.66909646987915:A0.0002402408084757681]], value_cache=#2[CT1s3x32x31x96[-4.552186965942383,4.805452346801758:A0.00026920107927555037],CT1s3x32x31x96[-4.3572845458984375,4.77432107925415:A0.003039900976282685]]),use_cache:bool=True,cache_position:CT7s4[31,34:A32.5],position_embeddings:(CT1s1x4x96[-1.1855769157409668,1.190237045288086:A0.7129333875218435],CT1s1x4x96[-1.1719439029693604,1.1902378797531128:A0.18296290554159592])))

>>> self_attn: Phi3Attention

> ((),dict(hidden_states:CT1s2x3x3072[-3.587798833847046,4.40887975692749:A0.003921798224335286],attention_mask:CT9s2x1x3x33[False,True:A0.9696969696969697],position_ids:CT7s1x3[30,32:A31.0],past_key_values:DynamicCache(key_cache=#2[CT1s2x32x30x96[-4.6530022621154785,4.525870323181152:A0.0007576574308194559],CT1s2x32x30x96[-4.304272651672363,4.806103229522705:A-0.002908728721934681]], value_cache=#2[CT1s2x32x30x96[-4.232974529266357,4.303123950958252:A-2.4212665335637202e-05],CT1s2x32x30x96[-4.307050704956055,4.548398494720459:A-0.0010661965557350723]]),use_cache:bool=True,cache_position:CT7s3[30,32:A31.0],position_embeddings:(CT1s1x3x96[-1.1855769157409668,1.1902371644973755:A0.746652018013669],CT1s1x3x96[-1.1887905597686768,1.190193772315979:A0.1589894221542636])))

> ((),dict(hidden_states:CT1s3x4x3072[-4.229971885681152,4.4241862297058105:A0.002278887272579608],attention_mask:CT9s3x1x4x35[False,True:A0.9571428571428572],position_ids:CT7s1x4[31,34:A32.5],past_key_values:DynamicCache(key_cache=#2[CT1s3x32x31x96[-4.795901775360107,4.936037540435791:A0.0006760473840221051],CT1s3x32x31x96[-4.504631519317627,4.66909646987915:A0.0002402408084757681]], value_cache=#2[CT1s3x32x31x96[-4.552186965942383,4.805452346801758:A0.00026920107927555037],CT1s3x32x31x96[-4.3572845458984375,4.77432107925415:A0.003039900976282685]]),use_cache:bool=True,cache_position:CT7s4[31,34:A32.5],position_embeddings:(CT1s1x4x96[-1.1855769157409668,1.190237045288086:A0.7129333875218435],CT1s1x4x96[-1.1719439029693604,1.1902378797531128:A0.18296290554159592])))

>>> o_proj: Linear

> ((CT1s2x3x3072[-2.626713991165161,1.7886841297149658:A0.001251200348841704],),{})

> ((CT1s3x4x3072[-2.119600296020508,2.195861339569092:A0.0010147980615120072],),{})

< (CT1s2x3x3072[-1.3978718519210815,1.48145592212677:A0.00012072662433284777],)

< (CT1s3x4x3072[-1.5819348096847534,1.6352994441986084:A-0.0023909121722611973],)

<<<

>>> qkv_proj: Linear

> ((CT1s2x3x3072[-3.587798833847046,4.40887975692749:A0.003921798224335286],),{})

> ((CT1s3x4x3072[-4.229971885681152,4.4241862297058105:A0.002278887272579608],),{})

< (CT1s2x3x9216[-4.782982349395752,4.552403450012207:A0.002869967194404429],)

< (CT1s3x4x9216[-4.8670854568481445,4.951562404632568:A0.0034322424564308036],)

<<<

< (CT1s2x3x3072[-1.3978718519210815,1.48145592212677:A0.00012072662433284777],None)

< (CT1s3x4x3072[-1.5819348096847534,1.6352994441986084:A-0.0023909121722611973],None)

<<<

>>> mlp: Phi3MLP

> ((CT1s2x3x3072[-3.743211269378662,3.7259743213653564:A0.00032121702676985505],),{})

> ((CT1s3x4x3072[-4.296080589294434,4.162869453430176:A-0.006826439422444973],),{})

>>> gate_up_proj: Linear

> ((CT1s2x3x3072[-3.743211269378662,3.7259743213653564:A0.00032121702676985505],),{})

> ((CT1s3x4x3072[-4.296080589294434,4.162869453430176:A-0.006826439422444973],),{})

< (CT1s2x3x16384[-4.702624320983887,4.701742649078369:A-0.002079409643679734],)

< (CT1s3x4x16384[-4.724364757537842,4.801564693450928:A-0.005045084881643902],)

<<<

>>> down_proj: Linear

> ((CT1s2x3x8192[-15.17435073852539,9.74260425567627:A0.0023403476103333507],),{})

> ((CT1s3x4x8192[-12.76889705657959,12.707403182983398:A0.0014742590248078378],),{})

< (CT1s2x3x3072[-4.984103679656982,5.78546142578125:A-0.0054593018738336874],)

< (CT1s3x4x3072[-5.760891914367676,5.134554386138916:A-0.008695052235676966],)

<<<

>>> activation_fn: SiLUActivation

> ((CT1s2x3x8192[-4.569545269012451,4.613117218017578:A-0.0034347895305302245],),{})

> ((CT1s3x4x8192[-4.659404754638672,4.801564693450928:A-0.0068326877975550815],),{})

< (CT1s2x3x8192[-0.27846455574035645,4.567800998687744:A0.24383247007692285],)

< (CT1s3x4x8192[-0.27846455574035645,4.76243257522583:A0.24092995681131166],)

<<<

< (CT1s2x3x3072[-4.984103679656982,5.78546142578125:A-0.0054593018738336874],)

< (CT1s3x4x3072[-5.760891914367676,5.134554386138916:A-0.008695052235676966],)

<<<

>>> input_layernorm: Phi3RMSNorm

> ((CT1s2x3x3072[-0.0731288269162178,0.08779632300138474:A8.343658588479592e-05],),{})

> ((CT1s3x4x3072[-0.08399917185306549,0.0895691066980362:A4.718146423578052e-05],),{})

< (CT1s2x3x3072[-3.587798833847046,4.40887975692749:A0.003921798224335286],)

< (CT1s3x4x3072[-4.229971885681152,4.4241862297058105:A0.002278887272579608],)

<<<

>>> post_attention_layernorm: Phi3RMSNorm

> ((CT1s2x3x3072[-1.408341646194458,1.4862360954284668:A0.0002041631934477866],),{})

> ((CT1s3x4x3072[-1.5883933305740356,1.6197993755340576:A-0.0023437306700455135],),{})

< (CT1s2x3x3072[-3.743211269378662,3.7259743213653564:A0.00032121702676985505],)

< (CT1s3x4x3072[-4.296080589294434,4.162869453430176:A-0.006826439422444973],)

<<<

>>> resid_attn_dropout: Dropout

> ((CT1s2x3x3072[-1.3978718519210815,1.48145592212677:A0.00012072662433284777],),{})

> ((CT1s3x4x3072[-1.5819348096847534,1.6352994441986084:A-0.0023909121722611973],),{})

< (CT1s2x3x3072[-1.3978718519210815,1.48145592212677:A0.00012072662433284777],)

< (CT1s3x4x3072[-1.5819348096847534,1.6352994441986084:A-0.0023909121722611973],)

<<<

>>> resid_mlp_dropout: Dropout

> ((CT1s2x3x3072[-4.984103679656982,5.78546142578125:A-0.0054593018738336874],),{})

> ((CT1s3x4x3072[-5.760891914367676,5.134554386138916:A-0.008695052235676966],),{})

< (CT1s2x3x3072[-4.984103679656982,5.78546142578125:A-0.0054593018738336874],)

< (CT1s3x4x3072[-5.760891914367676,5.134554386138916:A-0.008695052235676966],)

<<<

< (CT1s2x3x3072[-5.477933883666992,5.8609442710876465:A-0.005255139024130686],)

< (CT1s3x4x3072[-6.0157599449157715,5.4845099449157715:A-0.0110387832329732],)

<<<

>>> layers[1]: Phi3DecoderLayer

> ((CT1s2x3x3072[-5.477933883666992,5.8609442710876465:A-0.005255139024130686],),dict(attention_mask:CT9s2x1x3x33[False,True:A0.9696969696969697],position_ids:CT7s1x3[30,32:A31.0],past_key_values:DynamicCache(key_cache=#2[CT1s2x32x33x96[-5.402509689331055,5.437028408050537:A0.0015122028784644258],CT1s2x32x30x96[-4.304272651672363,4.806103229522705:A-0.002908728721934681]], value_cache=#2[CT1s2x32x33x96[-4.782982349395752,4.303123950958252:A0.00023037239611686097],CT1s2x32x30x96[-4.307050704956055,4.548398494720459:A-0.0010661965557350723]]),use_cache:bool=True,cache_position:CT7s3[30,32:A31.0],position_embeddings:(CT1s1x3x96[-1.1855769157409668,1.1902371644973755:A0.746652018013669],CT1s1x3x96[-1.1887905597686768,1.190193772315979:A0.1589894221542636])))

> ((CT1s3x4x3072[-6.0157599449157715,5.4845099449157715:A-0.0110387832329732],),dict(attention_mask:CT9s3x1x4x35[False,True:A0.9571428571428572],position_ids:CT7s1x4[31,34:A32.5],past_key_values:DynamicCache(key_cache=#2[CT1s3x32x35x96[-5.129370212554932,5.3524885177612305:A0.0029069027014291685],CT1s3x32x31x96[-4.504631519317627,4.66909646987915:A0.0002402408084757681]], value_cache=#2[CT1s3x32x35x96[-4.552186965942383,4.951562404632568:A0.0010402998548718232],CT1s3x32x31x96[-4.3572845458984375,4.77432107925415:A0.003039900976282685]]),use_cache:bool=True,cache_position:CT7s4[31,34:A32.5],position_embeddings:(CT1s1x4x96[-1.1855769157409668,1.190237045288086:A0.7129333875218435],CT1s1x4x96[-1.1719439029693604,1.1902378797531128:A0.18296290554159592])))

>>> self_attn: Phi3Attention

> ((),dict(hidden_states:CT1s2x3x3072[-3.878816604614258,4.137322902679443:A-0.0037933456840608423],attention_mask:CT9s2x1x3x33[False,True:A0.9696969696969697],position_ids:CT7s1x3[30,32:A31.0],past_key_values:DynamicCache(key_cache=#2[CT1s2x32x33x96[-5.402509689331055,5.437028408050537:A0.0015122028784644258],CT1s2x32x30x96[-4.304272651672363,4.806103229522705:A-0.002908728721934681]], value_cache=#2[CT1s2x32x33x96[-4.782982349395752,4.303123950958252:A0.00023037239611686097],CT1s2x32x30x96[-4.307050704956055,4.548398494720459:A-0.0010661965557350723]]),use_cache:bool=True,cache_position:CT7s3[30,32:A31.0],position_embeddings:(CT1s1x3x96[-1.1855769157409668,1.1902371644973755:A0.746652018013669],CT1s1x3x96[-1.1887905597686768,1.190193772315979:A0.1589894221542636])))

> ((),dict(hidden_states:CT1s3x4x3072[-4.361047267913818,3.8896636962890625:A-0.007821610954112442],attention_mask:CT9s3x1x4x35[False,True:A0.9571428571428572],position_ids:CT7s1x4[31,34:A32.5],past_key_values:DynamicCache(key_cache=#2[CT1s3x32x35x96[-5.129370212554932,5.3524885177612305:A0.0029069027014291685],CT1s3x32x31x96[-4.504631519317627,4.66909646987915:A0.0002402408084757681]], value_cache=#2[CT1s3x32x35x96[-4.552186965942383,4.951562404632568:A0.0010402998548718232],CT1s3x32x31x96[-4.3572845458984375,4.77432107925415:A0.003039900976282685]]),use_cache:bool=True,cache_position:CT7s4[31,34:A32.5],position_embeddings:(CT1s1x4x96[-1.1855769157409668,1.190237045288086:A0.7129333875218435],CT1s1x4x96[-1.1719439029693604,1.1902378797531128:A0.18296290554159592])))

>>> o_proj: Linear

> ((CT1s2x3x3072[-2.080373525619507,1.929775357246399:A-0.005456733117247086],),{})

> ((CT1s3x4x3072[-2.6371731758117676,2.4485175609588623:A0.004138793330354989],),{})

< (CT1s2x3x3072[-1.7166292667388916,1.5776588916778564:A0.0018298219020178091],)

< (CT1s3x4x3072[-1.6159858703613281,1.690280556678772:A-0.0020622446187432944],)

<<<

>>> qkv_proj: Linear

> ((CT1s2x3x3072[-3.878816604614258,4.137322902679443:A-0.0037933456840608423],),{})

> ((CT1s3x4x3072[-4.361047267913818,3.8896636962890625:A-0.007821610954112442],),{})

< (CT1s2x3x9216[-4.666598796844482,4.321796417236328:A-0.00021538856065540636],)

< (CT1s3x4x9216[-4.948755741119385,6.303519248962402:A0.009440800977479832],)

<<<

< (CT1s2x3x3072[-1.7166292667388916,1.5776588916778564:A0.0018298219020178091],None)

< (CT1s3x4x3072[-1.6159858703613281,1.690280556678772:A-0.0020622446187432944],None)

<<<

>>> mlp: Phi3MLP

> ((CT1s2x3x3072[-3.890134572982788,4.615504264831543:A-0.002348823769923156],),{})

> ((CT1s3x4x3072[-4.071815013885498,4.180184364318848:A-0.008973767383221285],),{})

>>> gate_up_proj: Linear

> ((CT1s2x3x3072[-3.890134572982788,4.615504264831543:A-0.002348823769923156],),{})

> ((CT1s3x4x3072[-4.071815013885498,4.180184364318848:A-0.008973767383221285],),{})

< (CT1s2x3x16384[-4.72442102432251,4.901435852050781:A-0.0018572128812882245],)

< (CT1s3x4x16384[-5.16550350189209,5.184349060058594:A0.004185410158105067],)

<<<

>>> down_proj: Linear

> ((CT1s2x3x8192[-9.067243576049805,11.414295196533203:A-0.00034322951576227595],),{})

> ((CT1s3x4x8192[-13.098726272583008,11.7630033493042:A-0.0014596994237370666],),{})

< (CT1s2x3x3072[-4.959231853485107,5.511347770690918:A-0.006164387192560146],)

< (CT1s3x4x3072[-5.060450077056885,5.8758745193481445:A0.0038178380907664985],)

<<<

>>> activation_fn: SiLUActivation

> ((CT1s2x3x8192[-4.72442102432251,4.901435852050781:A-0.0022851155253960087],),{})

> ((CT1s3x4x8192[-4.83467435836792,5.0295305252075195:A0.003626759888094947],),{})

< (CT1s2x3x8192[-0.27846455574035645,4.86525821685791:A0.24454633568750017],)

< (CT1s3x4x8192[-0.27846455574035645,4.996841907501221:A0.24929644321612657],)

<<<

< (CT1s2x3x3072[-4.959231853485107,5.511347770690918:A-0.006164387192560146],)

< (CT1s3x4x3072[-5.060450077056885,5.8758745193481445:A0.0038178380907664985],)

<<<

>>> input_layernorm: Phi3RMSNorm

> ((CT1s2x3x3072[-5.477933883666992,5.8609442710876465:A-0.005255139024130686],),{})

> ((CT1s3x4x3072[-6.0157599449157715,5.4845099449157715:A-0.0110387832329732],),{})

< (CT1s2x3x3072[-3.878816604614258,4.137322902679443:A-0.0037933456840608423],)

< (CT1s3x4x3072[-4.361047267913818,3.8896636962890625:A-0.007821610954112442],)

<<<

>>> post_attention_layernorm: Phi3RMSNorm

> ((CT1s2x3x3072[-5.691557884216309,6.624452114105225:A-0.00342531708661732],),{})

> ((CT1s3x4x3072[-5.816491603851318,5.969421863555908:A-0.01310102805099531],),{})

< (CT1s2x3x3072[-3.890134572982788,4.615504264831543:A-0.002348823769923156],)

< (CT1s3x4x3072[-4.071815013885498,4.180184364318848:A-0.008973767383221285],)

<<<

>>> resid_attn_dropout: Dropout

> ((CT1s2x3x3072[-1.7166292667388916,1.5776588916778564:A0.0018298219020178091],),{})

> ((CT1s3x4x3072[-1.6159858703613281,1.690280556678772:A-0.0020622446187432944],),{})

< (CT1s2x3x3072[-1.7166292667388916,1.5776588916778564:A0.0018298219020178091],)

< (CT1s3x4x3072[-1.6159858703613281,1.690280556678772:A-0.0020622446187432944],)

<<<

>>> resid_mlp_dropout: Dropout

> ((CT1s2x3x3072[-4.959231853485107,5.511347770690918:A-0.006164387192560146],),{})

> ((CT1s3x4x3072[-5.060450077056885,5.8758745193481445:A0.0038178380907664985],),{})

< (CT1s2x3x3072[-4.959231853485107,5.511347770690918:A-0.006164387192560146],)

< (CT1s3x4x3072[-5.060450077056885,5.8758745193481445:A0.0038178380907664985],)

<<<

< (CT1s2x3x3072[-8.325420379638672,8.062938690185547:A-0.009589704636204665],)

< (CT1s3x4x3072[-8.455057144165039,7.9424285888671875:A-0.009283190114729223],)

<<<

>>> norm: Phi3RMSNorm

> ((CT1s2x3x3072[-8.325420379638672,8.062938690185547:A-0.009589704636204665],),{})

> ((CT1s3x4x3072[-8.455057144165039,7.9424285888671875:A-0.009283190114729223],),{})

< (CT1s2x3x3072[-4.1325907707214355,4.0022993087768555:A-0.004913300995191605],)

< (CT1s3x4x3072[-4.452674388885498,4.0953850746154785:A-0.004730929032951494],)

<<<

>>> rotary_emb: Phi3RotaryEmbedding

> ((CT1s2x3x3072[-0.0731288269162178,0.08779632300138474:A8.343658588479592e-05],),dict(position_ids:CT7s1x3[30,32:A31.0]))

> ((CT1s3x4x3072[-0.08399917185306549,0.0895691066980362:A4.718146423578052e-05],),dict(position_ids:CT7s1x4[31,34:A32.5]))

< (CT1s1x3x96[-1.1855769157409668,1.1902371644973755:A0.746652018013669],CT1s1x3x96[-1.1887905597686768,1.190193772315979:A0.1589894221542636])

< (CT1s1x4x96[-1.1855769157409668,1.190237045288086:A0.7129333875218435],CT1s1x4x96[-1.1719439029693604,1.1902378797531128:A0.18296290554159592])

<<<

< (BaseModelOutputWithPast(last_hidden_state:CT1s2x3x3072[-4.1325907707214355,4.0022993087768555:A-0.004913300995191605],past_key_values:DynamicCache(key_cache=#2[CT1s2x32x33x96[-5.402509689331055,5.437028408050537:A0.0015122028784644258],CT1s2x32x33x96[-5.697273254394531,5.64616584777832:A-0.002411452736933751]], value_cache=#2[CT1s2x32x33x96[-4.782982349395752,4.303123950958252:A0.00023037239611686097],CT1s2x32x33x96[-4.651107311248779,4.548398494720459:A-0.0017475117509606708]])),)

< (BaseModelOutputWithPast(last_hidden_state:CT1s3x4x3072[-4.452674388885498,4.0953850746154785:A-0.004730929032951494],past_key_values:DynamicCache(key_cache=#2[CT1s3x32x35x96[-5.129370212554932,5.3524885177612305:A0.0029069027014291685],CT1s3x32x35x96[-5.6584882736206055,5.205029487609863:A0.0006168220610235804]], value_cache=#2[CT1s3x32x35x96[-4.552186965942383,4.951562404632568:A0.0010402998548718232],CT1s3x32x35x96[-4.444775581359863,6.303519248962402:A0.003496529404818466]])),)

<<<

>>> lm_head: Linear

> ((CT1s2x3x3072[-4.1325907707214355,4.0022993087768555:A-0.004913300995191605],),{})

> ((CT1s3x4x3072[-4.452674388885498,4.0953850746154785:A-0.004730929032951494],),{})

< (CT1s2x3x32064[-4.986209869384766,4.816640853881836:A-0.004613860504347395],)

< (CT1s3x4x32064[-5.025021553039551,4.8513007164001465:A-0.001983840996172239],)

<<<

< (CausalLMOutputWithPast(logits:CT1s2x3x32064[-4.986209869384766,4.816640853881836:A-0.004613860504347395],past_key_values:DynamicCache(key_cache=#2[CT1s2x32x33x96[-5.402509689331055,5.437028408050537:A0.0015122028784644258],CT1s2x32x33x96[-5.697273254394531,5.64616584777832:A-0.002411452736933751]], value_cache=#2[CT1s2x32x33x96[-4.782982349395752,4.303123950958252:A0.00023037239611686097],CT1s2x32x33x96[-4.651107311248779,4.548398494720459:A-0.0017475117509606708]])),)

< (CausalLMOutputWithPast(logits:CT1s3x4x32064[-5.025021553039551,4.8513007164001465:A-0.001983840996172239],past_key_values:DynamicCache(key_cache=#2[CT1s3x32x35x96[-5.129370212554932,5.3524885177612305:A0.0029069027014291685],CT1s3x32x35x96[-5.6584882736206055,5.205029487609863:A0.0006168220610235804]], value_cache=#2[CT1s3x32x35x96[-4.552186965942383,4.951562404632568:A0.0010402998548718232],CT1s3x32x35x96[-4.444775581359863,6.303519248962402:A0.003496529404818466]])),)

<<<

[_untrace_forward_execution] M:__main__-Phi3ForCausalLM

[_untrace_forward_execution] .. M:model-Phi3Model

[_untrace_forward_execution] .... M:embed_tokens-Embedding

[_untrace_forward_execution] .... M:layers[0]-Phi3DecoderLayer

[_untrace_forward_execution] ...... M:self_attn-Phi3Attention

[_untrace_forward_execution] ........ M:o_proj-Linear

[_untrace_forward_execution] ........ M:qkv_proj-Linear

[_untrace_forward_execution] ...... M:mlp-Phi3MLP

[_untrace_forward_execution] ........ M:gate_up_proj-Linear

[_untrace_forward_execution] ........ M:down_proj-Linear

[_untrace_forward_execution] ........ M:activation_fn-SiLUActivation

[_untrace_forward_execution] ...... M:input_layernorm-Phi3RMSNorm

[_untrace_forward_execution] ...... M:post_attention_layernorm-Phi3RMSNorm

[_untrace_forward_execution] ...... M:resid_attn_dropout-Dropout

[_untrace_forward_execution] ...... M:resid_mlp_dropout-Dropout

[_untrace_forward_execution] .... M:layers[1]-Phi3DecoderLayer

[_untrace_forward_execution] ...... M:self_attn-Phi3Attention

[_untrace_forward_execution] ........ M:o_proj-Linear

[_untrace_forward_execution] ........ M:qkv_proj-Linear

[_untrace_forward_execution] ...... M:mlp-Phi3MLP

[_untrace_forward_execution] ........ M:gate_up_proj-Linear

[_untrace_forward_execution] ........ M:down_proj-Linear

[_untrace_forward_execution] ........ M:activation_fn-SiLUActivation

[_untrace_forward_execution] ...... M:input_layernorm-Phi3RMSNorm

[_untrace_forward_execution] ...... M:post_attention_layernorm-Phi3RMSNorm

[_untrace_forward_execution] ...... M:resid_attn_dropout-Dropout

[_untrace_forward_execution] ...... M:resid_mlp_dropout-Dropout

[_untrace_forward_execution] .... M:norm-Phi3RMSNorm

[_untrace_forward_execution] .... M:rotary_emb-Phi3RotaryEmbedding

[_untrace_forward_execution] .. M:lm_head-Linear

Now that every input and output of the submodules is kept in memory, we can guess the dynamic shapes for each of them. The dynamic shapes for the whole model are:

dynamic_shapes = diag.guess_dynamic_shapes()

print("The dynamic shapes are:")

pprint.pprint(dynamic_shapes)

The dynamic shapes are:

((),

{'attention_mask': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},

'input_ids': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},

'past_key_values': [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)},

{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)},

{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)},

{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}]})
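A minimal pure-Python sketch can illustrate how two sets of inputs with different shapes let us guess dynamic shapes. It is not the implementation of `guess_dynamic_shapes`. The function `guess_dynamic_axes` is hypothetical and only compares the shapes observed for one tensor across calls: every axis whose size changes is marked dynamic. The shapes below are read off, or inferred from, the traces above. For example, a cache of length 33 after three new tokens implies a cache of length 30 as input.

```python
from typing import Dict, Sequence, Tuple


def guess_dynamic_axes(shapes: Sequence[Tuple[int, ...]]) -> Dict[int, str]:
    """Hypothetical helper: marks as dynamic every axis whose size differs
    across the shapes observed for the same tensor."""
    if len(shapes) < 2:
        raise ValueError("at least two observed shapes are needed")
    rank = len(shapes[0])
    if any(len(shape) != rank for shape in shapes):
        raise ValueError(f"inconsistent ranks: {shapes}")
    return {
        axis: "DYNAMIC"
        for axis in range(rank)
        if len({shape[axis] for shape in shapes}) > 1
    }


# Shapes observed for the two sets of inputs (batch size 2, then 3).
observed = {
    "input_ids": [(2, 3), (3, 4)],
    "past_key_values[0]": [(2, 32, 30, 96), (3, 32, 31, 96)],
}
for name, shapes in observed.items():
    print(name, guess_dynamic_axes(shapes))
```

This agrees with the result printed above for `input_ids` and the cache tensors. It also shows why the inputs must differ on every axis that is meant to be dynamic: an axis with the same size in both sets stays static.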

Here are the dynamic shapes for every traced submodule.

print(

diag.pretty_text(

with_dynamic_shape=True,

with_shape=False,

with_min_max=False,

with_device=False,

with_inputs=False,

).replace("<_DimHint.DYNAMIC: 3>", "DYN")

)

>>> __main__: Phi3ForCausalLM

DS=((), {'attention_mask': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)}, 'input_ids': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)}, 'past_key_values': [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}]})

>>> model: Phi3Model

DS=((), {'attention_mask': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)}, 'cache_position': None, 'input_ids': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)}, 'inputs_embeds': None, 'past_key_values': [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}], 'position_ids': None, 'use_cache': None})

>>> embed_tokens: Embedding: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> layers[0]: Phi3DecoderLayer

DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {'attention_mask': {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC), 3: DimHint(DYNAMIC)}, 'cache_position': {0: DimHint(DYNAMIC)}, 'past_key_values': [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}], 'position_embeddings': ({1: DimHint(DYNAMIC)}, {1: DimHint(DYNAMIC)}), 'position_ids': {1: DimHint(DYNAMIC)}, 'use_cache': None})

>>> self_attn: Phi3Attention

DS=((), {'attention_mask': {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC), 3: DimHint(DYNAMIC)}, 'cache_position': {0: DimHint(DYNAMIC)}, 'hidden_states': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)}, 'past_key_values': [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}], 'position_embeddings': ({1: DimHint(DYNAMIC)}, {1: DimHint(DYNAMIC)}), 'position_ids': {1: DimHint(DYNAMIC)}, 'use_cache': None})

>>> o_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> qkv_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

<<<

>>> mlp: Phi3MLP

DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {})

>>> gate_up_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> down_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> activation_fn: SiLUActivation: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

<<<

>>> input_layernorm: Phi3RMSNorm: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> post_attention_layernorm: Phi3RMSNorm: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> resid_attn_dropout: Dropout: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> resid_mlp_dropout: Dropout: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

<<<

>>> layers[1]: Phi3DecoderLayer

DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {'attention_mask': {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC), 3: DimHint(DYNAMIC)}, 'cache_position': {0: DimHint(DYNAMIC)}, 'past_key_values': [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}], 'position_embeddings': ({1: DimHint(DYNAMIC)}, {1: DimHint(DYNAMIC)}), 'position_ids': {1: DimHint(DYNAMIC)}, 'use_cache': None})

>>> self_attn: Phi3Attention

DS=((), {'attention_mask': {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC), 3: DimHint(DYNAMIC)}, 'cache_position': {0: DimHint(DYNAMIC)}, 'hidden_states': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)}, 'past_key_values': [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}], 'position_embeddings': ({1: DimHint(DYNAMIC)}, {1: DimHint(DYNAMIC)}), 'position_ids': {1: DimHint(DYNAMIC)}, 'use_cache': None})

>>> o_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> qkv_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

<<<

>>> mlp: Phi3MLP

DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {})

>>> gate_up_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> down_proj: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> activation_fn: SiLUActivation: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

<<<

>>> input_layernorm: Phi3RMSNorm: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> post_attention_layernorm: Phi3RMSNorm: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> resid_attn_dropout: Dropout: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> resid_mlp_dropout: Dropout: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

<<<

>>> norm: Phi3RMSNorm: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

>>> rotary_emb: Phi3RotaryEmbedding: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {'position_ids': {1: DimHint(DYNAMIC)}}) <<<

<<<

>>> lm_head: Linear: DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {}) <<<

<<<
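In this run the hints print as `DimHint(DYNAMIC)`, so the `.replace("<_DimHint.DYNAMIC: 3>", "DYN")` call above leaves the text unchanged. The printed form of a hint probably depends on the PyTorch version, but that is an assumption. The small sketch below uses a hypothetical helper, `shorten_dim_hints`, that shortens both spellings with a regular expression.

```python
import re

# The spelling targeted by the code above and the one printed in this run.
_DYNAMIC_HINT = re.compile(r"<_DimHint\.DYNAMIC: \d+>|DimHint\(DYNAMIC\)")


def shorten_dim_hints(text: str) -> str:
    """Hypothetical helper: replaces every dynamic dimension hint by 'DYN'."""
    return _DYNAMIC_HINT.sub("DYN", text)


print(shorten_dim_hints("DS=(({0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)},), {})"))
```

It would be applied to the string returned by `diag.pretty_text(...)` instead of the `.replace` call.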

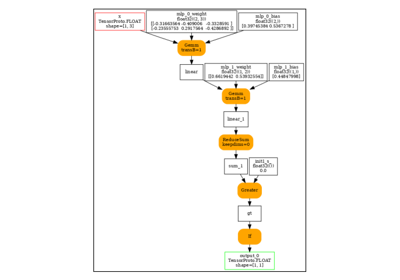

Evaluate the export

In many cases, the export (to torch.fx.Graph or to ONNX) does not work on the first try. We need a way to measure how much of the model can be exported. That measure also helps estimate how much code must be rewritten or patched to make the model exportable. The verbosity can be increased to show the dynamic shapes and the discrepancies between the results.
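The sketch below is one crude way to put a number on "how much of the model can be exported". It is plain Python and rests on stated assumptions. The `Module` tree and `export_coverage` are hypothetical. Every module counts as one unit of code, meaning its own `forward` minus its children. `can_export` stands in for a real export attempt. Modules are visited top-down. A module that exports covers its whole subtree. A module that fails loses its own unit and is split into its children, which mirrors the piece-by-piece idea.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Module:
    """Hypothetical stand-in for a traced submodule: a name and children."""

    name: str
    children: List["Module"] = field(default_factory=list)

    def size(self) -> int:
        # One unit for the module's own code plus the units of its children.
        return 1 + sum(child.size() for child in self.children)


def export_coverage(
    root: Module, can_export: Callable[[str], bool]
) -> Tuple[List[str], List[str], float]:
    """Returns the modules exported as a whole, the modules that failed,
    and the fraction of units covered by an exported module."""
    exported: List[str] = []
    failing: List[str] = []

    def visit(module: Module) -> int:
        if can_export(module.name):
            exported.append(module.name)
            return module.size()
        # The module's own code is lost, its children are tried one by one.
        failing.append(module.name)
        return sum(visit(child) for child in module.children)

    covered = visit(root)
    return exported, failing, covered / root.size()


# Simplified decoder layer (dropouts and the children of mlp left out) with the
# statuses reported by try_export for layers[0] in this example.
layer = Module(
    "layers[0]",
    [
        Module("self_attn", [Module("o_proj"), Module("qkv_proj")]),
        Module("mlp"),
        Module("input_layernorm"),
        Module("post_attention_layernorm"),
    ],
)
failed = {"layers[0]", "self_attn"}
exported, failing, ratio = export_coverage(layer, lambda name: name not in failed)
print(exported)
print(failing)
print(f"{ratio:.2f}")
```

Weighting every module equally is the weakest assumption here. A `Linear` layer and the attention code in `self_attn.forward` count the same.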

Let’s display the module and its submodules first.

print(

diag.pretty_text(

with_dynamic_shape=False,

with_shape=False,

with_min_max=False,

with_device=False,

with_inputs=False,

)

)

>>> __main__: Phi3ForCausalLM

>>> model: Phi3Model

>>> embed_tokens: Embedding <<<

>>> layers[0]: Phi3DecoderLayer

>>> self_attn: Phi3Attention

>>> o_proj: Linear <<<

>>> qkv_proj: Linear <<<

<<<

>>> mlp: Phi3MLP

>>> gate_up_proj: Linear <<<

>>> down_proj: Linear <<<

>>> activation_fn: SiLUActivation <<<

<<<

>>> input_layernorm: Phi3RMSNorm <<<

>>> post_attention_layernorm: Phi3RMSNorm <<<

>>> resid_attn_dropout: Dropout <<<

>>> resid_mlp_dropout: Dropout <<<

<<<

>>> layers[1]: Phi3DecoderLayer

>>> self_attn: Phi3Attention

>>> o_proj: Linear <<<

>>> qkv_proj: Linear <<<

<<<

>>> mlp: Phi3MLP

>>> gate_up_proj: Linear <<<

>>> down_proj: Linear <<<

>>> activation_fn: SiLUActivation <<<

<<<

>>> input_layernorm: Phi3RMSNorm <<<

>>> post_attention_layernorm: Phi3RMSNorm <<<

>>> resid_attn_dropout: Dropout <<<

>>> resid_mlp_dropout: Dropout <<<

<<<

>>> norm: Phi3RMSNorm <<<

>>> rotary_emb: Phi3RotaryEmbedding <<<

<<<

>>> lm_head: Linear <<<

<<<

Then we try to export every submodule to find the ones that make the whole model fail. We can pickle the failing model and restore it later to speed up the refactoring needed to make it work.
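With `verbose=1`, `try_export` prints one status line per submodule, for example `[try_export-FX] .... M:embed_tokens-Embedding --- OK:`. The number of dots, two per level, gives the depth. The minimal sketch below assumes only that line format. It parses such a log and keeps the failing modules whose traced descendants all pass, since those are the first candidates to fix. Both helpers are hypothetical and not part of the library. The failure reasons are shortened to `...` in the sample.

```python
import re
from typing import List, Tuple

# "[try_export-FX] <dots> M:<name>-<class> --- OK|FAIL...", no dots at depth 0.
STATUS_LINE = re.compile(r"^\[try_export-\w+\] (?:(\.+) )?M:(.+?)-(\w+) --- (OK|FAIL)")

Row = Tuple[int, str, str, str]  # depth, module name, class name, status


def parse_try_export_log(text: str) -> List[Row]:
    """Hypothetical helper: extracts one row per status line, skipping the rest."""
    rows: List[Row] = []
    for line in text.splitlines():
        match = STATUS_LINE.match(line)
        if match:
            dots, name, cls, status = match.groups()
            rows.append((len(dots or "") // 2, name, cls, status))
    return rows


def deepest_failures(rows: List[Row]) -> List[str]:
    """Failing modules whose descendants (the following deeper rows) all pass."""
    result = []
    for i, (depth, name, _, status) in enumerate(rows):
        if status != "FAIL":
            continue
        descendants = []
        for row in rows[i + 1 :]:
            if row[0] <= depth:
                break
            descendants.append(row)
        if all(row[3] == "OK" for row in descendants):
            result.append(name)
    return result


log = """\
[try_export-FX] M:__main__-Phi3ForCausalLM --- FAIL, step=EXPORT, reason=...
[try_export-FX] .. M:model-Phi3Model --- FAIL, step=EXPORT, reason=...
[try_export-FX] .... M:embed_tokens-Embedding --- OK:
[try_export-FX] .... M:layers[0]-Phi3DecoderLayer --- FAIL, step=EXPORT, reason=...
[try_export-FX] ...... M:self_attn-Phi3Attention --- FAIL, step=EXPORT, reason=...
[try_export-FX] ........ M:o_proj-Linear --- OK:
[try_export-FX] ........ M:qkv_proj-Linear --- OK:
[try_export-FX] ...... M:mlp-Phi3MLP --- OK:
"""
print(deepest_failures(parse_try_export_log(log)))
```

In this example every failure has the same reason: the dynamic shapes given for `past_key_values` cannot be associated with a `DynamicCache`. So `self_attn` comes out as the deepest failure because it is the deepest module that receives the cache, not necessarily because the attention code itself cannot be exported.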

print("----------------------")

ep = diag.try_export(

exporter="fx",

use_dynamic_shapes=True,

exporter_kwargs=dict(strict=False),

verbose=1,

)

----------------------

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] M:__main__-Phi3ForCausalLM --- FAIL, step=EXPORT, reason=Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None) --- For more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation --- --- The error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.['Traceback (most recent call last):\n', ' File "~/github/experimental-experiment/experimental_experiment/torch_interpreter/piece_by_piece.py", line 1573, in _try_export_no_bypass_export\n ep = torch_export(\n ^^^^^^^^^^^^^\n', ' File "~/github/experimental-experiment/experimental_experiment/export_helpers.py", line 164, in torch_export\n return torch.export.export(\n ^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 205, in export\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 171, in export\n return _export(\n ^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_utils_internal.py", line 96, in wrapper_function\n 
return function(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2508, in _export\n ep = _export_for_training(\n ^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2296, in _export_for_training\n export_artifact = export_func(\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2178, in _non_strict_export\n ) = make_fake_inputs(\n ^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_export/non_strict_utils.py", line 419, in make_fake_inputs\n _check_dynamic_shapes(combined_args, dynamic_shapes)\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1079, in _check_dynamic_shapes\n _tree_map_with_path(check_shape, combined_args, dynamic_shapes, tree_name="inputs")\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 639, in _tree_map_with_path\n return tree_map_with_path(f, tree, *dynamic_shapes, is_leaf=is_leaf)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in tree_map_with_path\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 1280, in unflatten\n leaves = 
list(leaves)\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in <genexpr>\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 636, in f\n return func(path, t, *dynamic_shapes)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1072, in check_shape\n raise UserError(\n', "torch._dynamo.exc.UserError: Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None)\nFor more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation\n\nThe error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.\n"]

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] .. M:model-Phi3Model --- FAIL, step=EXPORT, reason=Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None) --- For more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation --- --- The error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.['Traceback (most recent call last):\n', ' File "~/github/experimental-experiment/experimental_experiment/torch_interpreter/piece_by_piece.py", line 1573, in _try_export_no_bypass_export\n ep = torch_export(\n ^^^^^^^^^^^^^\n', ' File "~/github/experimental-experiment/experimental_experiment/export_helpers.py", line 164, in torch_export\n return torch.export.export(\n ^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 205, in export\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 171, in export\n return _export(\n ^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_utils_internal.py", line 96, in wrapper_function\n 
return function(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2508, in _export\n ep = _export_for_training(\n ^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2296, in _export_for_training\n export_artifact = export_func(\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2178, in _non_strict_export\n ) = make_fake_inputs(\n ^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_export/non_strict_utils.py", line 419, in make_fake_inputs\n _check_dynamic_shapes(combined_args, dynamic_shapes)\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1079, in _check_dynamic_shapes\n _tree_map_with_path(check_shape, combined_args, dynamic_shapes, tree_name="inputs")\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 639, in _tree_map_with_path\n return tree_map_with_path(f, tree, *dynamic_shapes, is_leaf=is_leaf)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in tree_map_with_path\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 1280, in unflatten\n leaves = 
list(leaves)\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in <genexpr>\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 636, in f\n return func(path, t, *dynamic_shapes)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1072, in check_shape\n raise UserError(\n', "torch._dynamo.exc.UserError: Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None)\nFor more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation\n\nThe error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.\n"]

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] .... M:embed_tokens-Embedding --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] .... M:layers[0]-Phi3DecoderLayer --- FAIL, step=EXPORT, reason=Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None) --- For more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation --- --- The error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.['Traceback (most recent call last):\n', ' File "~/github/experimental-experiment/experimental_experiment/torch_interpreter/piece_by_piece.py", line 1573, in _try_export_no_bypass_export\n ep = torch_export(\n ^^^^^^^^^^^^^\n', ' File "~/github/experimental-experiment/experimental_experiment/export_helpers.py", line 164, in torch_export\n return torch.export.export(\n ^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 205, in export\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 171, in export\n return _export(\n ^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_utils_internal.py", line 96, in 
wrapper_function\n return function(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2508, in _export\n ep = _export_for_training(\n ^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2296, in _export_for_training\n export_artifact = export_func(\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2178, in _non_strict_export\n ) = make_fake_inputs(\n ^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_export/non_strict_utils.py", line 419, in make_fake_inputs\n _check_dynamic_shapes(combined_args, dynamic_shapes)\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1079, in _check_dynamic_shapes\n _tree_map_with_path(check_shape, combined_args, dynamic_shapes, tree_name="inputs")\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 639, in _tree_map_with_path\n return tree_map_with_path(f, tree, *dynamic_shapes, is_leaf=is_leaf)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in tree_map_with_path\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 1280, in unflatten\n 
leaves = list(leaves)\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in <genexpr>\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 636, in f\n return func(path, t, *dynamic_shapes)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1072, in check_shape\n raise UserError(\n', "torch._dynamo.exc.UserError: Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None)\nFor more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation\n\nThe error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.\n"]

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ...... M:self_attn-Phi3Attention --- FAIL, step=EXPORT, reason=Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None) --- For more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation --- --- The error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.['Traceback (most recent call last):\n', ' File "~/github/experimental-experiment/experimental_experiment/torch_interpreter/piece_by_piece.py", line 1573, in _try_export_no_bypass_export\n ep = torch_export(\n ^^^^^^^^^^^^^\n', ' File "~/github/experimental-experiment/experimental_experiment/export_helpers.py", line 164, in torch_export\n return torch.export.export(\n ^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 205, in export\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 171, in export\n return _export(\n ^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_utils_internal.py", line 96, in wrapper_function\n return function(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2508, in _export\n ep = _export_for_training(\n ^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2296, in _export_for_training\n export_artifact = export_func(\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2178, in _non_strict_export\n ) = make_fake_inputs(\n ^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_export/non_strict_utils.py", line 419, in make_fake_inputs\n _check_dynamic_shapes(combined_args, dynamic_shapes)\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1079, in _check_dynamic_shapes\n _tree_map_with_path(check_shape, combined_args, dynamic_shapes, tree_name="inputs")\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 639, in _tree_map_with_path\n return tree_map_with_path(f, tree, *dynamic_shapes, is_leaf=is_leaf)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in tree_map_with_path\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 1280, in unflatten\n leaves = list(leaves)\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in <genexpr>\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 636, in f\n return func(path, t, *dynamic_shapes)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1072, in check_shape\n raise UserError(\n', "torch._dynamo.exc.UserError: Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None)\nFor more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation\n\nThe error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.\n"]

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ........ M:o_proj-Linear --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ........ M:qkv_proj-Linear --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ...... M:mlp-Phi3MLP --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ...... M:input_layernorm-Phi3RMSNorm --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ...... M:post_attention_layernorm-Phi3RMSNorm --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ...... M:resid_attn_dropout-Dropout --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] ...... M:resid_mlp_dropout-Dropout --- OK:

[torch_export] export starts with backed_size_oblivious=False

[try_export-FX] .... M:layers[1]-Phi3DecoderLayer --- FAIL, step=EXPORT, reason=Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None) --- For more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation --- --- The error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.['Traceback (most recent call last):\n', ' File "~/github/experimental-experiment/experimental_experiment/torch_interpreter/piece_by_piece.py", line 1573, in _try_export_no_bypass_export\n ep = torch_export(\n ^^^^^^^^^^^^^\n', ' File "~/github/experimental-experiment/experimental_experiment/export_helpers.py", line 164, in torch_export\n return torch.export.export(\n ^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 205, in export\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/__init__.py", line 171, in export\n return _export(\n ^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_utils_internal.py", line 96, in wrapper_function\n return function(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2508, in _export\n ep = _export_for_training(\n ^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1343, in wrapper\n raise e\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 1309, in wrapper\n ep = fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/exported_program.py", line 124, in wrapper\n return fn(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2296, in _export_for_training\n export_artifact = export_func(\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/_trace.py", line 2178, in _non_strict_export\n ) = make_fake_inputs(\n ^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/_export/non_strict_utils.py", line 419, in make_fake_inputs\n _check_dynamic_shapes(combined_args, dynamic_shapes)\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1079, in _check_dynamic_shapes\n _tree_map_with_path(check_shape, combined_args, dynamic_shapes, tree_name="inputs")\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 639, in _tree_map_with_path\n return tree_map_with_path(f, tree, *dynamic_shapes, is_leaf=is_leaf)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in tree_map_with_path\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 1280, in unflatten\n leaves = list(leaves)\n ^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/utils/_pytree.py", line 2207, in <genexpr>\n return treespec.unflatten(func(*xs) for xs in zip(*all_keypath_leaves, strict=True))\n ^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 636, in f\n return func(path, t, *dynamic_shapes)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n', ' File "~/vv/this312/lib/python3.12/site-packages/torch/export/dynamic_shapes.py", line 1072, in check_shape\n raise UserError(\n', "torch._dynamo.exc.UserError: Cannot associate shape [{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}] specified at `dynamic_shapes['past_key_values']` to non-tensor type <class 'transformers.cache_utils.DynamicCache'> at `inputs['past_key_values']` (expected None)\nFor more information about this error, see: https://pytorch.org/docs/main/generated/exportdb/index.html#dynamic-shapes-validation\n\nThe error above occurred when calling torch.export.export. If you would like to view some more information about this error, and get a list of all other errors that may occur in your export call, you can replace your `export()` call with `draft_export()`.\n"]

[torch_export] export starts with backed_size_oblivious=False