-m onnx_diagnostic partition … move layer nodes in local functions

The command line leverages the metadata added by the exporter. Every node is tagged with information indicating which part of the model it comes from. In particular, the key namespace stores a value such as:

transformers.models.llama.modeling_llama.LlamaForCausalLM/model:

transformers.models.llama.modeling_llama.LlamaModel/model.layers.0:

transformers.models.llama.modeling_llama.LlamaDecoderLayer/model.layers.0.self_attn:

transformers.models.llama.modeling_llama.LlamaAttention/unsqueeze_15:

aten.unsqueeze.default
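The value above reads as a chain of scopes, from the outermost module down to the operator. A minimal sketch of how such a value could be split, assuming the scopes are separated by colons as displayed above (the helper `split_namespace` is hypothetical and not part of onnx_diagnostic):

```python
def split_namespace(namespace: str) -> list[str]:
    """Split a namespace value into its successive scopes.

    Assumption: scopes are separated by ':' as in the value shown above.
    """
    return [piece.strip() for piece in namespace.split(":") if piece.strip()]


# The value shown above, joined into a single string.
namespace = (
    "transformers.models.llama.modeling_llama.LlamaForCausalLM/model: "
    "transformers.models.llama.modeling_llama.LlamaModel/model.layers.0: "
    "transformers.models.llama.modeling_llama.LlamaDecoderLayer/model.layers.0.self_attn: "
    "transformers.models.llama.modeling_llama.LlamaAttention/unsqueeze_15: "
    "aten.unsqueeze.default"
)

scopes = split_namespace(namespace)
for depth, scope in enumerate(scopes):
    # Indent each scope by its depth to make the nesting visible.
    print("  " * depth + scope)
```

The last scope is the operator itself; the scopes before it describe the modules the node is nested in.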

Description

See onnx_diagnostic.helpers.onnx_helper.make_model_with_local_functions().

usage: partition [-h] [-r REGEX] [-p META_PREFIX] [-v VERBOSE] input output

Partitions an onnx model by moving nodes into local functions.

Exporters may add metadata to the onnx nodes telling which part

of the model it comes from (namespace, source, ...).

These nodes are moved into local functions.

positional arguments:

input input model

output output model, an expression like '+.part'

inserts '.part' just before the extension

options:

-h, --help show this help message and exit

-r REGEX, --regex REGEX

merges all nodes sharing the same value in node metadata,

these values must match the regular expression specified by

this parameter, the default value matches what transformers

usually uses to define a layer

-p META_PREFIX, --meta-prefix META_PREFIX

allowed prefixes for keys in the metadata

-v VERBOSE, --verbose VERBOSE

verbosity

The regular expression may match the following values,

'model.layers.0.forward', 'model.layers.1.forward', ...

A local function will be created for each distinct layer.

Example:

python -m onnx_diagnostic partition \

model.onnx +.part -v 1 -r "model.layers.0.s.*"
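The help text says that an output expression like '+.part' inserts '.part' just before the extension of the input file name. The following sketch illustrates that rule under the assumption that a leading '+' is what triggers it; the function `resolve_output` is hypothetical and may differ from what the command actually does.

```python
import os


def resolve_output(input_path: str, output: str) -> str:
    """Return the output file name for a given input and output expression.

    Assumption: an expression starting with '+' is a suffix inserted
    just before the extension of the input file name; any other value
    is used as the output file name unchanged.
    """
    if output.startswith("+"):
        root, ext = os.path.splitext(input_path)
        return f"{root}{output[1:]}{ext}"
    return output


print(resolve_output("model.onnx", "+.part"))
print(resolve_output("model.onnx", "partition.onnx"))
```

With this rule, the example command above would write its result next to the input model, with the same extension.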

Example

python -m onnx_diagnostic partition arnir0_Tiny-LLM-onnx-dynamo-ir-f16-cuda-op18.onnx partition.onnx -r ".*[.]layers[.][0-9]+$" -v 1

This produces the following output:

-- load 'arnir0_Tiny-LLM-onnx-dynamo-ir-f16-cuda-op18.onnx'

-- partition

[make_model_with_local_functions] matched 1 partitions

[make_model_with_local_functions] move 89 nodes in partition 'transformers_models_llama_modeling_llama_LlamaModel/model_layers_0'

-- save into 'partition.onnx'

-- done
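The log above reports a single partition whose name looks like one scope of the namespace value, with dots replaced by underscores. The sketch below illustrates how a regular expression could select such a scope and group nodes by it. It is only an illustration: the node names, the function `group_by_partition` and the matching strategy (`re.match` on each colon-separated scope) are assumptions, not the implementation of make_model_with_local_functions.

```python
import re
from collections import defaultdict


def group_by_partition(namespaces: dict[str, str], regex: str) -> dict[str, list[str]]:
    """Group node names by the first scope of their namespace matching regex.

    Assumption: scopes are separated by ':' and each one is tested
    independently with re.match.
    """
    pattern = re.compile(regex)
    groups = defaultdict(list)
    for node_name, namespace in namespaces.items():
        for scope in namespace.split(":"):
            scope = scope.strip()
            if pattern.match(scope):
                groups[scope].append(node_name)
                break  # keep only the outermost matching scope
    return dict(groups)


prefix = "transformers.models.llama.modeling_llama"
# Hypothetical node names mapped to namespace values.
namespaces = {
    "node_unsqueeze_15": (
        f"{prefix}.LlamaForCausalLM/model: "
        f"{prefix}.LlamaModel/model.layers.0: "
        f"{prefix}.LlamaDecoderLayer/model.layers.0.self_attn: "
        f"{prefix}.LlamaAttention/unsqueeze_15: "
        "aten.unsqueeze.default"
    ),
    "node_embedding": (
        f"{prefix}.LlamaForCausalLM/model: "
        f"{prefix}.LlamaModel/model.embed_tokens: "
        "torch.nn.modules.sparse.Embedding/embedding: "
        "aten.embedding.default"
    ),
}

groups = group_by_partition(namespaces, r".*[.]layers[.][0-9]+$")
for scope, nodes in groups.items():
    # Mirror the naming seen in the log: dots become underscores.
    print(scope.replace(".", "_"), nodes)
```

Only the node nested under model.layers.0 is selected; the embedding node matches no scope and would stay in the main graph.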

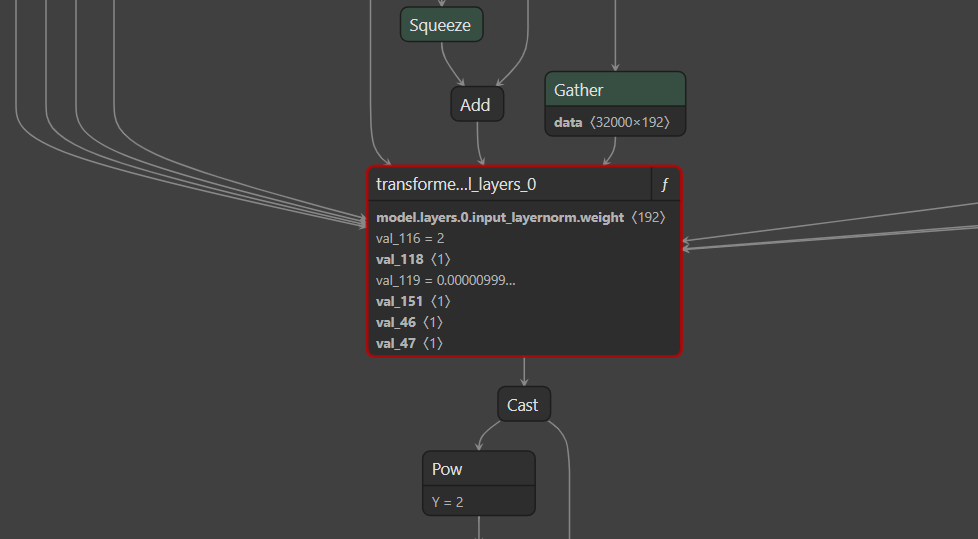

The partitioned model includes the following node: