onnx_diagnostic.helpers.torch_helper

- onnx_diagnostic.helpers.torch_helper.closest_factor_pair(n: int)

Tries to find a, b such that n == a * b.
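One way to picture this is to search downward from the integer square root for the first divisor, which gives the most balanced pair of factors. The sketch below is only an illustration: the name closest_factors is hypothetical, and the implementation and return order in onnx_diagnostic may differ.

```python
import math
from typing import Tuple


def closest_factors(n: int) -> Tuple[int, int]:
    """Returns (a, b) with a <= b and a * b == n, a and b as close as possible.

    Hypothetical helper written for illustration only.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    # Start at floor(sqrt(n)) and go down: the first divisor found
    # gives the pair with the smallest gap between both factors.
    for a in range(math.isqrt(n), 0, -1):
        if n % a == 0:
            return a, n // a
    raise AssertionError("unreachable, 1 always divides n")


print(closest_factors(12))
print(closest_factors(13))
```

A prime number only has the trivial pair (1, n), which is why the loop always terminates at 1.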

- onnx_diagnostic.helpers.torch_helper.dummy_llm(cls_name: str | None = None, dynamic_shapes: bool = False) -> Tuple[Module, Tuple[Tensor, ...]] | Tuple[Module, Tuple[Tensor, ...], Any]

Creates a dummy LLM for test purposes.

- Parameters:

cls_name – None for the whole model, or the name of a piece of it

dynamic_shapes – if True, returns the dynamic shapes as well

<<<

from onnx_diagnostic.helpers.torch_helper import dummy_llm

print(dummy_llm())

>>>

(LLM(
  (embedding): Embedding(
    (embedding): Embedding(1024, 16)
    (pe): Embedding(1024, 16)
  )
  (decoder): DecoderLayer(
    (attention): MultiAttentionBlock(
      (attention): ModuleList(
        (0-1): 2 x AttentionBlock(
          (query): Linear(in_features=16, out_features=16, bias=False)
          (key): Linear(in_features=16, out_features=16, bias=False)
          (value): Linear(in_features=16, out_features=16, bias=False)
        )
      )
      (linear): Linear(in_features=32, out_features=16, bias=True)
    )
    (feed_forward): FeedForward(
      (linear_1): Linear(in_features=16, out_features=128, bias=True)
      (relu): ReLU()
      (linear_2): Linear(in_features=128, out_features=16, bias=True)
    )
    (norm_1): LayerNorm((16,), eps=1e-05, elementwise_affine=True)
    (norm_2): LayerNorm((16,), eps=1e-05, elementwise_affine=True)
  )
), (tensor([[176, 443, 529, 577, 98, 242, 310, 720, 160, 607, 837, 502, 220, 253, 738, 911, 938, 198, 380, 964, 387, 496, 304, 859, 265, 311, 333, 882, 315, 980]]),))

- onnx_diagnostic.helpers.torch_helper.fake_torchdynamo_exporting()

Sets torch.compiler._is_exporting_flag to True to trigger pieces of code only enabled during export.

- onnx_diagnostic.helpers.torch_helper.from_numpy(tensor: ndarray) -> Tensor

Converts a numpy.ndarray to a torch.Tensor.

- onnx_diagnostic.helpers.torch_helper.get_weight_type(model: Module) -> dtype

Returns the most probable dtype in a model.
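The page does not say how "most probable" is decided. One plausible reading, assumed here, is the dtype shared by the largest number of parameters. The sketch below illustrates that idea on plain strings; the name most_common_dtype is hypothetical and the library may use a different criterion.

```python
from collections import Counter
from typing import Hashable, Iterable


def most_common_dtype(dtypes: Iterable[Hashable]) -> Hashable:
    """Returns the value that appears most often in ``dtypes``."""
    counts = Counter(dtypes)
    if not counts:
        raise ValueError("no dtype to choose from")
    # most_common(1) returns a list holding one (value, count) pair.
    return counts.most_common(1)[0][0]


# With PyTorch, one could pass (p.dtype for p in model.parameters());
# plain strings keep this sketch free of dependencies.
print(most_common_dtype(["float16", "float32", "float16"]))
```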

- onnx_diagnostic.helpers.torch_helper.int_device_to_torch_device(device_id: int) -> device

Converts a device defined as an integer (coming from torch.Tensor.get_device()) into a torch.device.
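The mapping can be sketched without torch, since torch.Tensor.get_device() returns -1 for CPU tensors and the device index otherwise. The helper below is hypothetical and assumes CUDA is the only accelerator; it returns a string that torch.device accepts rather than a torch.device object.

```python
def device_string_from_id(device_id: int) -> str:
    """Maps an integer device id to a device string such as "cpu" or "cuda:0".

    Hypothetical helper; assumes any non-negative id designates a CUDA device.
    """
    # get_device() reports -1 for tensors stored on the CPU.
    if device_id < 0:
        return "cpu"
    return f"cuda:{device_id}"


print(device_string_from_id(-1))
print(device_string_from_id(1))
```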

- onnx_diagnostic.helpers.torch_helper.is_stealing() -> bool

Returns True when called inside the steal_forward() context, False otherwise.

- onnx_diagnostic.helpers.torch_helper.is_torchdynamo_exporting() -> bool

Tells if torch is exporting a model. Relies on torch.compiler.is_exporting().

- onnx_diagnostic.helpers.torch_helper.model_statistics(model: Module)

Returns statistics on a model in a dictionary.

- onnx_diagnostic.helpers.torch_helper.onnx_dtype_to_torch_dtype(itype: int) -> dtype

Converts an onnx type into a torch dtype.

- Parameters:

itype – onnx dtype

- Returns:

torch dtype
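The conversion boils down to a lookup from the integer codes of onnx.TensorProto.DataType to torch dtypes. The sketch below covers a subset of codes and maps them to dtype names as strings so it runs without torch or onnx; the table and the function name torch_dtype_name are illustrative, not the library's own code.

```python
# Subset of ONNX TensorProto.DataType codes mapped to torch dtype names.
ONNX_TO_TORCH_NAME = {
    1: "float32",    # FLOAT
    2: "uint8",      # UINT8
    3: "int8",       # INT8
    5: "int16",      # INT16
    6: "int32",      # INT32
    7: "int64",      # INT64
    9: "bool",       # BOOL
    10: "float16",   # FLOAT16
    11: "float64",   # DOUBLE
    16: "bfloat16",  # BFLOAT16
}


def torch_dtype_name(itype: int) -> str:
    """Returns the torch dtype name for an ONNX element type (illustrative)."""
    try:
        return ONNX_TO_TORCH_NAME[itype]
    except KeyError:
        raise NotImplementedError(f"unsupported onnx type {itype}") from None


# With torch installed, getattr(torch, name) gives the dtype object.
print(torch_dtype_name(1), torch_dtype_name(7))
```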

- onnx_diagnostic.helpers.torch_helper.proto_from_tensor(arr: Tensor, name: str | None = None, verbose: int = 0) -> TensorProto

Converts a torch Tensor into a TensorProto.

- Parameters:

arr – tensor

name – optional name given to the TensorProto

verbose – display the type and shape

- Returns:

a TensorProto

- onnx_diagnostic.helpers.torch_helper.replace_string_by_dynamic(dynamic_shapes: Any) -> Any

Replaces strings by torch.export.Dim.DYNAMIC.
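Dynamic shapes are usually nested dictionaries, lists and tuples, so the replacement has to walk the structure. The sketch below shows one way to do that under stated assumptions: DYNAMIC is a stand-in object for torch.export.Dim.DYNAMIC so the code runs without torch, and replace_strings is a hypothetical name, not the library function.

```python
from typing import Any

# Stand-in for torch.export.Dim.DYNAMIC (assumption made for this sketch).
DYNAMIC = object()


def replace_strings(shapes: Any, marker: Any = DYNAMIC) -> Any:
    """Recursively replaces every string value found in nested containers."""
    if isinstance(shapes, str):
        return marker
    if isinstance(shapes, dict):
        # Keys (argument names or dimension indices) are left untouched.
        return {k: replace_strings(v, marker) for k, v in shapes.items()}
    if isinstance(shapes, (list, tuple)):
        return type(shapes)(replace_strings(v, marker) for v in shapes)
    # Anything else (None, integers, ...) is returned unchanged.
    return shapes


spec = {"x": {0: "batch", 1: "seq"}, "y": [{0: "batch"}, None]}
print(replace_strings(spec, marker="<DYNAMIC>"))
```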

- onnx_diagnostic.helpers.torch_helper.steal_append(name: str, obj: Any)

When outside a forward method, it is still possible to add a Python object which contains tensors and dump it after the execution of the model.

steal_append("quantize", [t1, t2])

The same code can be executed multiple times; in that case the name can be extended with a number.

- onnx_diagnostic.helpers.torch_helper.steal_forward(model: Module | Tuple[str, Module] | List[Module | Tuple[str, Module]], fprint: Callable = string_type, dump_file: str | None = None, dump_drop: Set[str] | None = None, submodules: bool = False, verbose: int = 0, storage_limit: int = 134217728, save_as_external_data: bool = True, **kwargs)

Makes the necessary modifications to steal the forward method and prints out inputs and outputs using onnx_diagnostic.helpers.string_type(). See example Steel method forward to guess inputs and dynamic shapes (with Tiny-LLM) or Dumps intermediate results of a torch model.

- Parameters:

model – a model or a list of models to monitor; every model can also be a tuple (name, model), in which case the name is displayed as well

fprint – function used to print out (or dump); by default, it is onnx_diagnostic.helpers.string_type()

kwargs – additional parameters sent to onnx_diagnostic.helpers.string_type() or any other function defined by fprint

dump_file – dumps stolen inputs and outputs in an onnx model; they can be restored with create_input_tensors_from_onnx_model

dump_drop – names of inputs too big to be dumped, they are dropped (only if dump_file is specified)

save_as_external_data – True by default, but it may be better to have everything in a single file if possible

submodules – if True and model is a module, the list is extended with all the submodules the module contains

verbose – verbosity

storage_limit – do not store objects bigger than this

The following example shows how to steal and dump all the inputs / outputs for a module and its submodules, then restore them.

<<<

import torch
from onnx_diagnostic.helpers.torch_helper import steal_forward
from onnx_diagnostic.helpers.mini_onnx_builder import (
    create_input_tensors_from_onnx_model,
)


class SubModel(torch.nn.Module):
    def forward(self, x):
        return x * x


class Model(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.s1 = SubModel()
        self.s2 = SubModel()

    def forward(self, x, y):
        return self.s1(x) + self.s2(y)


inputs = torch.rand(2, 1), torch.rand(2, 1)
model = Model()
dump_file = "dump_steal_forward_submodules.onnx"
with steal_forward(model, submodules=True, dump_file=dump_file):
    model(*inputs)

# Let's restore the stolen data.
restored = create_input_tensors_from_onnx_model(dump_file)
for k, v in sorted(restored.items()):
    if isinstance(v, tuple):
        args, kwargs = v
        print("input", k, args, kwargs)
    else:
        print("output", k, v)

>>>

+-Model-0 -- stolen forward for class Model -- iteration 0
<- args=(T1s2x1,T1s2x1) --- kwargs={}
+s1-SubModel-0 -- stolen forward for class SubModel -- iteration 0
<- args=(T1s2x1,) --- kwargs={}
-> T1s2x1
-s1-SubModel-0.
+s2-SubModel-0 -- stolen forward for class SubModel -- iteration 0
<- args=(T1s2x1,) --- kwargs={}
-> T1s2x1
-s2-SubModel-0.
-> T1s2x1
--Model-0.
input ('-Model-0', 0, 'I') (tensor([[0.0029], [0.4365]]), tensor([[0.3800], [0.6396]])) {}
output ('-Model-0', 0, 'O') tensor([[0.1444], [0.5996]])
input ('s1-SubModel-0', 0, 'I') (tensor([[0.0029], [0.4365]]),) {}
output ('s1-SubModel-0', 0, 'O') tensor([[8.6121e-06], [1.9052e-01]])
input ('s2-SubModel-0', 0, 'I') (tensor([[0.3800], [0.6396]]),) {}
output ('s2-SubModel-0', 0, 'O') tensor([[0.1444], [0.4091]])

Function steal_append() can be used to dump more tensors. Inside the context, is_stealing() returns True; it returns False otherwise.
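The underlying technique can be sketched with a context manager that temporarily swaps the forward method for a wrapper, then restores it on exit. This is a simplified illustration, not the library's implementation: spy_forward and TinyModel are hypothetical names, a plain Python class stands in for a torch.nn.Module, and nothing is dumped to a file.

```python
import contextlib
from typing import Any, Callable, Iterator, List, Tuple


class TinyModel:
    """Plain Python stand-in for a torch.nn.Module (assumption of this sketch)."""

    def forward(self, x: float, y: float) -> float:
        return x * x + y * y


@contextlib.contextmanager
def spy_forward(model: Any, fprint: Callable[[Any], str] = repr) -> Iterator[List[Tuple]]:
    """Temporarily wraps model.forward to print and record inputs and outputs."""
    original = model.forward
    calls: List[Tuple] = []

    def wrapper(*args, **kwargs):
        print(f"<- args={fprint(args)} --- kwargs={fprint(kwargs)}")
        result = original(*args, **kwargs)
        print(f"-> {fprint(result)}")
        calls.append((args, kwargs, result))
        return result

    # The instance attribute shadows the method defined on the class.
    model.forward = wrapper
    try:
        yield calls
    finally:
        # Always put the original bound method back, even after an exception.
        model.forward = original


model = TinyModel()
with spy_forward(model) as calls:
    model.forward(2.0, 3.0)
print(calls)
```

Restoring the method in a finally clause keeps the model usable even when the monitored call raises.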

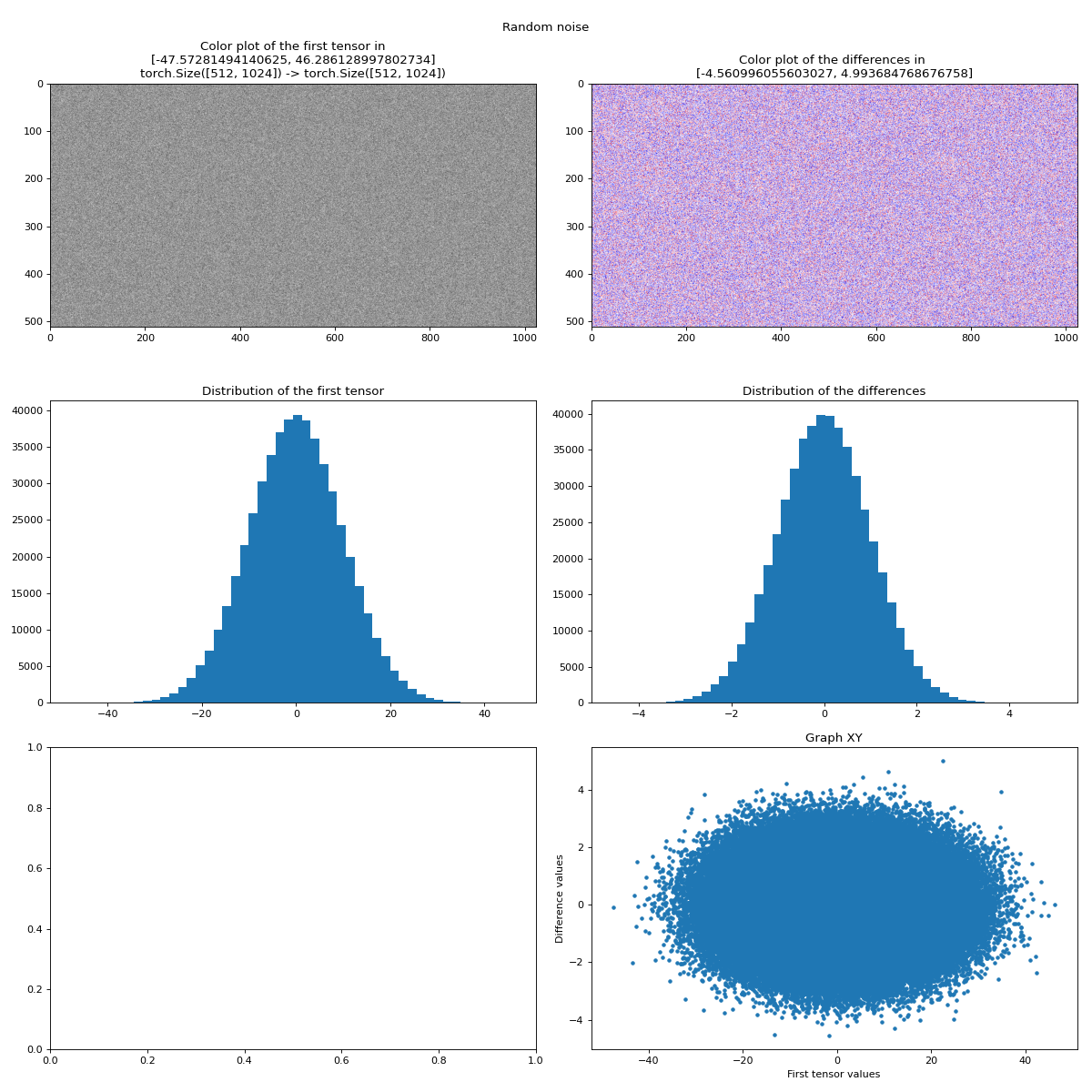

- onnx_diagnostic.helpers.torch_helper.study_discrepancies(t1: Tensor, t2: Tensor, bins: int = 50, figsize: Tuple[int, int] | None = (15, 15), title: str | None = None, name: str | None = None) -> matplotlib.axes.Axes

Computes different metrics for the discrepancies. Returns graphs.

import torch
from onnx_diagnostic.helpers.torch_helper import study_discrepancies

t1 = torch.randn((512, 1024)) * 10
t2 = t1 + torch.randn((512, 1024))
study_discrepancies(t1, t2, title="Random noise")

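The page does not list which metrics are computed. As a rough guide to what discrepancy metrics typically look like, the sketch below computes the maximum and mean absolute error and the maximum relative error on plain Python sequences. The function name, the chosen metrics and the epsilon are all assumptions; the library works on tensors and returns plots.

```python
from typing import Dict, Sequence


def discrepancy_metrics(
    expected: Sequence[float], got: Sequence[float], eps: float = 1e-8
) -> Dict[str, float]:
    """Computes a few usual discrepancy metrics between two sequences."""
    if len(expected) != len(got) or len(expected) == 0:
        raise ValueError("both sequences must be non-empty and of equal length")
    abs_err = [abs(e - g) for e, g in zip(expected, got)]
    # eps avoids a division by zero when an expected value is 0.
    rel_err = [a / (abs(e) + eps) for a, e in zip(abs_err, expected)]
    return {
        "max_abs": max(abs_err),
        "mean_abs": sum(abs_err) / len(abs_err),
        "max_rel": max(rel_err),
    }


print(discrepancy_metrics([1.0, 2.0, 4.0], [1.0, 2.5, 3.0]))
```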

- onnx_diagnostic.helpers.torch_helper.to_any(value: Any, to_value: dtype | device | str) -> Any

Applies torch.to if applicable. Goes recursively.
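The recursion can be illustrated with duck typing: call .to() on anything that has it, and walk through containers otherwise. In the sketch below, to_recursive and FakeTensor are hypothetical names; FakeTensor only mimics the .to() method of a tensor so that the example runs without torch, and the container types handled by the library may differ.

```python
from typing import Any


def to_recursive(value: Any, target: Any) -> Any:
    """Calls value.to(target) when available, otherwise walks containers."""
    if callable(getattr(value, "to", None)):
        return value.to(target)
    if isinstance(value, dict):
        return {k: to_recursive(v, target) for k, v in value.items()}
    if isinstance(value, (list, tuple)):
        return type(value)(to_recursive(v, target) for v in value)
    # Integers, strings, None, ... are returned unchanged.
    return value


class FakeTensor:
    """Minimal stand-in exposing a tensor-like .to() method (assumption)."""

    def __init__(self, dtype: str):
        self.dtype = dtype

    def to(self, dtype: str) -> "FakeTensor":
        return FakeTensor(dtype)


nested = {"a": [FakeTensor("float32"), 3], "b": (FakeTensor("float32"),)}
converted = to_recursive(nested, "float16")
print(converted["a"][0].dtype, converted["a"][1], converted["b"][0].dtype)
```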

- onnx_diagnostic.helpers.torch_helper.to_numpy(tensor: Tensor) -> ndarray

Converts a torch.Tensor to a numpy.ndarray.

- onnx_diagnostic.helpers.torch_helper.to_tensor(tensor: TensorProto, base_dir: str = '') -> Tensor

Converts a TensorProto to a torch Tensor.

- Parameters:

tensor – a TensorProto object.

base_dir – if external tensor exists, base_dir can help to find the path to it

- Returns:

the converted tensor

- onnx_diagnostic.helpers.torch_helper.torch_deepcopy(value: Any) -> Any

Makes a deep copy.

- Parameters:

value – any value

- Returns:

a deep copy