
Export whisper-tiny with InputObserver

This example reuses the recipe introduced in the example Export a LLM with InputObserver (with Tiny-LLM) and applies it to the model openai/whisper-tiny.
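The recipe relies on InputObserver, which is assumed here to record the inputs the model receives during real calls so that export arguments and dynamic shapes can be derived from them. The snippet below is not the onnx_diagnostic API. It is a minimal pure-Python sketch of one idea such an observer can support, assuming each observed call is summarized as a mapping from input name to tensor shape: an axis whose size differs between two calls is treated as dynamic. The helper infer_dynamic_dims and the shapes in the usage lines are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Shape = Tuple[int, ...]


def infer_dynamic_dims(observed: List[Dict[str, Shape]]) -> Dict[str, Set[int]]:
    """Return, for every input name, the axes whose size changed across calls.

    Hypothetical helper: each element of ``observed`` describes one forward
    call as a mapping from input name to tensor shape.
    """
    if not observed:
        return {}
    dynamic: Dict[str, Set[int]] = {}
    # Only inputs present in the first call are considered in this sketch.
    for name in observed[0]:
        shapes = [call[name] for call in observed if name in call]
        rank = len(shapes[0])
        if any(len(s) != rank for s in shapes):
            raise ValueError(f"input {name!r} changes rank across calls")
        # An axis is dynamic as soon as two calls disagree on its size.
        dynamic[name] = {
            axis for axis in range(rank) if len({s[axis] for s in shapes}) > 1
        }
    return dynamic


# Made-up shapes for two calls, for illustration only.
calls = [
    {"input_features": (1, 80, 3000), "decoder_input_ids": (1, 4)},
    {"input_features": (1, 80, 3000), "decoder_input_ids": (1, 7)},
]
print(infer_dynamic_dims(calls))  # {'input_features': set(), 'decoder_input_ids': {1}}
```

A limitation of this approach: an axis only appears as dynamic if the observed calls actually vary along it, so calls made only with batch size 1 leave the batch axis static.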

The model

```python
import pandas
from transformers import WhisperProcessor, WhisperForConditionalGeneration
from datasets import load_dataset
from onnx_diagnostic import doc
from onnx_diagnostic.helpers import string_type
from onnx_diagnostic.export.api import to_onnx
from onnx_diagnostic.torch_export_patches import (
    register_additional_serialization_functions,
    torch_export_patches,
)
from onnx_diagnostic.investigate.input_observer import InputObserver

# load model and processor
processor = WhisperProcessor.from_pretrained("openai/whisper-tiny")
model = WhisperForConditionalGeneration.from_pretrained("openai/whisper-tiny")
# do not force any decoder ids (language/task tokens) during generation
model.config.forced_decoder_ids = None

# load dummy dataset and read audio files
ds = load_dataset("hf-internal-testing/librispeech_asr_dummy", "clean", split="validation")
samples = [ds[0]["audio"], ds[2]["audio"]]
for s in samples:
    # number of samples, value range and sampling rate of each clip
    print(s["array"].shape, s["array"].min(), s["array"].max(), s["sampling_rate"])

# convert every clip into log-mel input features
input_features = [
    processor(
        sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt"
    ).input_features
    for sample in samples
]
```
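Both clips produce input features of the same shape even though their lengths differ: Whisper's feature extractor pads or truncates every clip to 30 seconds of 16 kHz audio before computing an 80-bin log-mel spectrogram with a hop of 160 samples. The plain-Python arithmetic below shows where the (1, 80, 3000) shape comes from. It is a sketch that assumes these default values of WhisperFeatureExtractor for whisper-tiny instead of reading them from the processor; pad_or_trim and expected_feature_shape are hypothetical helpers, not part of transformers.

```python
# Assumed defaults of Whisper's feature extractor (see lead-in).
SAMPLING_RATE = 16_000  # Hz
CHUNK_LENGTH = 30       # seconds of audio per window
HOP_LENGTH = 160        # samples between two consecutive frames
N_MELS = 80             # mel bins for whisper-tiny

N_SAMPLES = CHUNK_LENGTH * SAMPLING_RATE  # 480000 samples
N_FRAMES = N_SAMPLES // HOP_LENGTH        # 3000 frames


def pad_or_trim(audio, length=N_SAMPLES):
    """Zero-pad or truncate a 1-D sequence of samples to exactly ``length``."""
    audio = list(audio[:length])
    return audio + [0.0] * (length - len(audio))


def expected_feature_shape(batch_size=1):
    """Shape of ``input_features`` for a batch, independent of clip length."""
    return (batch_size, N_MELS, N_FRAMES)


print(N_SAMPLES, N_FRAMES, expected_feature_shape())  # 480000 3000 (1, 80, 3000)
```

In the real pipeline, the sampling rate and hop length can be read from processor.feature_extractor (its sampling_rate and hop_length attributes). Because of this fixed window, observing these two samples alone does not reveal a varying time axis in input_features.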


Loading weights: 77%|███████▋ | 128/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.final_layer_norm.weight]

Loading weights: 77%|███████▋ | 129/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.k_proj.weight]

Loading weights: 77%|███████▋ | 129/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.k_proj.weight]

Loading weights: 78%|███████▊ | 130/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.out_proj.bias]

Loading weights: 78%|███████▊ | 130/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.out_proj.bias]

Loading weights: 78%|███████▊ | 131/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.out_proj.weight]

Loading weights: 78%|███████▊ | 131/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.out_proj.weight]

Loading weights: 79%|███████▉ | 132/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.q_proj.bias]

Loading weights: 79%|███████▉ | 132/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.q_proj.bias]

Loading weights: 80%|███████▉ | 133/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.q_proj.weight]

Loading weights: 80%|███████▉ | 133/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.q_proj.weight]

Loading weights: 80%|████████ | 134/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.v_proj.bias]

Loading weights: 80%|████████ | 134/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.v_proj.bias]

Loading weights: 81%|████████ | 135/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.v_proj.weight]

Loading weights: 81%|████████ | 135/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn.v_proj.weight]

Loading weights: 81%|████████▏ | 136/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn_layer_norm.bias]

Loading weights: 81%|████████▏ | 136/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn_layer_norm.bias]

Loading weights: 82%|████████▏ | 137/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn_layer_norm.weight]

Loading weights: 82%|████████▏ | 137/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.1.self_attn_layer_norm.weight]

Loading weights: 83%|████████▎ | 138/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc1.bias]

Loading weights: 83%|████████▎ | 138/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc1.bias]

Loading weights: 83%|████████▎ | 139/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc1.weight]

Loading weights: 83%|████████▎ | 139/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc1.weight]

Loading weights: 84%|████████▍ | 140/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc2.bias]

Loading weights: 84%|████████▍ | 140/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc2.bias]

Loading weights: 84%|████████▍ | 141/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc2.weight]

Loading weights: 84%|████████▍ | 141/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.fc2.weight]

Loading weights: 85%|████████▌ | 142/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.final_layer_norm.bias]

Loading weights: 85%|████████▌ | 142/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.final_layer_norm.bias]

Loading weights: 86%|████████▌ | 143/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.final_layer_norm.weight]

Loading weights: 86%|████████▌ | 143/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.final_layer_norm.weight]

Loading weights: 86%|████████▌ | 144/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.k_proj.weight]

Loading weights: 86%|████████▌ | 144/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.k_proj.weight]

Loading weights: 87%|████████▋ | 145/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.out_proj.bias]

Loading weights: 87%|████████▋ | 145/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.out_proj.bias]

Loading weights: 87%|████████▋ | 146/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.out_proj.weight]

Loading weights: 87%|████████▋ | 146/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.out_proj.weight]

Loading weights: 88%|████████▊ | 147/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.q_proj.bias]

Loading weights: 88%|████████▊ | 147/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.q_proj.bias]

Loading weights: 89%|████████▊ | 148/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.q_proj.weight]

Loading weights: 89%|████████▊ | 148/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.q_proj.weight]

Loading weights: 89%|████████▉ | 149/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.v_proj.bias]

Loading weights: 89%|████████▉ | 149/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.v_proj.bias]

Loading weights: 90%|████████▉ | 150/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.v_proj.weight]

Loading weights: 90%|████████▉ | 150/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn.v_proj.weight]

Loading weights: 90%|█████████ | 151/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn_layer_norm.bias]

Loading weights: 90%|█████████ | 151/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn_layer_norm.bias]

Loading weights: 91%|█████████ | 152/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn_layer_norm.weight]

Loading weights: 91%|█████████ | 152/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.2.self_attn_layer_norm.weight]

Loading weights: 92%|█████████▏| 153/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc1.bias]

Loading weights: 92%|█████████▏| 153/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc1.bias]

Loading weights: 92%|█████████▏| 154/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc1.weight]

Loading weights: 92%|█████████▏| 154/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc1.weight]

Loading weights: 93%|█████████▎| 155/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc2.bias]

Loading weights: 93%|█████████▎| 155/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc2.bias]

Loading weights: 93%|█████████▎| 156/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc2.weight]

Loading weights: 93%|█████████▎| 156/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.fc2.weight]

Loading weights: 94%|█████████▍| 157/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.final_layer_norm.bias]

Loading weights: 94%|█████████▍| 157/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.final_layer_norm.bias]

Loading weights: 95%|█████████▍| 158/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.final_layer_norm.weight]

Loading weights: 95%|█████████▍| 158/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.final_layer_norm.weight]

Loading weights: 95%|█████████▌| 159/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.k_proj.weight]

Loading weights: 95%|█████████▌| 159/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.k_proj.weight]

Loading weights: 96%|█████████▌| 160/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.out_proj.bias]

Loading weights: 96%|█████████▌| 160/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.out_proj.bias]

Loading weights: 96%|█████████▋| 161/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.out_proj.weight]

Loading weights: 96%|█████████▋| 161/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.out_proj.weight]

Loading weights: 97%|█████████▋| 162/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.q_proj.bias]

Loading weights: 97%|█████████▋| 162/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.q_proj.bias]

Loading weights: 98%|█████████▊| 163/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.q_proj.weight]

Loading weights: 98%|█████████▊| 163/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.q_proj.weight]

Loading weights: 98%|█████████▊| 164/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.v_proj.bias]

Loading weights: 98%|█████████▊| 164/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.v_proj.bias]

Loading weights: 99%|█████████▉| 165/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.v_proj.weight]

Loading weights: 99%|█████████▉| 165/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn.v_proj.weight]

Loading weights: 99%|█████████▉| 166/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn_layer_norm.bias]

Loading weights: 99%|█████████▉| 166/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn_layer_norm.bias]

Loading weights: 100%|██████████| 167/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn_layer_norm.weight]

Loading weights: 100%|██████████| 167/167 [00:00<00:00, 516.52it/s, Materializing param=model.encoder.layers.3.self_attn_layer_norm.weight]

Loading weights: 100%|██████████| 167/167 [00:00<00:00, 649.67it/s, Materializing param=model.encoder.layers.3.self_attn_layer_norm.weight]

(93680,) -0.33911133 0.38793945 16000

(199760,) -0.4654541 0.59262085 16000
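The two clips last about 5.9 s and 12.5 s, yet the processor turns both into `input_features` of the same shape. The arithmetic below is a sketch of why. It assumes the standard Whisper front-end settings: 16 kHz audio padded to 30 s, a hop of 160 samples, 80 mel bins, a stride-2 convolution in the encoder, and a hidden size of 384 for the tiny model. These constants are assumptions written by hand and are not read from `processor` or `model` in this example.

```python
# Assumed Whisper front-end constants for openai/whisper-tiny (not read from the processor).
SAMPLING_RATE = 16_000  # Hz, the sampling_rate printed above
CHUNK_SECONDS = 30      # audio is padded (or truncated) to 30 seconds
HOP_LENGTH = 160        # samples between two consecutive STFT frames (10 ms)
N_MELS = 80             # mel bins used by whisper-tiny
D_MODEL = 384           # hidden size of whisper-tiny


def expected_shapes(num_samples: int) -> dict:
    """Return the clip duration and the tensor shapes expected for one clip."""
    duration = num_samples / SAMPLING_RATE
    padded = CHUNK_SECONDS * SAMPLING_RATE  # 480000 samples, whatever the clip length
    frames = padded // HOP_LENGTH           # 3000 mel frames
    positions = frames // 2                 # the encoder's second convolution has stride 2
    return {
        "duration_s": duration,
        "input_features": (1, N_MELS, frames),
        "encoder_output": (1, positions, D_MODEL),
    }


for n in (93680, 199760):
    print(n, expected_shapes(n))
```

Both clips give the same shapes, which is consistent with the `T1s1x80x3000` inputs and `T1s1x1500x384` outputs printed for the encoder.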

Capture the inputs and outputs of the encoder and the decoder while the model transcribes both samples.

observer_encoder, observer_decoder = InputObserver(), InputObserver()
with register_additional_serialization_functions(patch_transformers=True):
    for features in input_features:
        with (
            observer_encoder(model.model.encoder, store_n_calls=4),
            observer_decoder(model.model.decoder, store_n_calls=4),
        ):
            predicted_ids = model.generate(features)

print(f"{observer_encoder.num_obs()} observations stored for encoder.")
print(f"{observer_decoder.num_obs()} observations stored for decoder.")

4 observations stored for encoder.

8 observations stored for decoder.
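Each observer is used as a context manager that records the calls made to one submodule while `model.generate` runs. The sketch below illustrates the general idea with a hypothetical `ToyObserver` that temporarily shadows `forward` and keeps at most `store_n_calls` calls per `with` block. It is not onnx_diagnostic's implementation: `ToyObserver`, `ToyModule` and the per-block limit are assumptions made for illustration.

```python
import contextlib


class ToyModule:
    """Stand-in for a torch.nn.Module: __call__ dispatches to forward."""

    def forward(self, input_features, attention_mask=None):
        return len(input_features)

    def __call__(self, *args, **kwargs):
        return self.forward(*args, **kwargs)


class ToyObserver:
    """Hypothetical, simplified input observer (not onnx_diagnostic's implementation)."""

    def __init__(self):
        self.calls = []  # list of (args, kwargs) pairs

    def num_obs(self) -> int:
        return len(self.calls)

    @contextlib.contextmanager
    def __call__(self, module, store_n_calls: int = 4):
        original = module.forward  # bound method defined on the class
        stored = 0

        def recording_forward(*args, **kwargs):
            nonlocal stored
            if stored < store_n_calls:  # keep at most store_n_calls calls per with-block
                self.calls.append((args, dict(kwargs)))
                stored += 1
            return original(*args, **kwargs)

        module.forward = recording_forward  # instance attribute shadows the class method
        try:
            yield self
        finally:
            del module.forward  # drop the shadow, the class method is visible again


observer = ToyObserver()
encoder = ToyModule()
for features in ([1, 2, 3], [4, 5]):
    with observer(encoder, store_n_calls=2):
        for _ in range(3):  # pretend generate() calls the module three times
            encoder(features, attention_mask=None)

print(observer.num_obs())  # 4: two calls kept per with-block, two blocks
```

Under this per-block assumption, two audio samples with `store_n_calls=4` would give the 8 decoder observations printed above.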

Export the encoder

# Infer keyword arguments and dynamic shapes covering all stored observations.
kwargs = observer_encoder.infer_arguments()
dynamic_shapes = observer_encoder.infer_dynamic_shapes(set_batch_dimension_for=True)
print(f"encoder kwargs={string_type(kwargs, with_shape=True)}")
print(f"encoder dynamic_shapes={dynamic_shapes}")

# Show every stored input candidate and its aligned list of tensors.
for candidate in observer_encoder.info.inputs:
    print(
        " ",
        candidate,
        candidate.str_obs(),
        string_type(candidate.aligned_flat_list, with_shape=True),
    )

filename_encoder = "plot_export_whisper_tiny_input_observer_encoder.onnx"
with torch_export_patches(patch_transformers=True):
    to_onnx(
        model.model.encoder,
        args=(),
        filename=filename_encoder,
        kwargs=kwargs,
        dynamic_shapes=dynamic_shapes,
        exporter="custom",
    )

encoder kwargs=dict(input_features:T1s1x80x3000,attention_mask:T7s1x80)

encoder dynamic_shapes={'input_features': {0: DimHint(DYNAMIC)}, 'attention_mask': {0: DimHint(DYNAMIC)}}

InputCandidate(1 args, 0 kwargs, 1 tensors, 2 aligned tensors) InputCandidate(args=(T1s1x80x3000,), kwargs={}, cst_kwargs={}) #2[T1s1x80x3000,None]

InputCandidate(0 args, 2 kwargs, 2 tensors, 2 aligned tensors) InputCandidate(args=(), kwargs=dict(input_features:T1s1x80x3000,attention_mask:T7s1x80), cst_kwargs={}) #2[T1s1x80x3000,T7s1x80]

InputCandidate(1 args, 0 kwargs, 1 tensors, 2 aligned tensors) InputCandidate(args=(T1s1x80x3000,), kwargs={}, cst_kwargs={}) #2[T1s1x80x3000,None]

InputCandidate(0 args, 2 kwargs, 2 tensors, 2 aligned tensors) InputCandidate(args=(), kwargs=dict(input_features:T1s1x80x3000,attention_mask:T7s1x80), cst_kwargs={}) #2[T1s1x80x3000,T7s1x80]
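The encoder was observed through two kinds of calls: one passing `input_features` positionally, one passing `input_features` and `attention_mask` by keyword. Both end up with two aligned tensors, the positional one padded with `None`. The sketch below shows one way such an alignment can be done with `inspect.signature`. The `encoder_forward` signature and the helpers `observed_names` and `align` are hypothetical, not taken from transformers or onnx_diagnostic, and the strings `"F"` and `"M"` stand in for tensors.

```python
import inspect


def encoder_forward(input_features, attention_mask=None, head_mask=None):
    """Hypothetical, simplified signature used only for this illustration."""


def observed_names(fn, calls):
    """Parameters passed in at least one call, in signature order."""
    sig = inspect.signature(fn)
    seen = set()
    for args, kwargs in calls:
        # bind() maps positional and keyword arguments to parameter names;
        # .arguments only contains the parameters actually passed (no defaults)
        seen.update(sig.bind(*args, **kwargs).arguments)
    return [name for name in sig.parameters if name in seen]


def align(fn, args, kwargs, names):
    """Flatten one call onto the common parameter list, with None for missing ones."""
    passed = inspect.signature(fn).bind(*args, **kwargs).arguments
    return [passed.get(name) for name in names]


calls = [(("F",), {}), ((), {"input_features": "F", "attention_mask": "M"})]
names = observed_names(encoder_forward, calls)
print(names)  # ['input_features', 'attention_mask']
for args, kwargs in calls:
    print(align(encoder_forward, args, kwargs, names))
# ['F', None]
# ['F', 'M']
```

`head_mask` is never passed, so it does not appear in the aligned list, just as only two aligned tensors are reported above.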

Let’s measure the discrepancies between the PyTorch outputs and the ONNX Runtime outputs for every stored observation.

data = observer_encoder.check_discrepancies(filename_encoder, progress_bar=True)
print(pandas.DataFrame(data))


100%|██████████| 4/4 [00:00<00:00, 5.25it/s]

|   | abs | rel | sum | n | dnan | dev | >0.1 | ... | ort_duration | n_inputs | n_none | n_empty | inputs | outputs_torch | outputs_ort |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.004698 | 0.172071 | 2.154763 | 576000.0 | 0 | 0 | 0 | ... | 0.289419 | 2 | 1 | 1 | dict(input_features:T1s1x80x3000,attention_mas... | #1[T1s1x1500x384] | #1[T1s1x1500x384] |
| 1 | 0.004698 | 0.172071 | 2.154763 | 576000.0 | 0 | 0 | 0 | ... | 0.153828 | 2 | 0 | 0 | dict(input_features:T1s1x80x3000,attention_mas... | #1[T1s1x1500x384] | #1[T1s1x1500x384] |
| 2 | 0.002703 | 0.211847 | 1.891840 | 576000.0 | 0 | 0 | 0 | ... | 0.115835 | 2 | 1 | 1 | dict(input_features:T1s1x80x3000,attention_mas... | #1[T1s1x1500x384] | #1[T1s1x1500x384] |
| 3 | 0.002703 | 0.211847 | 1.891840 | 576000.0 | 0 | 0 | 0 | ... | 0.148021 | 2 | 0 | 0 | dict(input_features:T1s1x80x3000,attention_mas... | #1[T1s1x1500x384] | #1[T1s1x1500x384] |

[4 rows x 18 columns]
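The `n` column matches the number of compared values: the encoder returns one tensor of shape 1x1500x384, that is 576000 values. The sketch below computes similar statistics on two flat lists. It assumes that `abs` is the largest absolute difference, `rel` the largest relative difference, `sum` the sum of absolute differences, and `>0.1` / `>0.01` the number of values differing by more than those thresholds. These definitions are assumptions; `check_discrepancies` may compute them differently (for example with another epsilon in `rel`), and the input values are made up.

```python
def discrepancies(expected, got, eps=1e-6):
    """Hypothetical re-implementation of a few summary columns (abs, rel, sum, n, >0.1, >0.01)."""
    if len(expected) != len(got):
        raise ValueError("both outputs must have the same number of elements")
    diffs = [abs(e - g) for e, g in zip(expected, got)]
    # relative difference: absolute difference divided by the reference magnitude
    rels = [d / (abs(e) + eps) for d, e in zip(diffs, expected)]
    return {
        "abs": max(diffs),
        "rel": max(rels),
        "sum": sum(diffs),
        "n": len(diffs),
        ">0.1": sum(d > 0.1 for d in diffs),
        ">0.01": sum(d > 0.01 for d in diffs),
    }


torch_output = [0.5, -1.0, 2.0, 0.0]   # flattened reference values (made up)
ort_output = [0.5, -1.02, 2.001, 0.0]  # flattened ONNX Runtime values (made up)
print(discrepancies(torch_output, ort_output))  # abs is about 0.02, one value differs by more than 0.01
```

Under these definitions, a large `rel` (about 0.2 above) next to a small `abs` (below 0.005) simply means that some reference values are close to zero.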

Export the decoder

kwargs = observer_decoder.infer_arguments()
dynamic_shapes = observer_decoder.infer_dynamic_shapes(set_batch_dimension_for=True)
print(f"decoder kwargs={string_type(kwargs, with_shape=True)}")
print(f"decoder dynamic_shapes={dynamic_shapes}")

filename_decoder = "plot_export_whisper_tiny_input_observer_decoder.onnx"
with torch_export_patches(patch_transformers=True):
    # Reuse the arguments and shapes computed above instead of inferring them twice.
    to_onnx(
        model.model.decoder,
        args=(),
        filename=filename_decoder,
        kwargs=kwargs,
        dynamic_shapes=dynamic_shapes,
        exporter="custom",
    )

decoder kwargs=dict(input_ids:T7s1x4,encoder_hidden_states:T1s1x1500x384,past_key_values:EncoderDecoderCache(self_attention_cache=DynamicCache(key_cache=#4[T1s1x6x0x64,T1s1x6x0x64,T1s1x6x0x64,T1s1x6x0x64], value_cache=#4[T1s1x6x0x64,T1s1x6x0x64,T1s1x6x0x64,T1s1x6x0x64]), cross_attention_cache=DynamicCache(key_cache=#4[T1s1x6x1500x64,T1s1x6x1500x64,T1s1x6x1500x64,T1s1x6x1500x64], value_cache=#4[T1s1x6x1500x64,T1s1x6x1500x64,T1s1x6x1500x64,T1s1x6x1500x64])),cache_position:T7s4)

decoder dynamic_shapes={'input_ids': {0: DimHint(DYNAMIC), 1: DimHint(DYNAMIC)}, 'encoder_hidden_states': {0: DimHint(DYNAMIC)}, 'past_key_values': [[{0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC), 2: DimHint(DYNAMIC)}], [{0: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC)}, {0: DimHint(DYNAMIC)}]], 'cache_position': {0: DimHint(DYNAMIC)}}
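The decoder shapes follow a visible pattern. `input_ids` gets a dynamic sequence axis, the eight self-attention cache tensors (four layers, key and value) get a dynamic axis 2, while the eight cross-attention tensors, whose 1500 encoder positions never change, only get the batch axis. A plausible rule, sketched below under that assumption: mark an axis dynamic when its size differs between observations, then add axis 0 because of `set_batch_dimension_for=True`. `infer_dynamic_axes` is a hypothetical helper, not onnx_diagnostic's code, and the shapes are illustrative, chosen to mirror the printed output rather than read from the observer.

```python
def infer_dynamic_axes(shapes, set_batch=True):
    """Hypothetical rule: an axis is dynamic if its size changes across observations."""
    ranks = {len(shape) for shape in shapes}
    if len(ranks) != 1:
        raise ValueError(f"inconsistent ranks: {sorted(ranks)}")
    rank = ranks.pop()
    axes = {axis for axis in range(rank) if len({shape[axis] for shape in shapes}) > 1}
    if set_batch:
        axes.add(0)  # mimics set_batch_dimension_for=True: the batch axis is always dynamic
    return {axis: "DYNAMIC" for axis in sorted(axes)}


# Illustrative shapes, chosen to mirror the printed output (not read from the observer).
observed = {
    "input_features": [(1, 80, 3000), (1, 80, 3000)],
    "input_ids": [(1, 4), (1, 1)],
    "self_attention_key": [(1, 6, 0, 64), (1, 6, 4, 64)],
    "cross_attention_key": [(1, 6, 1500, 64), (1, 6, 1500, 64)],
}
for name, shapes in observed.items():
    print(name, infer_dynamic_axes(shapes))
```

With a single observation per shape, only the batch axis would be marked, which is why storing several calls per generation matters for the decoder.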

Let’s measure the discrepancies for the decoder, this time with atol=1e-3.

data = observer_decoder.check_discrepancies(filename_decoder, progress_bar=True, atol=1e-3)
print(pandas.DataFrame(data))


100%|██████████| 8/8 [00:00<00:00, 9.73it/s]

|   | error | SUCCESS | index | duration_torch | ort_duration | n_inputs | n_none | ... | rel | sum | n | dnan | dev | >0.1 | >0.01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | [ONNXRuntimeError] : 2 : INVALID_ARGUMENT : No... | False | 2 | 0.091052 | 0.412167 | 19 | 17 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 1 | NaN | False | 9 | 0.025122 | 0.007265 | 19 | 16 | ... | 1289.270441 | 2.292150e+06 | 4621824.0 | 0.0 | 0.0 | 3797779.0 | 4530052.0 |
| 2 | NaN | True | 9 | 0.007005 | 0.010515 | 19 | 0 | ... | 0.001967 | 1.118496e-02 | 4623744.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 3 | NaN | True | 9 | 0.005593 | 0.005936 | 19 | 0 | ... | 0.000828 | 1.679566e-02 | 4626816.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 4 | [ONNXRuntimeError] : 2 : INVALID_ARGUMENT : No... | False | 2 | 0.020414 | 0.003014 | 19 | 17 | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| 5 | NaN | False | 9 | 0.021384 | 0.005686 | 19 | 16 | ... | 1437.924748 | 2.460245e+06 | 4621824.0 | 0.0 | 0.0 | 3856072.0 | 4536294.0 |
| 6 | NaN | True | 9 | 0.004642 | 0.006332 | 19 | 0 | ... | 0.004936 | 1.089283e-02 | 4623744.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 7 | NaN | True | 9 | 0.004207 | 0.007222 | 19 | 0 | ... | 0.011505 | 9.736205e-03 | 4626816.0 | 0.0 | 0.0 | 0.0 | 0.0 |

[8 rows x 19 columns]
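Only four of the eight decoder observations pass: rows 0 and 4 raise an ONNX Runtime error, and rows 1 and 5 run but disagree strongly with PyTorch. The failing rows are also the ones where most of the 19 inputs (`input_ids`, `encoder_hidden_states`, 16 cache tensors and `cache_position`) are missing, with `n_none` equal to 17 or 16. The helper below is a hypothetical triage function, not part of onnx_diagnostic, that buckets rows this way. It is fed with values copied from the printed table and assumes rows shaped like the dicts returned by `pandas.DataFrame(data).to_dict("records")`.

```python
import math

# Values copied from the printed table above; math.nan marks an empty (NaN) cell.
rows = [
    {"error": "[ONNXRuntimeError] : 2 : INVALID_ARGUMENT : No...", "SUCCESS": False, "n_none": 17},
    {"error": math.nan, "SUCCESS": False, "n_none": 16},
    {"error": math.nan, "SUCCESS": True, "n_none": 0},
    {"error": math.nan, "SUCCESS": True, "n_none": 0},
    {"error": "[ONNXRuntimeError] : 2 : INVALID_ARGUMENT : No...", "SUCCESS": False, "n_none": 17},
    {"error": math.nan, "SUCCESS": False, "n_none": 16},
    {"error": math.nan, "SUCCESS": True, "n_none": 0},
    {"error": math.nan, "SUCCESS": True, "n_none": 0},
]


def triage(rows):
    """Split observation indices into runtime errors, numerical mismatches and successes."""
    buckets = {"runtime_error": [], "mismatch": [], "success": []}
    for i, row in enumerate(rows):
        if isinstance(row["error"], str):  # an error message was recorded
            buckets["runtime_error"].append(i)
        elif row["SUCCESS"]:
            buckets["success"].append(i)
        else:  # ran without error but outside the tolerance
            buckets["mismatch"].append(i)
    return buckets


print(triage(rows))
# {'runtime_error': [0, 4], 'mismatch': [1, 5], 'success': [2, 3, 6, 7]}
```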

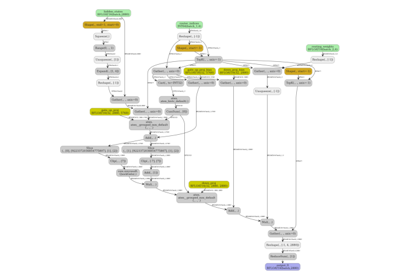

doc.save_fig(doc.plot_dot(filename_decoder), f"{filename_decoder}.png", dpi=400)

Total running time of the script: (0 minutes 47.460 seconds)

Related examples