Examples Gallery

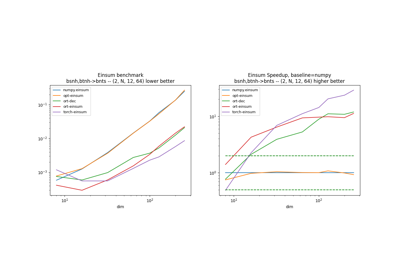

Measuring onnxruntime performance against a cython binding

Measures loading, saving time for an onnx model in python

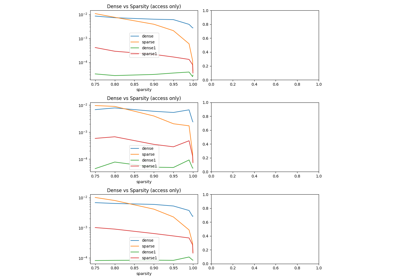

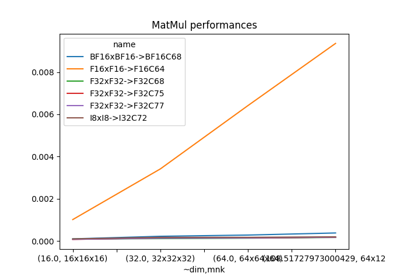

Measuring Gemm performance with different input and output tests

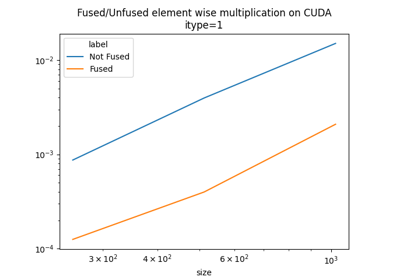

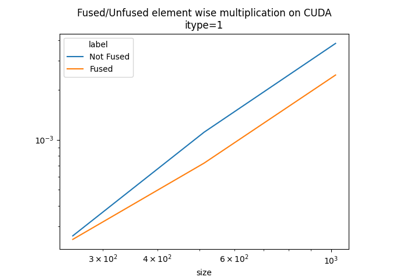

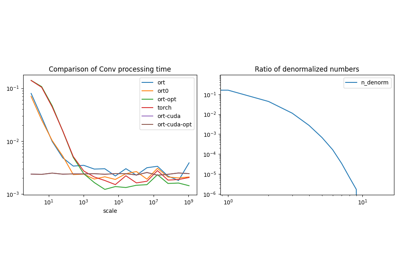

How float format has an impact on speed computation

Profiles a simple onnx graph including a single Gemm