Compares implementations of Einsum

This example compares several equations passed to numpy.einsum(). It benchmarks the numpy implementation against a custom implementation, the onnxruntime implementation and the opt-einsum optimisation. If available, tensorflow and pytorch are included as well.

The custom implementation does not do any transpose. It uses parallelisation and SIMD optimization when the summation happens on the last axis of both matrices. It only implements matrix multiplication. We also measure the improvement made with the function einsum.
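To make the notation concrete, the sketch below checks with numpy only that the first benchmarked equation, `bsnh,btnh->bnts`, is a batched matrix multiplication once the axes are reordered. The shapes are small, arbitrary values chosen for illustration, and the explicit transposes only explain what the equation means; they are not how the custom implementation works.

```python
import numpy

rng = numpy.random.default_rng(0)
x = rng.random((2, 3, 4, 5), dtype=numpy.float32)  # axes b, s, n, h
y = rng.random((2, 6, 4, 5), dtype=numpy.float32)  # axes b, t, n, h

# Reference result: sum over h, output axes b, n, t, s.
expected = numpy.einsum("bsnh,btnh->bnts", x, y)

# Same computation written as a batched matrix product.
left = y.transpose(0, 2, 1, 3)   # b, n, t, h
right = x.transpose(0, 2, 3, 1)  # b, n, h, s
got = left @ right               # b, n, t, s

print(got.shape)
print(numpy.allclose(expected, got, atol=1e-5))
```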

Available optimisation

The code shows which optimisation the custom implementation uses (AVX or SSE) and the number of available processors, which is also the default number of threads used for parallelisation.
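As a rough stand-in for the processor count mentioned above, the standard library can report it. This sketch uses `os.cpu_count()` only; it is an assumption that the custom implementation reports a comparable number, and it says nothing about AVX or SSE support.

```python
import os

# os.cpu_count() returns None when the count cannot be determined,
# so fall back to 1 in that case.
n_processors = os.cpu_count() or 1
print(f"available processors: {n_processors}")
```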

import logging
import numpy
import pandas
import matplotlib.pyplot as plt
from onnx import TensorProto
from onnx.helper import (
    make_model,
    make_graph,
    make_node,
    make_tensor_value_info,
    make_opsetid,
)
from onnxruntime import InferenceSession
from onnx_extended.ext_test_case import measure_time, unit_test_going
from tqdm import tqdm
from opt_einsum import contract
from onnx_extended.tools.einsum.einsum_fct import _einsum

logging.getLogger("matplotlib.font_manager").setLevel(logging.ERROR)
logging.getLogger("matplotlib.ticker").setLevel(logging.ERROR)
logging.getLogger("PIL.PngImagePlugin").setLevel(logging.ERROR)
logging.getLogger("onnx-extended").setLevel(logging.ERROR)

Einsum: common code

try:
    from tensorflow import einsum as tf_einsum, convert_to_tensor
except ImportError:
    tf_einsum = None

try:
    from torch import einsum as torch_einsum, from_numpy
except ImportError:
    torch_einsum = None

def build_ort_einsum(equation, op_version=18):  # opset=13, 14, ...
    # Builds a model with a single Einsum node and runs it with onnxruntime.
    onx = make_model(
        make_graph(
            [make_node("Einsum", ["x", "y"], ["z"], equation=equation)],
            equation,
            [
                make_tensor_value_info("x", TensorProto.FLOAT, None),
                make_tensor_value_info("y", TensorProto.FLOAT, None),
            ],
            [make_tensor_value_info("z", TensorProto.FLOAT, None)],
        ),
        opset_imports=[make_opsetid("", op_version)],
        ir_version=9,
    )
    sess = InferenceSession(onx.SerializeToString(), providers=["CPUExecutionProvider"])
    return lambda x, y: sess.run(None, {"x": x, "y": y})

def build_ort_decomposed(equation, op_version=18):  # opset=13, 14, ...
    # Builds the decomposed version of the equation and runs it with onnxruntime.
    cache = _einsum(
        equation,
        numpy.float32,
        opset=op_version,
        optimize=True,
        verbose=True,
        runtime="python",
    )
    if not hasattr(cache, "onnx_"):
        cache.build()
    sess = InferenceSession(
        cache.onnx_.SerializeToString(), providers=["CPUExecutionProvider"]
    )
    return lambda x, y: sess.run(None, {"X0": x, "X1": y})

def loop_einsum_eq(fct, equation, xs, ys):
    for x, y in zip(xs, ys):
        fct(equation, x, y)


def loop_einsum_eq_th(fct, equation, xs, ys):
    for x, y in zip(xs, ys):
        fct(equation, x, y, nthread=-1)


def loop_einsum(fct, xs, ys):
    for x, y in zip(xs, ys):
        fct(x, y)

def timeit(stmt, ctx, dim, name):
    obs = measure_time(stmt, div_by_number=True, context=ctx, repeat=5, number=1)
    obs["dim"] = dim
    obs["fct"] = name
    return obs
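For readers without onnx_extended installed, the timing helper can be approximated with the standard library. The function `measure_sketch` below is a hypothetical stand-in, not the library's `measure_time`: only the `average` key is known to be used later in this example, and the other keys are assumptions made for illustration.

```python
import timeit
import numpy


def measure_sketch(stmt, context, repeat=5, number=1):
    """Hypothetical stand-in: times `stmt` evaluated with `context` as globals."""
    raw = timeit.repeat(stmt, globals=context, repeat=repeat, number=number)
    per_call = numpy.array(raw) / number  # time of one execution of stmt
    return {
        "average": float(per_call.mean()),
        "deviation": float(per_call.std()),
        "min_exec": float(per_call.min()),
        "max_exec": float(per_call.max()),
        "repeat": repeat,
        "number": number,
    }


ctx = {"xs": [numpy.ones((4, 4), dtype=numpy.float32)], "einsum": numpy.einsum}
obs = measure_sketch("einsum('ij,jk->ik', xs[0], xs[0])", ctx)
obs["dim"] = 4
obs["fct"] = "numpy.einsum"
print(sorted(obs))
```

Passing the statement as a string together with a context dictionary mirrors how the benchmark swaps `ctx["einsum"]` between implementations while keeping the timed statement unchanged.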

def benchmark_equation(equation):
    # equations
    ort_einsum = build_ort_einsum(equation)
    ort_einsum_decomposed = build_ort_decomposed(equation)
    res = []
    for dim in tqdm([8, 16, 32, 64, 100, 128, 200, 256]):  # , 500, 512]):
        if unit_test_going() and dim > 64:
            break
        xs = [numpy.random.rand(2, dim, 12, 64).astype(numpy.float32) for _ in range(5)]
        ys = [numpy.random.rand(2, dim, 12, 64).astype(numpy.float32) for _ in range(5)]

        # numpy
        ctx = dict(
            equation=equation,
            xs=xs,
            ys=ys,
            einsum=numpy.einsum,
            loop_einsum=loop_einsum,
            loop_einsum_eq=loop_einsum_eq,
            loop_einsum_eq_th=loop_einsum_eq_th,
        )
        obs = timeit(
            "loop_einsum_eq(einsum, equation, xs, ys)", ctx, dim, "numpy.einsum"
        )
        res.append(obs)

        # opt-einsum
        ctx["einsum"] = contract
        obs = timeit("loop_einsum_eq(einsum, equation, xs, ys)", ctx, dim, "opt-einsum")
        res.append(obs)

        # onnxruntime
        ctx["einsum"] = ort_einsum
        obs = timeit("loop_einsum(einsum, xs, ys)", ctx, dim, "ort-einsum")
        res.append(obs)

        # onnxruntime decomposed
        ctx["einsum"] = ort_einsum_decomposed
        obs = timeit("loop_einsum(einsum, xs, ys)", ctx, dim, "ort-dec")
        res.append(obs)

        if tf_einsum is not None:
            # tensorflow
            ctx["einsum"] = tf_einsum
            ctx["xs"] = [convert_to_tensor(x) for x in xs]
            ctx["ys"] = [convert_to_tensor(y) for y in ys]
            obs = timeit(
                "loop_einsum_eq(einsum, equation, xs, ys)", ctx, dim, "tf-einsum"
            )
            res.append(obs)

        if torch_einsum is not None:
            # torch
            ctx["einsum"] = torch_einsum
            ctx["xs"] = [from_numpy(x) for x in xs]
            ctx["ys"] = [from_numpy(y) for y in ys]
            obs = timeit(
                "loop_einsum_eq(einsum, equation, xs, ys)", ctx, dim, "torch-einsum"
            )
            res.append(obs)

    # Dataframes
    df = pandas.DataFrame(res)
    piv = df.pivot(index="dim", columns="fct", values="average")

    rs = piv.copy()
    for c in ["ort-einsum", "ort-dec", "tf-einsum", "torch-einsum", "opt-einsum"]:
        if c not in rs.columns:
            continue
        rs[c] = rs["numpy.einsum"] / rs[c]
    rs["numpy.einsum"] = 1.0

    # Graphs.
    _fig, ax = plt.subplots(1, 2, figsize=(14, 5))
    piv.plot(
        logx=True,
        logy=True,
        ax=ax[0],
        title=f"Einsum benchmark\n{equation} -- (2, N, 12, 64) lower better",
    )
    ax[0].legend(prop={"size": 9})
    rs.plot(
        logx=True,
        logy=True,
        ax=ax[1],
        title="Einsum Speedup, baseline=numpy\n%s -- (2, N, 12, 64)"
        " higher better" % equation,
    )
    ax[1].plot([min(rs.index), max(rs.index)], [0.5, 0.5], "g--")
    ax[1].plot([min(rs.index), max(rs.index)], [2.0, 2.0], "g--")
    ax[1].legend(prop={"size": 9})

    return df, rs, ax
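The speedup table built at the end of `benchmark_equation` may be easier to follow on a tiny example. The timings below are invented for illustration and are not measurements; only the column names `dim`, `fct` and `average` come from the code above.

```python
import pandas

# Invented timings (seconds), one row per (dimension, implementation).
records = [
    {"dim": 8, "fct": "numpy.einsum", "average": 0.004},
    {"dim": 8, "fct": "ort-einsum", "average": 0.002},
    {"dim": 16, "fct": "numpy.einsum", "average": 0.010},
    {"dim": 16, "fct": "ort-einsum", "average": 0.020},
]
df = pandas.DataFrame(records)

# One row per dimension, one column per implementation.
piv = df.pivot(index="dim", columns="fct", values="average")

# Speedup relative to numpy: values above 1 mean faster than numpy.
speedup = piv.copy()
for name in speedup.columns:
    if name != "numpy.einsum":
        speedup[name] = piv["numpy.einsum"] / piv[name]
speedup["numpy.einsum"] = 1.0
print(speedup)
```

With these made-up numbers the ratio is 2.0 for dimension 8 and 0.5 for dimension 16, which are the two levels drawn as dashed green lines on the speedup plot.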

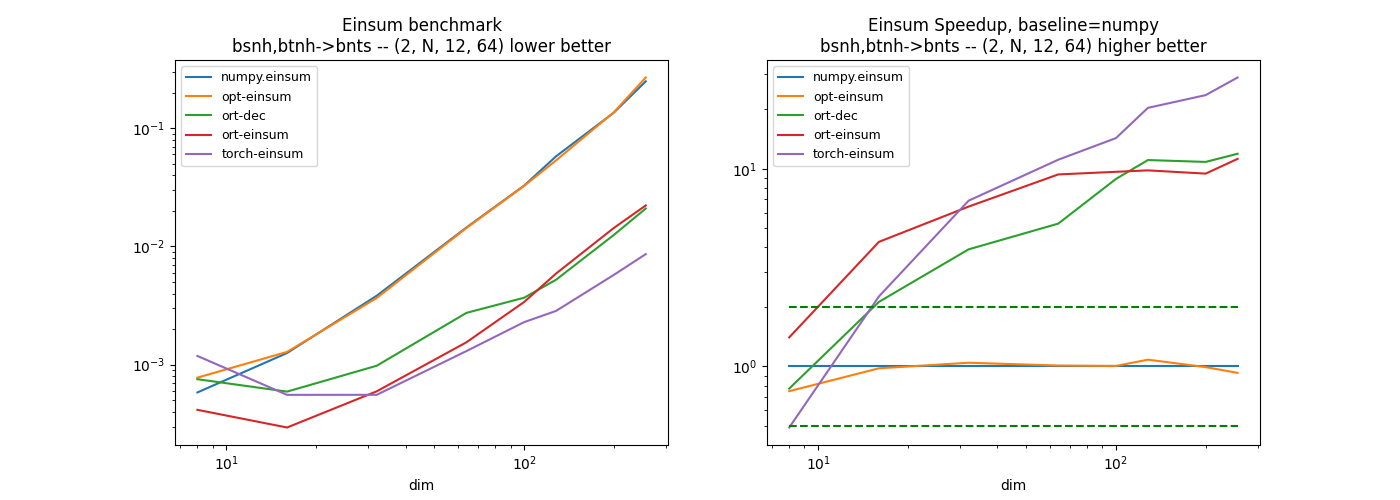

First equation: bsnh,btnh->bnts

The decomposition of this equation without einsum function gives the following.

0.0058 rtbest='bsnh,btnh->bnts': 0%| | 0/121 [00:00<?, ?it/s]
0.0057 rtbest='htnb,hsnb->hnst': 19%|█▉ | 23/121 [00:00<00:01, 76.54it/s]
0.0056 rtbest='snhb,sthb->shtn': 26%|██▌ | 31/121 [00:00<00:01, 77.33it/s]
0.0055 rtbest='sthb,snhb->shnt': 26%|██▌ | 31/121 [00:00<00:01, 77.33it/s]
0.0053 rtbest='nhtb,nstb->ntsh': 26%|██▌ | 31/121 [00:00<00:01, 77.33it/s]
0.0053 rtbest='thnb,tsnb->tnsh': 26%|██▌ | 31/121 [00:00<00:01, 77.33it/s]
0.0053 rtbest='thnb,tsnb->tnsh': 100%|██████████| 121/121 [00:01<00:00, 92.90it/s]
100%|██████████| 8/8 [00:08<00:00, 1.11s/it]
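The equations reported as rtbest in the log above look like the original equation with its letters renamed. The sketch below checks this for the last one, `thnb,tsnb->tnsh`, using a hypothetical helper `rename_equation` and a letter mapping inferred by hand. For numpy the two equations describe the same computation; why the decomposed graph runs at a different speed is not stated in the log, so no claim is made about it here.

```python
import numpy


def rename_equation(equation, mapping):
    """Hypothetical helper: renames the letters, keeps ',' and '->' as they are."""
    return "".join(mapping.get(c, c) for c in equation)


original = "bsnh,btnh->bnts"
# Mapping inferred by comparing the original equation with the logged one.
mapping = {"b": "t", "s": "h", "n": "n", "h": "b", "t": "s"}
renamed = rename_equation(original, mapping)
print(renamed)

rng = numpy.random.default_rng(0)
x = rng.random((2, 3, 4, 5))
y = rng.random((2, 6, 4, 5))

# Both equations sum over the last axis and produce the same output layout.
res_original = numpy.einsum(original, x, y)
res_renamed = numpy.einsum(renamed, x, y)
print(numpy.allclose(res_original, res_renamed))
```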

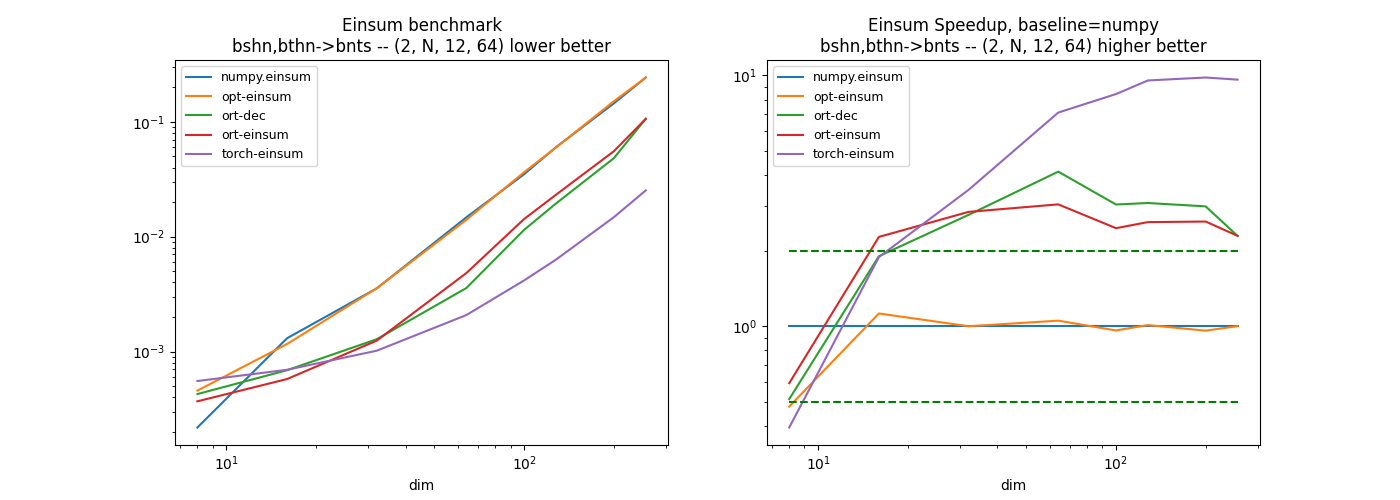

Second equation: bshn,bthn->bnts

The summation does not happen on the last axis but on the second-to-last one. Is it worth transposing before doing the summation? The decomposition of this equation without einsum function gives the following.

0.01 rtbest='bshn,bthn->bnts': 0%| | 0/121 [00:00<?, ?it/s]
0.0089 rtbest='bshn,bthn->bnts': 0%| | 0/121 [00:00<?, ?it/s]
0.0084 rtbest='bthn,bshn->bnst': 0%| | 0/121 [00:00<?, ?it/s]
0.0077 rtbest='bnht,bsht->btsn': 0%| | 0/121 [00:00<?, ?it/s]
0.0074 rtbest='bsht,bnht->btns': 0%| | 0/121 [00:00<?, ?it/s]
0.006 rtbest='btsh,bnsh->bhnt': 7%|▋ | 8/121 [00:00<00:01, 75.62it/s]
0.0059 rtbest='bhst,bnst->btnh': 14%|█▍ | 17/121 [00:00<00:01, 83.64it/s]
0.0056 rtbest='hnbs,htbs->hstn': 21%|██▏ | 26/121 [00:00<00:01, 86.15it/s]
0.0054 rtbest='ntbs,nhbs->nsht': 30%|██▉ | 36/121 [00:00<00:00, 90.20it/s]
0.0052 rtbest='tbhs,tnhs->tsnb': 69%|██████▊ | 83/121 [00:00<00:00, 73.45it/s]
0.0051 rtbest='nbst,nhst->nthb': 69%|██████▊ | 83/121 [00:01<00:00, 73.45it/s]
0.0051 rtbest='nbst,nhst->nthb': 100%|██████████| 121/121 [00:01<00:00, 90.89it/s]
100%|██████████| 8/8 [00:08<00:00, 1.11s/it]
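The question raised at the start of this section, whether to transpose first, can be illustrated with numpy alone. The sketch below moves the summed axis h to the last position and then reuses the first equation. It only checks that both routes give the same values; it does not measure which one is faster, and the shapes are small arbitrary values.

```python
import numpy

rng = numpy.random.default_rng(0)
x = rng.random((2, 3, 5, 4))  # axes b, s, h, n
y = rng.random((2, 6, 5, 4))  # axes b, t, h, n

# Direct evaluation: the summed axis h is the second-to-last one.
direct = numpy.einsum("bshn,bthn->bnts", x, y)

# Transpose first so that h becomes the last, contiguous axis...
xt = numpy.ascontiguousarray(x.transpose(0, 1, 3, 2))  # b, s, n, h
yt = numpy.ascontiguousarray(y.transpose(0, 1, 3, 2))  # b, t, n, h
# ...then sum over the last axis with the first equation.
via_transpose = numpy.einsum("bsnh,btnh->bnts", xt, yt)

print(numpy.allclose(direct, via_transpose))
```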

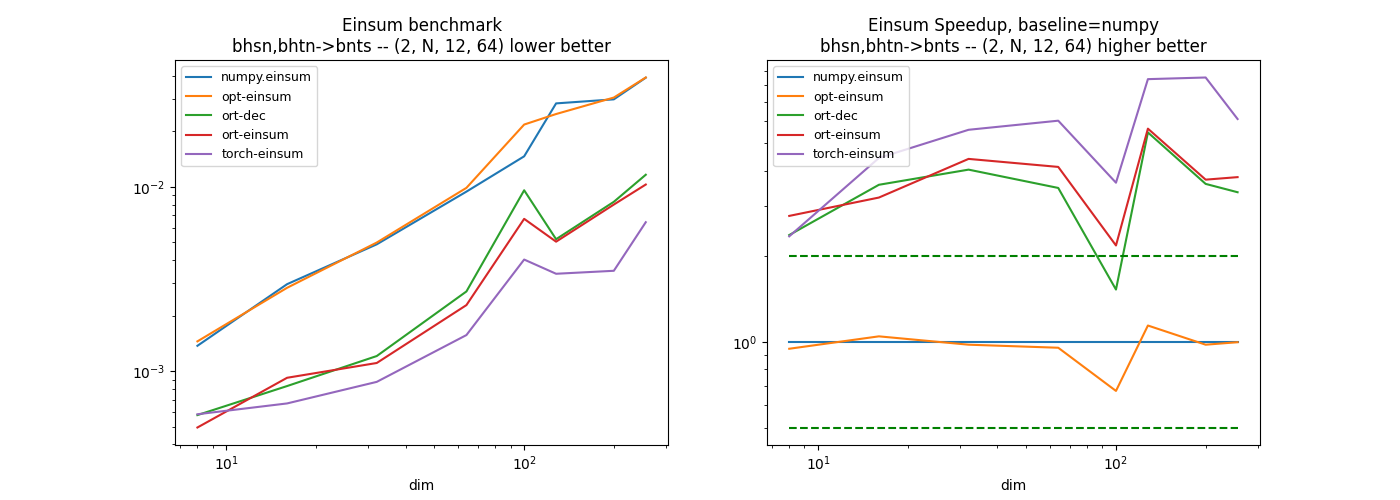

Third equation: bhsn,bhtn->bnts

The summation does not happen on the last axis but on the second one. It is worth transposing before multiplying. The decomposition of this equation without einsum function gives the following.

0.0063 rtbest='bhsn,bhtn->bnts': 0%| | 0/121 [00:00<?, ?it/s]
0.0058 rtbest='bhsn,bhtn->bnts': 0%| | 0/121 [00:00<?, ?it/s]
0.0057 rtbest='bsnt,bsht->bthn': 17%|█▋ | 20/121 [00:00<00:01, 87.44it/s]
0.0056 rtbest='hbts,hbns->hsnt': 17%|█▋ | 20/121 [00:00<00:01, 87.44it/s]
0.0056 rtbest='nbts,nbhs->nsht': 35%|███▍ | 42/121 [00:00<00:00, 100.77it/s]
0.0055 rtbest='sbnt,sbht->sthn': 35%|███▍ | 42/121 [00:00<00:00, 100.77it/s]
0.0055 rtbest='sbnt,sbht->sthn': 100%|██████████| 121/121 [00:01<00:00, 107.10it/s]
100%|██████████| 8/8 [00:01<00:00, 4.13it/s]

Conclusion

pytorch seems quite efficient on these examples. The custom implementation was a way to investigate the implementation of einsum and find some ways to optimize it.

# dfs is expected to be the list of dataframes returned by
# benchmark_equation, one per equation.
merged = pandas.concat(dfs)
name = "einsum"
merged.to_csv(f"plot_{name}.csv", index=False)
merged.to_excel(f"plot_{name}.xlsx", index=False)
plt.savefig(f"plot_{name}.png")

# plt.show()

Total running time of the script: (0 minutes 27.981 seconds)

![Decomposition graph of bsnh,btnh->bnts: each input goes through expand_dims and transpose, then batch_dot with sum_axes=(4,), transpose and squeeze](../_images/graphviz-cdc43acfdee558e1b6d234d80b302d61f79a0f6e.png)

![Decomposition graph of bshn,bthn->bnts: each input goes through expand_dims and transpose, then batch_dot with sum_axes=(4,), transpose and squeeze](../_images/graphviz-3d0946eb4d07f94685689d674b1ec90084efaef4.png)

![Decomposition graph of bhsn,bhtn->bnts: each input goes through expand_dims and transpose, then batch_dot with sum_axes=(4,), transpose and squeeze](../_images/graphviz-762f1f093a7b6cbe196fc9341d16778670a97232.png)