Note

Go to the end to download the full example code.

Measuring performance about Gemm with onnxruntime¶

The benchmark measures the performance of Gemm for different types and configuration. That includes a custom operator only available on CUDA calling function cublasLtMatmul. This function offers many options.

import pprint

import platform

from itertools import product

import numpy

from tqdm import tqdm

import matplotlib.pyplot as plt

from pandas import DataFrame, pivot_table

from onnx import TensorProto

from onnx.helper import (

make_model,

make_node,

make_graph,

make_tensor_value_info,

make_opsetid,

)

from onnx.checker import check_model

from onnx.numpy_helper import from_array

from onnx.reference import ReferenceEvaluator

from onnxruntime import InferenceSession, SessionOptions, get_available_providers

from onnxruntime.capi._pybind_state import (

OrtValue as C_OrtValue,

OrtDevice as C_OrtDevice,

)

from onnxruntime.capi.onnxruntime_pybind11_state import (

Fail,

NotImplemented,

InvalidGraph,

InvalidArgument,

)

try:

from onnx_array_api.plotting.text_plot import onnx_simple_text_plot

except ImportError:

onnx_simple_text_plot = str

try:

from onnx_extended.reference import CReferenceEvaluator

except ImportError:

CReferenceEvaluator = ReferenceEvaluator

from onnx_extended.args import get_parsed_args

from onnx_extended.ext_test_case import unit_test_going, measure_time

try:

from onnx_extended.validation.cuda.cuda_example_py import get_device_prop

from onnx_extended.ortops.tutorial.cuda import get_ort_ext_libs

has_cuda = True

except ImportError:

def get_device_prop():

return {"name": "CPU"}

def get_ort_ext_libs():

return None

has_cuda = False

default_dims = (

"32,32,32;64,64,64;128,128,128;256,256,256;"

"400,400,400;512,512,512;1024,1024,1024"

)

if has_cuda:

prop = get_device_prop()

if prop.get("major", 0) >= 7:

default_dims += ";2048,2048,2048;4096,4096,4096"

if prop.get("major", 0) >= 9:

default_dims += ";16384,16384,16384"

script_args = get_parsed_args(

"plot_bench_gemm_ort",

description=__doc__,

dims=(

"32,32,32;64,64,64" if unit_test_going() else default_dims,

"square matrix dimensions to try, comma separated values",

),

types=(

"FLOAT" if unit_test_going() else "FLOAT8E4M3FN,FLOAT,FLOAT16,BFLOAT16",

"element type to teest",

),

number=2 if unit_test_going() else 4,

repeat=2 if unit_test_going() else 10,

warmup=2 if unit_test_going() else 5,

expose="repeat,number,warmup",

)

Device properties¶

if has_cuda:

properties = get_device_prop()

pprint.pprint(properties)

else:

properties = {"major": 0}

{'clockRate': 0,

'computeMode': 0,

'concurrentKernels': 1,

'isMultiGpuBoard': 0,

'major': 8,

'maxThreadsPerBlock': 1024,

'minor': 9,

'multiProcessorCount': 24,

'name': 'NVIDIA GeForce RTX 4060 Laptop GPU',

'sharedMemPerBlock': 49152,

'totalConstMem': 65536,

'totalGlobalMem': 8585281536}

Model to benchmark¶

It includes one Gemm. The operator changes. It can the regular Gemm, a custom Gemm from domain com.microsoft or a custom implementation from domain onnx_extended.ortops.tutorial.cuda.

def create_model(

mat_type=TensorProto.FLOAT, provider="CUDAExecutionProvider", domain="com.microsoft"

):

A = make_tensor_value_info("A", mat_type, [None, None])

B = make_tensor_value_info("B", mat_type, [None, None])

outputs = [make_tensor_value_info("C", mat_type, [None, None])]

inits = []

if domain != "":

if provider != "CUDAExecutionProvider":

return None

f8 = False

if domain == "com.microsoft":

op_name = "GemmFloat8"

computeType = "CUBLAS_COMPUTE_32F"

node_output = ["C"]

elif mat_type == TensorProto.FLOAT:

op_name = "CustomGemmFloat"

computeType = "CUBLAS_COMPUTE_32F_FAST_TF32"

node_output = ["C"]

elif mat_type == TensorProto.FLOAT16:

op_name = "CustomGemmFloat16"

computeType = "CUBLAS_COMPUTE_32F"

node_output = ["C"]

elif mat_type in (TensorProto.FLOAT8E4M3FN, TensorProto.FLOAT8E5M2):

f8 = True

op_name = "CustomGemmFloat8E4M3FN"

computeType = "CUBLAS_COMPUTE_32F"

node_output = ["C"]

outputs = [

make_tensor_value_info("C", TensorProto.FLOAT16, [None, None]),

]

inits.append(from_array(numpy.array([1], dtype=numpy.float32), name="I"))

else:

return None

node_kw = dict(

alpha=1.0,

transB=1,

domain=domain,

computeType=computeType,

fastAccumulationMode=1,

rowMajor=0 if op_name.startswith("CustomGemmFloat") else 1,

)

node_kw["name"] = (

f"{mat_type}.{len(node_output)}.{len(outputs)}."

f"{domain}..{node_kw['rowMajor']}.."

f"{node_kw['fastAccumulationMode']}..{node_kw['computeType']}.."

f"{f8}"

)

node_inputs = ["A", "B"]

if f8:

node_inputs.append("")

node_inputs.extend(["I"] * 3)

nodes = [make_node(op_name, node_inputs, node_output, **node_kw)]

else:

nodes = [

make_node("Gemm", ["A", "B"], ["C"], transA=1, beta=0.0),

]

graph = make_graph(nodes, "a", [A, B], outputs, inits)

if mat_type < 16:

# regular type

opset, ir = 18, 8

else:

opset, ir = 19, 9

onnx_model = make_model(

graph,

opset_imports=[

make_opsetid("", opset),

make_opsetid("com.microsoft", 1),

make_opsetid("onnx_extended.ortops.tutorial.cuda", 1),

],

ir_version=ir,

)

check_model(onnx_model)

return onnx_model

print(onnx_simple_text_plot(create_model()))

opset: domain='' version=18

opset: domain='com.microsoft' version=1

opset: domain='onnx_extended.ortops.tutorial.cuda' version=1

input: name='A' type=dtype('float32') shape=['', '']

input: name='B' type=dtype('float32') shape=['', '']

GemmFloat8[com.microsoft](A, B, alpha=1.00, computeType=b'CUBLAS_COMPUTE_32F', fastAccumulationMode=1, rowMajor=1, transB=1) -> C

output: name='C' type=dtype('float32') shape=['', '']

A model to cast into anytype. numpy does not support float 8. onnxruntime is used to cast a float array into any type. It must be called with tensor of type OrtValue.

def create_cast(to, cuda=False):

A = make_tensor_value_info("A", TensorProto.FLOAT, [None, None])

C = make_tensor_value_info("C", to, [None, None])

if cuda:

nodes = [

make_node("Cast", ["A"], ["Cc"], to=to),

make_node("MemcpyFromHost", ["Cc"], ["C"]),

]

else:

nodes = [make_node("Cast", ["A"], ["C"], to=to)]

graph = make_graph(nodes, "a", [A], [C])

if to < 16:

# regular type

opset, ir = 18, 8

else:

opset, ir = 19, 9

onnx_model = make_model(

graph, opset_imports=[make_opsetid("", opset)], ir_version=ir

)

if not cuda:

# OpType: MemcpyFromHost

check_model(onnx_model)

return onnx_model

print(onnx_simple_text_plot(create_cast(TensorProto.FLOAT16)))

opset: domain='' version=18

input: name='A' type=dtype('float32') shape=['', '']

Cast(A, to=10) -> C

output: name='C' type=dtype('float16') shape=['', '']

Performance¶

The benchmark will run the following configurations.

types = [getattr(TensorProto, a) for a in script_args.types.split(",")]

engine = [InferenceSession, CReferenceEvaluator]

providers = [

["CUDAExecutionProvider", "CPUExecutionProvider"],

["CPUExecutionProvider"],

]

# M, N, K

# we use multiple of 8, otherwise, float8 does not work.

dims = [[int(i) for i in line.split(",")] for line in script_args.dims.split(";")]

domains = ["onnx_extended.ortops.tutorial.cuda", "", "com.microsoft"]

Let’s cache the matrices involved.

def to_ort_value(m):

device = C_OrtDevice(C_OrtDevice.cpu(), C_OrtDevice.default_memory(), 0)

ort_value = C_OrtValue.ortvalue_from_numpy(m, device)

return ort_value

def cached_inputs(dims, types):

matrices = {}

matrices_cuda = {}

pbar = tqdm(list(product(dims, types)))

for dim, tt in pbar:

m, n, k = dim

pbar.set_description(f"t={tt} dim={dim}")

for i, j in [(m, k), (k, n), (k, m)]:

if (tt, i, j) in matrices:

continue

# CPU

try:

sess = InferenceSession(

create_cast(tt).SerializeToString(),

providers=["CPUExecutionProvider"],

)

cpu = True

except (InvalidGraph, InvalidArgument, NotImplemented):

# not support by this version of onnxruntime

cpu = False

if cpu:

vect = (numpy.random.randn(i, j) * 10).astype(numpy.float32)

ov = to_ort_value(vect)

ovtt = sess._sess.run_with_ort_values({"A": ov}, ["C"], None)[0]

matrices[tt, i, j] = ovtt

else:

continue

# CUDA

if "CUDAExecutionProvider" not in get_available_providers():

# No CUDA

continue

sess = InferenceSession(

create_cast(tt, cuda=True).SerializeToString(),

providers=["CUDAExecutionProvider", "CPUExecutionProvider"],

)

vect = (numpy.random.randn(i, j) * 10).astype(numpy.float32)

ov = to_ort_value(vect)

ovtt = sess._sess.run_with_ort_values({"A": ov}, ["C"], None)[0]

matrices_cuda[tt, i, j] = ovtt

return matrices, matrices_cuda

matrices, matrices_cuda = cached_inputs(dims, types)

print(f"{len(matrices)} matrices were created.")

0%| | 0/36 [00:00<?, ?it/s]

t=17 dim=[32, 32, 32]: 0%| | 0/36 [00:00<?, ?it/s]

t=1 dim=[32, 32, 32]: 0%| | 0/36 [00:00<?, ?it/s]

t=10 dim=[32, 32, 32]: 0%| | 0/36 [00:00<?, ?it/s]

t=10 dim=[32, 32, 32]: 8%|▊ | 3/36 [00:00<00:01, 23.11it/s]

t=16 dim=[32, 32, 32]: 8%|▊ | 3/36 [00:00<00:01, 23.11it/s]

t=17 dim=[64, 64, 64]: 8%|▊ | 3/36 [00:00<00:01, 23.11it/s]

t=1 dim=[64, 64, 64]: 8%|▊ | 3/36 [00:00<00:01, 23.11it/s]

t=10 dim=[64, 64, 64]: 8%|▊ | 3/36 [00:00<00:01, 23.11it/s]

t=16 dim=[64, 64, 64]: 8%|▊ | 3/36 [00:00<00:01, 23.11it/s]

t=16 dim=[64, 64, 64]: 22%|██▏ | 8/36 [00:00<00:00, 33.41it/s]

t=17 dim=[128, 128, 128]: 22%|██▏ | 8/36 [00:00<00:00, 33.41it/s]

t=1 dim=[128, 128, 128]: 22%|██▏ | 8/36 [00:00<00:00, 33.41it/s]

t=10 dim=[128, 128, 128]: 22%|██▏ | 8/36 [00:00<00:00, 33.41it/s]

t=16 dim=[128, 128, 128]: 22%|██▏ | 8/36 [00:00<00:00, 33.41it/s]

t=16 dim=[128, 128, 128]: 33%|███▎ | 12/36 [00:00<00:00, 34.31it/s]

t=17 dim=[256, 256, 256]: 33%|███▎ | 12/36 [00:00<00:00, 34.31it/s]

t=1 dim=[256, 256, 256]: 33%|███▎ | 12/36 [00:00<00:00, 34.31it/s]

t=10 dim=[256, 256, 256]: 33%|███▎ | 12/36 [00:00<00:00, 34.31it/s]

t=16 dim=[256, 256, 256]: 33%|███▎ | 12/36 [00:00<00:00, 34.31it/s]

t=16 dim=[256, 256, 256]: 44%|████▍ | 16/36 [00:00<00:00, 34.83it/s]

t=17 dim=[400, 400, 400]: 44%|████▍ | 16/36 [00:00<00:00, 34.83it/s]

t=1 dim=[400, 400, 400]: 44%|████▍ | 16/36 [00:00<00:00, 34.83it/s]

t=10 dim=[400, 400, 400]: 44%|████▍ | 16/36 [00:00<00:00, 34.83it/s]

t=16 dim=[400, 400, 400]: 44%|████▍ | 16/36 [00:00<00:00, 34.83it/s]

t=16 dim=[400, 400, 400]: 56%|█████▌ | 20/36 [00:00<00:00, 27.29it/s]

t=17 dim=[512, 512, 512]: 56%|█████▌ | 20/36 [00:00<00:00, 27.29it/s]

t=1 dim=[512, 512, 512]: 56%|█████▌ | 20/36 [00:00<00:00, 27.29it/s]

t=10 dim=[512, 512, 512]: 56%|█████▌ | 20/36 [00:00<00:00, 27.29it/s]

t=10 dim=[512, 512, 512]: 64%|██████▍ | 23/36 [00:00<00:00, 25.76it/s]

t=16 dim=[512, 512, 512]: 64%|██████▍ | 23/36 [00:00<00:00, 25.76it/s]

t=17 dim=[1024, 1024, 1024]: 64%|██████▍ | 23/36 [00:00<00:00, 25.76it/s]

t=1 dim=[1024, 1024, 1024]: 64%|██████▍ | 23/36 [00:00<00:00, 25.76it/s]

t=1 dim=[1024, 1024, 1024]: 72%|███████▏ | 26/36 [00:01<00:00, 19.18it/s]

t=10 dim=[1024, 1024, 1024]: 72%|███████▏ | 26/36 [00:01<00:00, 19.18it/s]

t=16 dim=[1024, 1024, 1024]: 72%|███████▏ | 26/36 [00:01<00:00, 19.18it/s]

t=17 dim=[2048, 2048, 2048]: 72%|███████▏ | 26/36 [00:01<00:00, 19.18it/s]

t=17 dim=[2048, 2048, 2048]: 81%|████████ | 29/36 [00:01<00:00, 12.47it/s]

t=1 dim=[2048, 2048, 2048]: 81%|████████ | 29/36 [00:01<00:00, 12.47it/s]

t=10 dim=[2048, 2048, 2048]: 81%|████████ | 29/36 [00:01<00:00, 12.47it/s]

t=10 dim=[2048, 2048, 2048]: 86%|████████▌ | 31/36 [00:02<00:00, 8.70it/s]

t=16 dim=[2048, 2048, 2048]: 86%|████████▌ | 31/36 [00:02<00:00, 8.70it/s]

t=17 dim=[4096, 4096, 4096]: 86%|████████▌ | 31/36 [00:02<00:00, 8.70it/s]

t=17 dim=[4096, 4096, 4096]: 92%|█████████▏| 33/36 [00:03<00:00, 4.06it/s]

t=1 dim=[4096, 4096, 4096]: 92%|█████████▏| 33/36 [00:03<00:00, 4.06it/s]

t=1 dim=[4096, 4096, 4096]: 94%|█████████▍| 34/36 [00:04<00:00, 2.86it/s]

t=10 dim=[4096, 4096, 4096]: 94%|█████████▍| 34/36 [00:04<00:00, 2.86it/s]

t=10 dim=[4096, 4096, 4096]: 97%|█████████▋| 35/36 [00:05<00:00, 2.11it/s]

t=16 dim=[4096, 4096, 4096]: 97%|█████████▋| 35/36 [00:05<00:00, 2.11it/s]

t=16 dim=[4096, 4096, 4096]: 100%|██████████| 36/36 [00:06<00:00, 1.73it/s]

t=16 dim=[4096, 4096, 4096]: 100%|██████████| 36/36 [00:06<00:00, 5.63it/s]

36 matrices were created.

Let’s run the benchmark

def rendering_obs(obs, dim, number, repeat, domain, provider, internal_time):

stype = {

TensorProto.FLOAT: "f32",

TensorProto.FLOAT16: "f16",

TensorProto.BFLOAT16: "bf16",

TensorProto.INT8: "i8",

TensorProto.INT16: "i16",

TensorProto.INT32: "i32",

TensorProto.UINT32: "u32",

TensorProto.FLOAT8E4M3FN: "e4m3fn",

TensorProto.FLOAT8E5M2: "e5m2",

}[tt]

obs.update(

dict(

engine={"InferenceSession": "ort", "CReferenceEvaluator": "np"}[

engine.__name__

],

stype=stype,

type=f"{stype}",

M=dim[0],

N=dim[1],

K=dim[2],

cost=numpy.prod(dim) * 4,

cost_s=f"{numpy.prod(dim) * 4}-{dim[0]}x{dim[1]}x{dim[2]}",

repeat=repeat,

number=number,

domain={

"": "ORT",

"com.microsoft": "COM",

"onnx_extended.ortops.tutorial.cuda": "EXT",

}[domain],

provider={

"CPUExecutionProvider": "cpu",

"CUDAExecutionProvider": "cuda",

}[provider[0]],

platform=platform.processor(),

intime=internal_time,

)

)

return obs

opts = SessionOptions()

r = get_ort_ext_libs()

if r is not None:

opts.register_custom_ops_library(r[0])

data = []

errors = []

pbar = tqdm(list(product(types, engine, providers, dims, domains)))

for tt, engine, provider, dim, domain in pbar:

if (

tt in {TensorProto.FLOAT8E4M3FN, TensorProto.FLOAT8E5M2}

and properties.get("major", 0) < 9

):

# f8 not available

if provider[0] == "CPUExecutionProvider":

continue

errors.append(

f"f8 not available, major={properties.get('major', 0)}, "

f"tt={tt}, provider={provider!r}, domain={domain!r}."

)

continue

elif provider[0] == "CPUExecutionProvider" and max(dim) > 2000:

# too long

continue

if max(dim) <= 200:

repeat, number = script_args.repeat * 4, script_args.number * 4

elif max(dim) <= 256:

repeat, number = script_args.repeat * 2, script_args.number * 2

else:

repeat, number = script_args.repeat, script_args.number

onx = create_model(tt, provider=provider[0], domain=domain)

if onx is None:

if provider[0] == "CPUExecutionProvider":

continue

errors.append(

f"No model for tt={tt}, provider={provider!r}, domain={domain!r}."

)

continue

with open(f"plot_bench_gemm_ort_{tt}_{domain}.onnx", "wb") as f:

f.write(onx.SerializeToString())

k1 = (tt, dim[2], dim[0])

k2 = (tt, dim[2], dim[1])

if k1 not in matrices:

errors.append(f"Key k1={k1!r} not in matrices.")

continue

if k2 not in matrices:

errors.append(f"Key k2={k2!r} not in matrices.")

continue

pbar.set_description(f"t={tt} e={engine.__name__} p={provider[0][:4]} dim={dim}")

if engine == CReferenceEvaluator:

if (

domain != ""

or max(dim) > 256

or provider != ["CPUExecutionProvider"]

or tt not in [TensorProto.FLOAT, TensorProto.FLOAT16]

):

# All impossible or slow cases.

continue

if tt == TensorProto.FLOAT16 and max(dim) > 50:

repeat, number = 2, 2

feeds = {"A": matrices[k1].numpy(), "B": matrices[k2].numpy()}

sess = engine(onx)

sess.run(None, feeds)

obs = measure_time(

lambda sess=sess, feeds=feeds: sess.run(None, feeds),

repeat=repeat,

number=number,

)

elif engine == InferenceSession:

if provider[0] not in get_available_providers():

errors.append(f"provider={provider[0]} is missing")

continue

try:

sess = engine(onx.SerializeToString(), opts, providers=provider)

except (NotImplemented, InvalidGraph, Fail) as e:

# not implemented

errors.append((tt, engine.__class__.__name__, provider, domain, e))

continue

the_feeds = (

{"A": matrices[k1], "B": matrices[k2]}

if provider == ["CPUExecutionProvider"]

else {"A": matrices_cuda[k1], "B": matrices_cuda[k2]}

)

out_names = ["C"]

# warmup

for _i in range(script_args.warmup):

sess._sess.run_with_ort_values(the_feeds, out_names, None)[0]

# benchamrk

times = []

def fct_benchmarked(

sess=sess, times=times, out_names=out_names, the_feeds=the_feeds

):

got = sess._sess.run_with_ort_values(the_feeds, out_names, None)

if len(got) > 1:

times.append(got[1])

obs = measure_time(fct_benchmarked, repeat=repeat, number=number)

internal_time = None

if times:

np_times = [t.numpy() for t in times]

internal_time = (sum(np_times) / len(times))[0]

else:

errors.append(f"unknown engine={engine}")

continue

# improves the rendering

obs = rendering_obs(obs, dim, number, repeat, domain, provider, internal_time)

data.append(obs)

if unit_test_going() and len(data) >= 2:

break

0%| | 0/432 [00:00<?, ?it/s]

t=1 e=InferenceSession p=CUDA dim=[32, 32, 32]: 0%| | 0/432 [00:00<?, ?it/s]

t=1 e=InferenceSession p=CUDA dim=[32, 32, 32]: 25%|██▌ | 109/432 [00:02<00:08, 37.45it/s]

t=1 e=InferenceSession p=CUDA dim=[32, 32, 32]: 25%|██▌ | 109/432 [00:02<00:08, 37.45it/s]

t=1 e=InferenceSession p=CUDA dim=[32, 32, 32]: 25%|██▌ | 109/432 [00:03<00:08, 37.45it/s]

t=1 e=InferenceSession p=CUDA dim=[64, 64, 64]: 25%|██▌ | 109/432 [00:03<00:08, 37.45it/s]

t=1 e=InferenceSession p=CUDA dim=[64, 64, 64]: 25%|██▌ | 109/432 [00:05<00:08, 37.45it/s]

t=1 e=InferenceSession p=CUDA dim=[64, 64, 64]: 26%|██▌ | 113/432 [00:05<00:17, 18.48it/s]

t=1 e=InferenceSession p=CUDA dim=[64, 64, 64]: 26%|██▌ | 113/432 [00:05<00:17, 18.48it/s]

t=1 e=InferenceSession p=CUDA dim=[128, 128, 128]: 26%|██▌ | 113/432 [00:05<00:17, 18.48it/s]

t=1 e=InferenceSession p=CUDA dim=[128, 128, 128]: 27%|██▋ | 115/432 [00:06<00:25, 12.34it/s]

t=1 e=InferenceSession p=CUDA dim=[128, 128, 128]: 27%|██▋ | 115/432 [00:06<00:25, 12.34it/s]

t=1 e=InferenceSession p=CUDA dim=[128, 128, 128]: 27%|██▋ | 116/432 [00:07<00:26, 11.95it/s]

t=1 e=InferenceSession p=CUDA dim=[128, 128, 128]: 27%|██▋ | 116/432 [00:07<00:26, 11.95it/s]

t=1 e=InferenceSession p=CUDA dim=[256, 256, 256]: 27%|██▋ | 116/432 [00:07<00:26, 11.95it/s]

t=1 e=InferenceSession p=CUDA dim=[256, 256, 256]: 27%|██▋ | 118/432 [00:07<00:28, 10.86it/s]

t=1 e=InferenceSession p=CUDA dim=[256, 256, 256]: 27%|██▋ | 118/432 [00:07<00:28, 10.86it/s]

t=1 e=InferenceSession p=CUDA dim=[256, 256, 256]: 27%|██▋ | 118/432 [00:07<00:28, 10.86it/s]

t=1 e=InferenceSession p=CUDA dim=[400, 400, 400]: 27%|██▋ | 118/432 [00:07<00:28, 10.86it/s]

t=1 e=InferenceSession p=CUDA dim=[400, 400, 400]: 28%|██▊ | 121/432 [00:07<00:27, 11.25it/s]

t=1 e=InferenceSession p=CUDA dim=[400, 400, 400]: 28%|██▊ | 121/432 [00:07<00:27, 11.25it/s]

t=1 e=InferenceSession p=CUDA dim=[400, 400, 400]: 28%|██▊ | 121/432 [00:07<00:27, 11.25it/s]

t=1 e=InferenceSession p=CUDA dim=[512, 512, 512]: 28%|██▊ | 121/432 [00:07<00:27, 11.25it/s]

t=1 e=InferenceSession p=CUDA dim=[512, 512, 512]: 29%|██▊ | 124/432 [00:07<00:26, 11.83it/s]

t=1 e=InferenceSession p=CUDA dim=[512, 512, 512]: 29%|██▊ | 124/432 [00:07<00:26, 11.83it/s]

t=1 e=InferenceSession p=CUDA dim=[512, 512, 512]: 29%|██▊ | 124/432 [00:07<00:26, 11.83it/s]

t=1 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 29%|██▊ | 124/432 [00:07<00:26, 11.83it/s]

t=1 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 29%|██▉ | 127/432 [00:08<00:25, 11.86it/s]

t=1 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 29%|██▉ | 127/432 [00:08<00:25, 11.86it/s]

t=1 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 29%|██▉ | 127/432 [00:08<00:25, 11.86it/s]

t=1 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 29%|██▉ | 127/432 [00:08<00:25, 11.86it/s]

t=1 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 30%|███ | 130/432 [00:08<00:32, 9.32it/s]

t=1 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 30%|███ | 130/432 [00:08<00:32, 9.32it/s]

t=1 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 30%|███ | 131/432 [00:09<00:41, 7.19it/s]

t=1 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 30%|███ | 131/432 [00:09<00:41, 7.19it/s]

t=1 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 30%|███ | 131/432 [00:09<00:41, 7.19it/s]

t=1 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 31%|███ | 133/432 [00:11<01:44, 2.87it/s]

t=1 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 31%|███ | 133/432 [00:11<01:44, 2.87it/s]

t=1 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 31%|███ | 134/432 [00:13<02:44, 1.81it/s]

t=1 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 31%|███ | 134/432 [00:13<02:44, 1.81it/s]

t=1 e=InferenceSession p=CPUE dim=[32, 32, 32]: 31%|███ | 134/432 [00:13<02:44, 1.81it/s]

t=1 e=InferenceSession p=CPUE dim=[64, 64, 64]: 31%|███ | 134/432 [00:13<02:44, 1.81it/s]

t=1 e=InferenceSession p=CPUE dim=[128, 128, 128]: 31%|███ | 134/432 [00:13<02:44, 1.81it/s]

t=1 e=InferenceSession p=CPUE dim=[128, 128, 128]: 33%|███▎ | 143/432 [00:13<01:04, 4.48it/s]

t=1 e=InferenceSession p=CPUE dim=[256, 256, 256]: 33%|███▎ | 143/432 [00:13<01:04, 4.48it/s]

t=1 e=InferenceSession p=CPUE dim=[400, 400, 400]: 33%|███▎ | 143/432 [00:13<01:04, 4.48it/s]

t=1 e=InferenceSession p=CPUE dim=[512, 512, 512]: 33%|███▎ | 143/432 [00:13<01:04, 4.48it/s]

t=1 e=InferenceSession p=CPUE dim=[512, 512, 512]: 35%|███▌ | 152/432 [00:13<00:35, 7.98it/s]

t=1 e=InferenceSession p=CPUE dim=[1024, 1024, 1024]: 35%|███▌ | 152/432 [00:13<00:35, 7.98it/s]

t=1 e=InferenceSession p=CPUE dim=[1024, 1024, 1024]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[32, 32, 32]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[64, 64, 64]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[128, 128, 128]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[256, 256, 256]: 36%|███▌ | 156/432 [00:14<00:32, 8.38it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[256, 256, 256]: 46%|████▋ | 200/432 [00:14<00:08, 27.22it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[400, 400, 400]: 46%|████▋ | 200/432 [00:14<00:08, 27.22it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[512, 512, 512]: 46%|████▋ | 200/432 [00:14<00:08, 27.22it/s]

t=1 e=CReferenceEvaluator p=CPUE dim=[1024, 1024, 1024]: 46%|████▋ | 200/432 [00:14<00:08, 27.22it/s]

t=10 e=InferenceSession p=CUDA dim=[32, 32, 32]: 46%|████▋ | 200/432 [00:14<00:08, 27.22it/s]

t=10 e=InferenceSession p=CUDA dim=[32, 32, 32]: 50%|█████ | 217/432 [00:16<00:10, 19.73it/s]

t=10 e=InferenceSession p=CUDA dim=[32, 32, 32]: 50%|█████ | 217/432 [00:16<00:10, 19.73it/s]

t=10 e=InferenceSession p=CUDA dim=[32, 32, 32]: 50%|█████ | 217/432 [00:16<00:10, 19.73it/s]

t=10 e=InferenceSession p=CUDA dim=[64, 64, 64]: 50%|█████ | 217/432 [00:16<00:10, 19.73it/s]

t=10 e=InferenceSession p=CUDA dim=[64, 64, 64]: 50%|█████ | 217/432 [00:17<00:10, 19.73it/s]

t=10 e=InferenceSession p=CUDA dim=[64, 64, 64]: 51%|█████ | 221/432 [00:18<00:18, 11.43it/s]

t=10 e=InferenceSession p=CUDA dim=[64, 64, 64]: 51%|█████ | 221/432 [00:18<00:18, 11.43it/s]

t=10 e=InferenceSession p=CUDA dim=[128, 128, 128]: 51%|█████ | 221/432 [00:18<00:18, 11.43it/s]

t=10 e=InferenceSession p=CUDA dim=[128, 128, 128]: 51%|█████ | 221/432 [00:19<00:18, 11.43it/s]

t=10 e=InferenceSession p=CUDA dim=[128, 128, 128]: 52%|█████▏ | 224/432 [00:19<00:27, 7.54it/s]

t=10 e=InferenceSession p=CUDA dim=[128, 128, 128]: 52%|█████▏ | 224/432 [00:19<00:27, 7.54it/s]

t=10 e=InferenceSession p=CUDA dim=[256, 256, 256]: 52%|█████▏ | 224/432 [00:19<00:27, 7.54it/s]

t=10 e=InferenceSession p=CUDA dim=[256, 256, 256]: 52%|█████▏ | 226/432 [00:20<00:28, 7.19it/s]

t=10 e=InferenceSession p=CUDA dim=[256, 256, 256]: 52%|█████▏ | 226/432 [00:20<00:28, 7.19it/s]

t=10 e=InferenceSession p=CUDA dim=[256, 256, 256]: 52%|█████▏ | 226/432 [00:20<00:28, 7.19it/s]

t=10 e=InferenceSession p=CUDA dim=[400, 400, 400]: 52%|█████▏ | 226/432 [00:20<00:28, 7.19it/s]

t=10 e=InferenceSession p=CUDA dim=[400, 400, 400]: 53%|█████▎ | 229/432 [00:20<00:26, 7.80it/s]

t=10 e=InferenceSession p=CUDA dim=[400, 400, 400]: 53%|█████▎ | 229/432 [00:20<00:26, 7.80it/s]

t=10 e=InferenceSession p=CUDA dim=[400, 400, 400]: 53%|█████▎ | 229/432 [00:20<00:26, 7.80it/s]

t=10 e=InferenceSession p=CUDA dim=[512, 512, 512]: 53%|█████▎ | 229/432 [00:20<00:26, 7.80it/s]

t=10 e=InferenceSession p=CUDA dim=[512, 512, 512]: 54%|█████▎ | 232/432 [00:20<00:23, 8.65it/s]

t=10 e=InferenceSession p=CUDA dim=[512, 512, 512]: 54%|█████▎ | 232/432 [00:20<00:23, 8.65it/s]

t=10 e=InferenceSession p=CUDA dim=[512, 512, 512]: 54%|█████▎ | 232/432 [00:20<00:23, 8.65it/s]

t=10 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 54%|█████▎ | 232/432 [00:20<00:23, 8.65it/s]

t=10 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 54%|█████▍ | 235/432 [00:20<00:21, 9.28it/s]

t=10 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 54%|█████▍ | 235/432 [00:20<00:21, 9.28it/s]

t=10 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 54%|█████▍ | 235/432 [00:20<00:21, 9.28it/s]

t=10 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 54%|█████▍ | 235/432 [00:20<00:21, 9.28it/s]

t=10 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 55%|█████▌ | 238/432 [00:21<00:21, 8.88it/s]

t=10 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 55%|█████▌ | 238/432 [00:21<00:21, 8.88it/s]

t=10 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 55%|█████▌ | 238/432 [00:21<00:21, 8.88it/s]

t=10 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 56%|█████▌ | 240/432 [00:21<00:20, 9.16it/s]

t=10 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 56%|█████▌ | 240/432 [00:21<00:20, 9.16it/s]

t=10 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 56%|█████▌ | 240/432 [00:22<00:20, 9.16it/s]

t=10 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 56%|█████▌ | 242/432 [00:23<00:56, 3.39it/s]

t=10 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 56%|█████▌ | 242/432 [00:23<00:56, 3.39it/s]

t=10 e=InferenceSession p=CPUE dim=[32, 32, 32]: 56%|█████▌ | 242/432 [00:23<00:56, 3.39it/s]

t=10 e=InferenceSession p=CPUE dim=[64, 64, 64]: 56%|█████▌ | 242/432 [00:23<00:56, 3.39it/s]

t=10 e=InferenceSession p=CPUE dim=[128, 128, 128]: 56%|█████▌ | 242/432 [00:23<00:56, 3.39it/s]

t=10 e=InferenceSession p=CPUE dim=[128, 128, 128]: 58%|█████▊ | 251/432 [00:23<00:25, 7.23it/s]

t=10 e=InferenceSession p=CPUE dim=[256, 256, 256]: 58%|█████▊ | 251/432 [00:23<00:25, 7.23it/s]

t=10 e=InferenceSession p=CPUE dim=[400, 400, 400]: 58%|█████▊ | 251/432 [00:23<00:25, 7.23it/s]

t=10 e=InferenceSession p=CPUE dim=[512, 512, 512]: 58%|█████▊ | 251/432 [00:23<00:25, 7.23it/s]

t=10 e=InferenceSession p=CPUE dim=[512, 512, 512]: 60%|██████ | 260/432 [00:23<00:14, 11.94it/s]

t=10 e=InferenceSession p=CPUE dim=[1024, 1024, 1024]: 60%|██████ | 260/432 [00:23<00:14, 11.94it/s]

t=10 e=InferenceSession p=CPUE dim=[1024, 1024, 1024]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[32, 32, 32]: 61%|██████ | 264/432 [00:24<00:14, 11.29it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[32, 32, 32]: 69%|██████▉ | 299/432 [00:24<00:03, 38.51it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[64, 64, 64]: 69%|██████▉ | 299/432 [00:24<00:03, 38.51it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[128, 128, 128]: 69%|██████▉ | 299/432 [00:24<00:03, 38.51it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[256, 256, 256]: 69%|██████▉ | 299/432 [00:24<00:03, 38.51it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[400, 400, 400]: 69%|██████▉ | 299/432 [00:24<00:03, 38.51it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[400, 400, 400]: 72%|███████▏ | 311/432 [00:24<00:03, 37.75it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[512, 512, 512]: 72%|███████▏ | 311/432 [00:24<00:03, 37.75it/s]

t=10 e=CReferenceEvaluator p=CPUE dim=[1024, 1024, 1024]: 72%|███████▏ | 311/432 [00:24<00:03, 37.75it/s]

t=16 e=InferenceSession p=CUDA dim=[32, 32, 32]: 72%|███████▏ | 311/432 [00:24<00:03, 37.75it/s]

t=16 e=InferenceSession p=CUDA dim=[32, 32, 32]: 75%|███████▌ | 326/432 [00:24<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[32, 32, 32]: 75%|███████▌ | 326/432 [00:24<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[64, 64, 64]: 75%|███████▌ | 326/432 [00:24<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[64, 64, 64]: 75%|███████▌ | 326/432 [00:24<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[128, 128, 128]: 75%|███████▌ | 326/432 [00:24<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[128, 128, 128]: 75%|███████▌ | 326/432 [00:25<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[256, 256, 256]: 75%|███████▌ | 326/432 [00:25<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[256, 256, 256]: 75%|███████▌ | 326/432 [00:25<00:02, 49.48it/s]

t=16 e=InferenceSession p=CUDA dim=[256, 256, 256]: 78%|███████▊ | 337/432 [00:25<00:02, 46.37it/s]

t=16 e=InferenceSession p=CUDA dim=[400, 400, 400]: 78%|███████▊ | 337/432 [00:25<00:02, 46.37it/s]

t=16 e=InferenceSession p=CUDA dim=[400, 400, 400]: 78%|███████▊ | 337/432 [00:25<00:02, 46.37it/s]

t=16 e=InferenceSession p=CUDA dim=[512, 512, 512]: 78%|███████▊ | 337/432 [00:25<00:02, 46.37it/s]

t=16 e=InferenceSession p=CUDA dim=[512, 512, 512]: 78%|███████▊ | 337/432 [00:25<00:02, 46.37it/s]

t=16 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 78%|███████▊ | 337/432 [00:25<00:02, 46.37it/s]

t=16 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 78%|███████▊ | 337/432 [00:25<00:02, 46.37it/s]

t=16 e=InferenceSession p=CUDA dim=[1024, 1024, 1024]: 80%|████████ | 346/432 [00:25<00:01, 50.51it/s]

t=16 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 80%|████████ | 346/432 [00:25<00:01, 50.51it/s]

t=16 e=InferenceSession p=CUDA dim=[2048, 2048, 2048]: 80%|████████ | 346/432 [00:25<00:01, 50.51it/s]

t=16 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 80%|████████ | 346/432 [00:25<00:01, 50.51it/s]

t=16 e=InferenceSession p=CUDA dim=[4096, 4096, 4096]: 80%|████████ | 346/432 [00:26<00:01, 50.51it/s]

t=16 e=InferenceSession p=CPUE dim=[32, 32, 32]: 80%|████████ | 346/432 [00:26<00:01, 50.51it/s]

t=16 e=InferenceSession p=CPUE dim=[32, 32, 32]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=InferenceSession p=CPUE dim=[64, 64, 64]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=InferenceSession p=CPUE dim=[128, 128, 128]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=InferenceSession p=CPUE dim=[256, 256, 256]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=InferenceSession p=CPUE dim=[400, 400, 400]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=InferenceSession p=CPUE dim=[512, 512, 512]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=InferenceSession p=CPUE dim=[1024, 1024, 1024]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[32, 32, 32]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[64, 64, 64]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[128, 128, 128]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[256, 256, 256]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[400, 400, 400]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[512, 512, 512]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[1024, 1024, 1024]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[2048, 2048, 2048]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CUDA dim=[4096, 4096, 4096]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[32, 32, 32]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[64, 64, 64]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[128, 128, 128]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[256, 256, 256]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[400, 400, 400]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[512, 512, 512]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[1024, 1024, 1024]: 82%|████████▏ | 355/432 [00:26<00:03, 22.20it/s]

t=16 e=CReferenceEvaluator p=CPUE dim=[1024, 1024, 1024]: 100%|██████████| 432/432 [00:26<00:00, 16.39it/s]

Results¶

0 1 2 3 4

average 0.004419 0.000295 0.002894 0.00026 0.00257

deviation 0.001128 0.000136 0.000282 0.000117 0.00025

min_exec 0.003069 0.000103 0.002479 0.000151 0.002214

max_exec 0.007449 0.000719 0.003572 0.000655 0.00326

repeat 40 40 40 40 40

number 16 16 16 16 16

ttime 0.176769 0.011816 0.115764 0.010414 0.102804

context_size 64 64 64 64 64

warmup_time 0.010165 0.001027 0.003924 0.001295 0.003034

engine ort ort ort ort ort

stype f32 f32 f32 f32 f32

type f32 f32 f32 f32 f32

M 32 32 64 64 128

N 32 32 64 64 128

K 32 32 64 64 128

cost 131072 131072 1048576 1048576 8388608

cost_s 131072-32x32x32 131072-32x32x32 1048576-64x64x64 1048576-64x64x64 8388608-128x128x128

domain EXT ORT EXT ORT EXT

provider cuda cuda cuda cuda cuda

platform x86_64 x86_64 x86_64 x86_64 x86_64

intime None None None None None

The errors¶

1/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

2/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

3/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

4/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

5/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

6/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

7/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

8/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

9/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

10/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

11/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

12/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

13/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

14/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

15/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

16/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

17/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

18/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

19/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

20/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

21/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

22/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

23/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

24/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

25/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

26/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

27/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

28/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

29/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

30/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

31/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

32/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

33/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

34/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

35/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

36/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

37/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

38/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

39/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

40/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

41/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

42/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

43/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

44/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

45/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

46/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

47/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

48/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

49/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

50/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

51/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

52/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

53/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain=''.

54/106-f8 not available, major=8, tt=17, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='com.microsoft'.

55/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

56/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

57/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

58/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

59/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

60/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

61/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

62/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

63/106-(1, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("1.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float),"B": tensor(float),) -> ("C": tensor(float),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

64/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

65/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

66/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

67/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

68/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

69/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

70/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

71/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

72/106-(10, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("10.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(float16),"B": tensor(float16),) -> ("C": tensor(float16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

73/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

74/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

75/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

76/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

77/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

78/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

79/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

80/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

81/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

82/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

83/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

84/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

85/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

86/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

87/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

88/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

89/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

90/106-(16, 'type', ['CUDAExecutionProvider', 'CPUExecutionProvider'], 'com.microsoft', InvalidGraph('[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. In Node, ("16.1.1.com.microsoft..1..1..CUBLAS_COMPUTE_32F..False", GemmFloat8, "com.microsoft", -1) : ("A": tensor(bfloat16),"B": tensor(bfloat16),) -> ("C": tensor(bfloat16),) , Error Unrecognized attribute: computeType for operator GemmFloat8'))

91/106-(16, 'type', ['CPUExecutionProvider'], '', NotImplemented("[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Gemm(13) node with name ''"))

92/106-(16, 'type', ['CPUExecutionProvider'], '', NotImplemented("[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Gemm(13) node with name ''"))

93/106-(16, 'type', ['CPUExecutionProvider'], '', NotImplemented("[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Gemm(13) node with name ''"))

94/106-(16, 'type', ['CPUExecutionProvider'], '', NotImplemented("[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Gemm(13) node with name ''"))

95/106-(16, 'type', ['CPUExecutionProvider'], '', NotImplemented("[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Gemm(13) node with name ''"))

96/106-(16, 'type', ['CPUExecutionProvider'], '', NotImplemented("[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Gemm(13) node with name ''"))

97/106-(16, 'type', ['CPUExecutionProvider'], '', NotImplemented("[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Gemm(13) node with name ''"))

98/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

99/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

100/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

101/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

102/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

103/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

104/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

105/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

106/106-No model for tt=16, provider=['CUDAExecutionProvider', 'CPUExecutionProvider'], domain='onnx_extended.ortops.tutorial.cuda'.

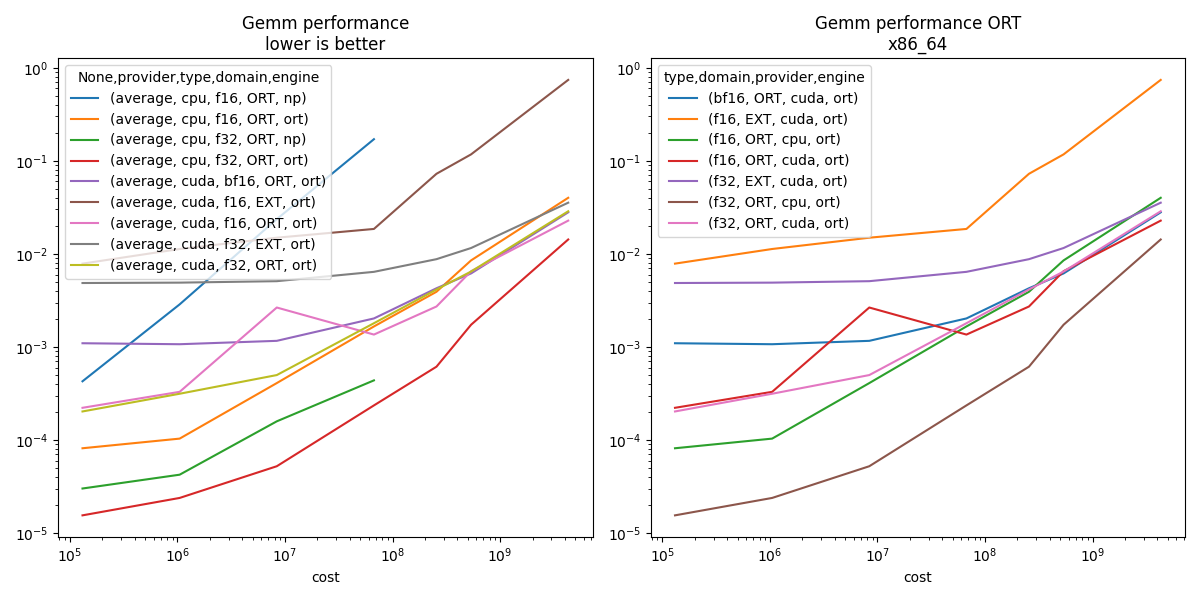

Summary¶

piv = pivot_table(

df,

index=["cost"],

columns=["provider", "type", "domain", "engine"],

values=["average", "intime"],

)

piv.reset_index(drop=False).to_excel("plot_bench_gemm_ort_summary.xlsx")

piv.reset_index(drop=False).to_csv("plot_bench_gemm_ort_summary.csv")

print("summary")

print(piv)

piv

summary

average

provider cpu cuda

type f16 f32 bf16 f16 f32

domain ORT ORT ORT EXT ORT EXT ORT

engine np ort np ort ort ort ort ort ort

cost

131072 0.000137 0.000030 0.000013 0.000016 0.000141 0.002116 0.000191 0.004419 0.000295

1048576 0.000780 0.000053 0.000025 0.000041 0.000156 0.002311 0.000206 0.002894 0.000260

8388608 0.005971 0.000056 0.000065 0.000076 0.000172 0.002482 0.000210 0.002570 0.000275

67108864 0.047454 0.000232 0.003040 0.000180 0.000211 0.002497 0.000240 0.002483 0.000259

256000000 NaN 0.000610 NaN 0.000606 0.000298 0.002658 0.000296 0.002739 0.000445

536870912 NaN 0.001189 NaN 0.000750 0.000401 0.002585 0.000382 0.002921 0.000614

4294967296 NaN 0.008606 NaN 0.008399 0.001175 0.003889 0.001099 0.004283 0.001761

34359738368 NaN NaN NaN NaN 0.003892 0.006272 0.003668 0.009919 0.007221

274877906944 NaN NaN NaN NaN 0.017962 0.022510 0.020687 0.052536 0.041368

With the dimensions.

pivs = pivot_table(

df,

index=["cost_s"],

columns=["provider", "type", "domain", "engine"],

values=["average", "intime"],

)

print(pivs)

average

provider cpu cuda

type f16 f32 bf16 f16 f32

domain ORT ORT ORT EXT ORT EXT ORT

engine np ort np ort ort ort ort ort ort

cost_s

1048576-64x64x64 0.000780 0.000053 0.000025 0.000041 0.000156 0.002311 0.000206 0.002894 0.000260

131072-32x32x32 0.000137 0.000030 0.000013 0.000016 0.000141 0.002116 0.000191 0.004419 0.000295

256000000-400x400x400 NaN 0.000610 NaN 0.000606 0.000298 0.002658 0.000296 0.002739 0.000445

274877906944-4096x4096x4096 NaN NaN NaN NaN 0.017962 0.022510 0.020687 0.052536 0.041368

34359738368-2048x2048x2048 NaN NaN NaN NaN 0.003892 0.006272 0.003668 0.009919 0.007221

4294967296-1024x1024x1024 NaN 0.008606 NaN 0.008399 0.001175 0.003889 0.001099 0.004283 0.001761

536870912-512x512x512 NaN 0.001189 NaN 0.000750 0.000401 0.002585 0.000382 0.002921 0.000614

67108864-256x256x256 0.047454 0.000232 0.003040 0.000180 0.000211 0.002497 0.000240 0.002483 0.000259

8388608-128x128x128 0.005971 0.000056 0.000065 0.000076 0.000172 0.002482 0.000210 0.002570 0.000275

plot

dfi = df[

df.type.isin({"f32", "f16", "bf16", "e4m3fn", "e5m2"}) & df.engine.isin({"ort"})

]

pivi = pivot_table(

dfi,

index=["cost"],

columns=["type", "domain", "provider", "engine"],

values="average",

)

fig, ax = plt.subplots(1, 2, figsize=(12, 6))

piv.plot(ax=ax[0], title="Gemm performance\nlower is better", logx=True, logy=True)

if pivi.shape[0] > 0:

pivi.plot(

ax=ax[1],

title=f"Gemm performance ORT\n{platform.processor()}",

logx=True,

logy=True,

)

fig.tight_layout()

fig.savefig("plot_bench_gemm_ort.png")

Total running time of the script: (0 minutes 34.464 seconds)