Note

Go to the end to download the full example code.

Search images with deep learning (torch)¶

Images are usually very different if we compare them at pixel level but that’s quite different if we look at them after they were processed by a deep learning model. We convert each image into a feature vector extracted from an intermediate layer of the network.

Get a pre-trained model¶

We choose the model described in paper SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size.

import os

import matplotlib.pyplot as plt

from sklearn.neighbors import NearestNeighbors

from torchvision import datasets, transforms, models

from torch.utils.data import DataLoader, ConcatDataset

from mlinsights.ext_test_case import unzip_files

from mlinsights.plotting import plot_gallery_images

from torchvision.models.squeezenet import SqueezeNet1_0_Weights

model = models.squeezenet1_0(weights=SqueezeNet1_0_Weights.IMAGENET1K_V1)

model

Downloading: "https://download.pytorch.org/models/squeezenet1_0-b66bff10.pth" to ~/.cache/torch/hub/checkpoints/squeezenet1_0-b66bff10.pth

0%| | 0.00/4.78M [00:00<?, ?B/s]

10%|█ | 512k/4.78M [00:00<00:01, 4.44MB/s]

24%|██▎ | 1.12M/4.78M [00:00<00:00, 4.95MB/s]

34%|███▍ | 1.62M/4.78M [00:00<00:00, 4.99MB/s]

47%|████▋ | 2.25M/4.78M [00:00<00:00, 5.14MB/s]

63%|██████▎ | 3.00M/4.78M [00:00<00:00, 5.70MB/s]

76%|███████▌ | 3.62M/4.78M [00:00<00:00, 5.87MB/s]

89%|████████▉ | 4.25M/4.78M [00:00<00:00, 5.79MB/s]

100%|██████████| 4.78M/4.78M [00:00<00:00, 5.56MB/s]

SqueezeNet(

(features): Sequential(

(0): Conv2d(3, 96, kernel_size=(7, 7), stride=(2, 2))

(1): ReLU(inplace=True)

(2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)

(3): Fire(

(squeeze): Conv2d(96, 16, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(16, 64, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(16, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

(4): Fire(

(squeeze): Conv2d(128, 16, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(16, 64, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(16, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

(5): Fire(

(squeeze): Conv2d(128, 32, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(32, 128, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(32, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

(6): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)

(7): Fire(

(squeeze): Conv2d(256, 32, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(32, 128, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(32, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

(8): Fire(

(squeeze): Conv2d(256, 48, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(48, 192, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(48, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

(9): Fire(

(squeeze): Conv2d(384, 48, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(48, 192, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(48, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

(10): Fire(

(squeeze): Conv2d(384, 64, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(64, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

(11): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)

(12): Fire(

(squeeze): Conv2d(512, 64, kernel_size=(1, 1), stride=(1, 1))

(squeeze_activation): ReLU(inplace=True)

(expand1x1): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))

(expand1x1_activation): ReLU(inplace=True)

(expand3x3): Conv2d(64, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(expand3x3_activation): ReLU(inplace=True)

)

)

(classifier): Sequential(

(0): Dropout(p=0.5, inplace=False)

(1): Conv2d(512, 1000, kernel_size=(1, 1), stride=(1, 1))

(2): ReLU(inplace=True)

(3): AdaptiveAvgPool2d(output_size=(1, 1))

)

)

The model is stored here:

path = os.path.join(

os.environ.get("USERPROFILE", os.environ.get("HOME", ".")),

".cache",

"torch",

"checkpoints",

)

if os.path.exists(path):

res = os.listdir(path)

else:

res = ["not found", path]

res

['not found', '~/.cache/torch/checkpoints']

pytorch‘s design relies on two methods forward and backward which implement the propagation and backpropagation of the gradient, the structure is not known and could even be dyanmic. That’s why it is difficult to define a number of layers.

len(model.features), len(model.classifier)

(13, 4)

Images¶

We collect images from pixabay.

Raw images¶

if not os.path.exists("simages/category"):

os.makedirs("simages/category")

url = "https://github.com/sdpython/mlinsights/raw/ref/_doc/examples/data/dog-cat-pixabay.zip"

files = unzip_files(url, where_to="simages/category")

if not files:

raise FileNotFoundError(f"No images where unzipped from {url!r}.")

len(files), files[0]

(31, 'simages/category/cat-1151519__480.jpg')

plot_gallery_images(files[:2])

array([<Axes: >, <Axes: >, <Axes: >, <Axes: >], dtype=object)

trans = transforms.Compose(

[

transforms.Resize((224, 224)), # essayer avec 224 seulement

transforms.CenterCrop(224),

transforms.ToTensor(),

]

)

imgs = datasets.ImageFolder("simages", trans)

imgs

Dataset ImageFolder

Number of datapoints: 31

Root location: simages

StandardTransform

Transform: Compose(

Resize(size=(224, 224), interpolation=bilinear, max_size=None, antialias=True)

CenterCrop(size=(224, 224))

ToTensor()

)

dataloader = DataLoader(imgs, batch_size=1, shuffle=False, num_workers=1)

dataloader

<torch.utils.data.dataloader.DataLoader object at 0x79fb09ed2720>

img_seq = iter(dataloader)

img, cl = next(img_seq)

(<class 'torch.Tensor'>, <class 'torch.Tensor'>)

array = img.numpy().transpose((2, 3, 1, 0))

array.shape

(224, 224, 3, 1)

plt.imshow(array[:, :, :, 0])

plt.axis("off")

(np.float64(-0.5), np.float64(223.5), np.float64(223.5), np.float64(-0.5))

(np.float64(-0.5), np.float64(223.5), np.float64(223.5), np.float64(-0.5))

torch implements optimized function to load and process images.

trans = transforms.Compose(

[

transforms.Resize((224, 224)), # essayer avec 224 seulement

transforms.RandomRotation((-10, 10), expand=True),

transforms.CenterCrop(224),

transforms.ToTensor(),

]

)

imgs = datasets.ImageFolder("simages", trans)

dataloader = DataLoader(imgs, batch_size=1, shuffle=True, num_workers=1)

img_seq = iter(dataloader)

imgs = [img[0] for i, img in zip(range(2), img_seq)]

array([<Axes: >, <Axes: >, <Axes: >, <Axes: >], dtype=object)

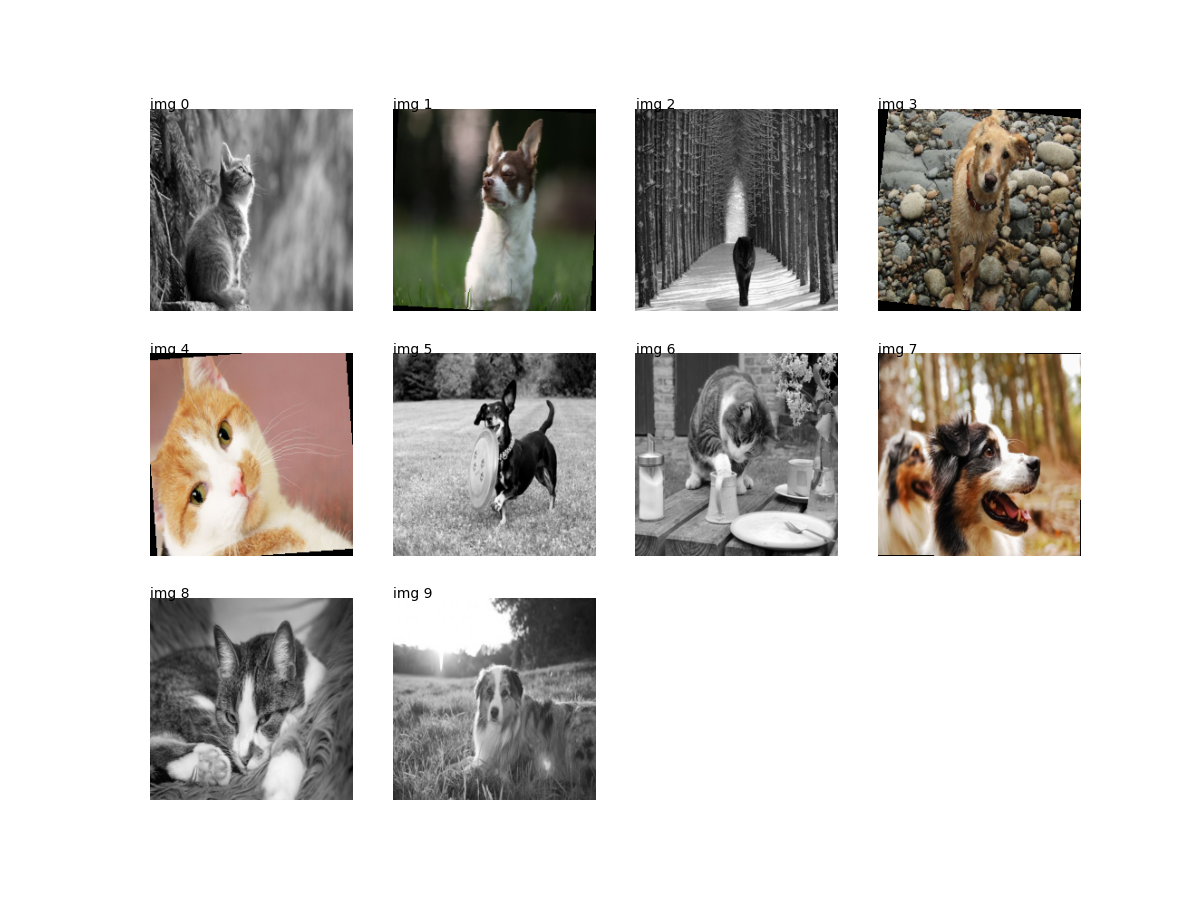

We can multiply the data by implementing a custom sampler or just concatenate loaders.

trans1 = transforms.Compose(

[

transforms.Resize((224, 224)), # essayer avec 224 seulement

transforms.RandomRotation((-10, 10), expand=True),

transforms.CenterCrop(224),

transforms.ToTensor(),

]

)

trans2 = transforms.Compose(

[

transforms.Resize((224, 224)), # essayer avec 224 seulement

transforms.Grayscale(num_output_channels=3),

transforms.CenterCrop(224),

transforms.ToTensor(),

]

)

imgs1 = datasets.ImageFolder("simages", trans1)

imgs2 = datasets.ImageFolder("simages", trans2)

dataloader = DataLoader(

ConcatDataset([imgs1, imgs2]), batch_size=1, shuffle=True, num_workers=1

)

img_seq = iter(dataloader)

imgs = [img[0] for i, img in zip(range(10), img_seq)]

array([[<Axes: >, <Axes: >, <Axes: >, <Axes: >],

[<Axes: >, <Axes: >, <Axes: >, <Axes: >],

[<Axes: >, <Axes: >, <Axes: >, <Axes: >]], dtype=object)

Which leaves 52 images to process out of 61 = 31*2 (the folder contains 31 images).

len(list(img_seq))

52

Search among images¶

We use the class SearchEnginePredictionImages.

The idea of the search engine¶

The deep network is able to classify images coming from a competition called ImageNet which was trained to classify different images. But still, the network has 88 layers which slightly transform the images into classification results. We assume the last layers contains information which allows the network to classify into objects: it is less related to the images than the content of it. In particular, we would like that an image with a daark background does not necessarily return images with a dark background.

# We reshape an image into *(224x224)* which is the size the network

# ingests. We propagate the inputs until the layer just before the last

# one. Its output will be considered as the *featurized image*. We do that

# for a specific set of images called the *neighbors*. When a new image

# comes up, we apply the same process and find the closest images among

# the set of neighbors.

model = models.squeezenet1_0(weights=SqueezeNet1_0_Weights.IMAGENET1K_V1)

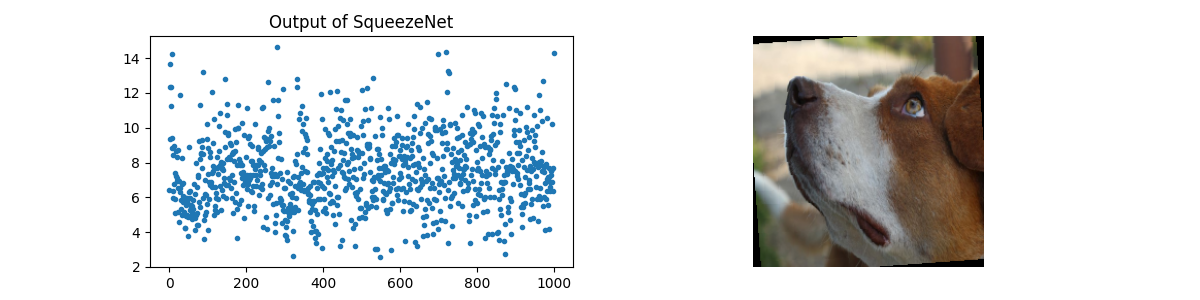

The model outputs the probability for each class.

res = model.forward(imgs[1])

res.shape

torch.Size([1, 1000])

res.detach().numpy().ravel()[:10]

array([4.347286 , 7.350151 , 7.131137 , 6.8547697, 5.1110325, 6.897387 ,

6.8151937, 6.966685 , 7.0494695, 3.8092496], dtype=float32)

(np.float64(-0.5), np.float64(223.5), np.float64(223.5), np.float64(-0.5))

We have features for one image. We build the neighbors, the output for each image in the training datasets.

trans = transforms.Compose(

[transforms.Resize((224, 224)), transforms.CenterCrop(224), transforms.ToTensor()]

)

imgs = datasets.ImageFolder("simages", trans)

dataloader = DataLoader(imgs, batch_size=1, shuffle=False, num_workers=1)

img_seq = iter(dataloader)

imgs = [img[0] for img in img_seq]

all_outputs = [model.forward(img).detach().numpy().ravel() for img in imgs]

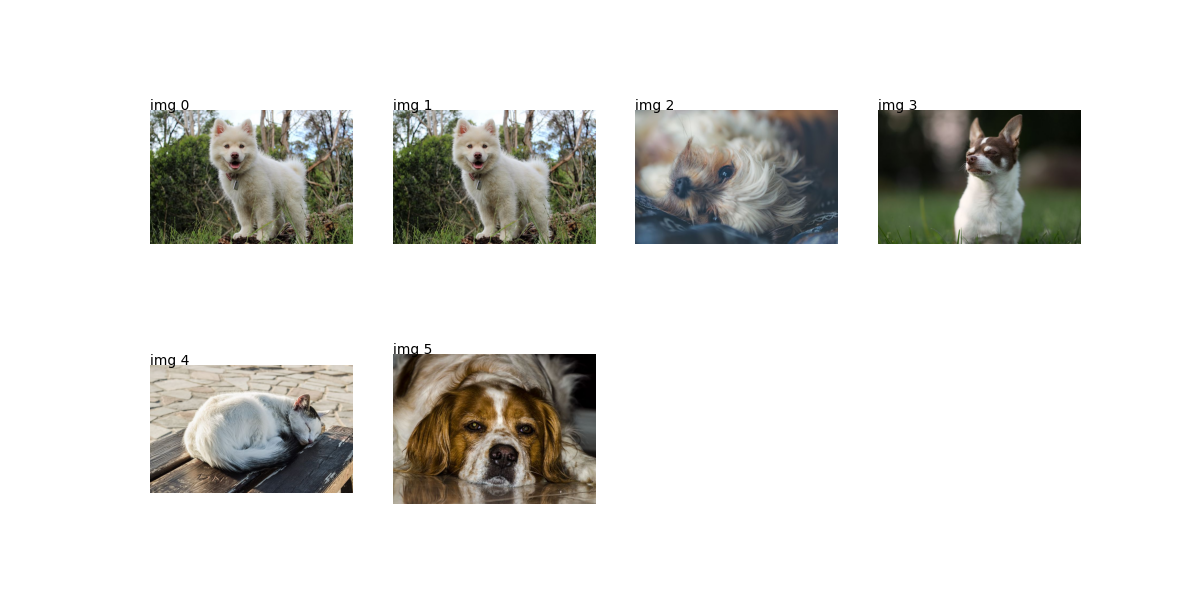

We extract the neighbors for a new image.

one_output = model.forward(imgs[5]).detach().numpy().ravel()

score, index = knn.kneighbors([one_output])

score, index

(array([[20.06299591, 57.36677933, 69.52404785, 69.79970551, 70.69257355]]), array([[5, 1, 0, 9, 2]]))

We need to retrieve images for indexes stored in index.

names = os.listdir("simages/category")

names = [os.path.join("simages/category", n) for n in names if ".zip" not in n]

disp = [names[5]] + [names[i] for i in index.ravel()]

disp

['simages/category/dachshund-2035700__480.jpg', 'simages/category/dachshund-2035700__480.jpg', 'simages/category/shotlanskogo-2934720__480.jpg', 'simages/category/cat-2603300__480.jpg', 'simages/category/dog-2684073__480.jpg', 'simages/category/cat-2917592__480.jpg']

We check the first one is exactly the same as the searched image.

plot_gallery_images(disp)

array([[<Axes: >, <Axes: >, <Axes: >, <Axes: >],

[<Axes: >, <Axes: >, <Axes: >, <Axes: >]], dtype=object)

It is possible to access intermediate layers output however it means rewriting the method forward to capture it: Accessing intermediate layers of a pretrained network forward?.

Going further¶

The original neural network has not been changed and was chosen to be small (88 layers). Other options are available for better performances. The imported model can be also be trained on a classification problem if there is such information to leverage. Even if the model was trained on millions of images, a couple of thousands are enough to train the last layers. The model can also be trained as long as there exists a way to compute a gradient. We could imagine to label the result of this search engine and train the model on pairs of images ranked in the other.

We can use the pairwise

transform

(example of code:

ranking.py). For every

pair , we tell if the search engine should have

(

) or the order order

(

).

is the features produced by the neural

network :

. We train a classifier on the

database:

A training algorithm based on a gradient will have to propagate the gradient:

Total running time of the script: (0 minutes 8.544 seconds)