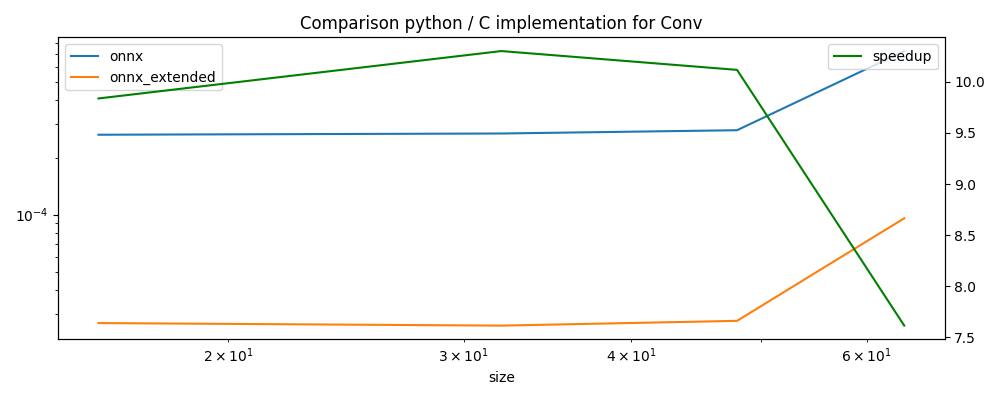

Using C implementation of operator Conv#

onnx-extended includes a C++ implementation of operator Conv that is much faster than the Python implementation available in package onnx. This implementation is automatically available through class onnx_extended.reference.CReferenceEvaluator.

The following example compares the processing time for three runtimes.

Creation of a simple model#

import numpy as np
import matplotlib.pyplot as plt
from pandas import DataFrame
from tqdm import tqdm
from onnx import TensorProto
from onnx.helper import (
    make_graph,
    make_model,
    make_node,
    make_opsetid,
    make_tensor_value_info,
)
from onnx.reference import ReferenceEvaluator
from onnxruntime import InferenceSession
from onnx_extended.ext_test_case import measure_time, unit_test_going
from onnx_extended.reference import CReferenceEvaluator

X = make_tensor_value_info("X", TensorProto.FLOAT, [None, None, None, None])
Y = make_tensor_value_info("Y", TensorProto.FLOAT, [None, None, None, None])
B = make_tensor_value_info("B", TensorProto.FLOAT, [None, None, None, None])
W = make_tensor_value_info("W", TensorProto.FLOAT, [None, None, None, None])
node = make_node(
    "Conv",
    ["X", "W", "B"],
    ["Y"],
    pads=[1, 1, 1, 1],
    dilations=[1, 1],
    strides=[2, 2],
)
graph = make_graph([node], "g", [X, W, B], [Y])
onnx_model = make_model(graph, opset_imports=[make_opsetid("", 18)], ir_version=8)

ReferenceEvaluator and CReferenceEvaluator#

Let’s first check that both evaluators return the same output.

sH, sW = 64, 64
X = np.arange(sW * sH).reshape((1, 1, sH, sW)).astype(np.float32)
W = np.ones((1, 1, 3, 3), dtype=np.float32)
B = np.array([[[[0]]]], dtype=np.float32)

sess1 = ReferenceEvaluator(onnx_model)
sess2 = CReferenceEvaluator(onnx_model)

expected = sess1.run(None, {"X": X, "W": W, "B": B})[0]
got = sess2.run(None, {"X": X, "W": W, "B": B})[0]
diff = np.abs(expected - got).max()
print(f"difference: {diff}")

difference: 0.0

Everything works fine.
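As an independent sanity check, the output shape and the first output value can be worked out by hand. The sketch below is a pure-Python illustration, not part of onnx or onnx-extended: the helper names `conv_output_size` and `naive_conv2d` are hypothetical, and it assumes a single channel, zero padding of 1 on every side, a stride of 2, no dilation and a zero bias, matching the node attributes and inputs used above.

```python
def conv_output_size(size, kernel, pad_begin, pad_end, stride, dilation=1):
    """Output length along one spatial axis for a convolution with explicit pads."""
    effective_kernel = dilation * (kernel - 1) + 1
    return (size + pad_begin + pad_end - effective_kernel) // stride + 1


def naive_conv2d(x, w, pad=1, stride=2):
    """Single-channel 2D convolution on nested lists (zero padding, no bias)."""
    height, width = len(x), len(x[0])
    k_h, k_w = len(w), len(w[0])
    out_h = conv_output_size(height, k_h, pad, pad, stride)
    out_w = conv_output_size(width, k_w, pad, pad, stride)
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            acc = 0.0
            for di in range(k_h):
                for dj in range(k_w):
                    r = i * stride + di - pad
                    c = j * stride + dj - pad
                    # Positions outside the image fall in the zero padding.
                    if 0 <= r < height and 0 <= c < width:
                        acc += x[r][c] * w[di][dj]
            out[i][j] = acc
    return out


size = 64
# Same values as np.arange(64 * 64).reshape(64, 64) in the example.
x = [[float(r * size + c) for c in range(size)] for r in range(size)]
w = [[1.0] * 3 for _ in range(3)]

y = naive_conv2d(x, w)
print(len(y), len(y[0]))  # 32 32
print(y[0][0])            # 130.0 = 0 + 1 + 64 + 65
```

With a 64x64 input, a 3x3 kernel, pads of 1 and strides of 2, each spatial axis shrinks to (64 + 2 - 3) // 2 + 1 = 32, so both evaluators are expected to return an output of shape (1, 1, 32, 32).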

Time measurement#

ReferenceEvaluator: 0.0008064220000014756s

CReferenceEvaluator: 9.955339999851277e-05s

speedup is 8.100396370325099

Let’s add onnxruntime as well.

InferenceSession: 2.9544599999098864e-05s

speedup is 27.295072535288078
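The code that produced these timings is not shown in this section. The sketch below illustrates one way such averages and speedups could be computed. It uses the standard-library `timeit` module rather than the `measure_time` helper imported earlier, and the names `average_time` and `speedup` are hypothetical. In the example, the measured callable would be something like `lambda: sess1.run(None, feeds)`.

```python
import timeit


def average_time(fn, repeat=5, number=20):
    """Average duration in seconds of one call to fn.

    fn is called `number` times per run, and the run is repeated
    `repeat` times; the result averages over all calls.
    """
    totals = timeit.repeat(fn, repeat=repeat, number=number)
    return sum(totals) / (repeat * number)


def speedup(baseline, candidate):
    """How many times faster the candidate is than the baseline."""
    return baseline / candidate


# Stand-in workload, only to show how the helper is called.
t = average_time(lambda: sum(i * i for i in range(1000)))
print(f"average: {t}s")

# Speedups recomputed from the timings reported above,
# both relative to ReferenceEvaluator.
t_ref = 0.0008064220000014756
t_cref = 9.955339999851277e-05
t_ort = 2.9544599999098864e-05
print(f"CReferenceEvaluator speedup: {speedup(t_ref, t_cref):.4f}")
print(f"InferenceSession speedup: {speedup(t_ref, t_ort):.4f}")
```

Both reported speedups use the ReferenceEvaluator time as the baseline, which is why the onnxruntime figure (about 27.3) is larger than the CReferenceEvaluator figure (about 8.1).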

Plotting#

data = []

for i in tqdm([16, 32, 48, 64]):
    sH, sW = i, i
    X = np.arange(sW * sH).reshape((1, 1, sH, sW)).astype(np.float32)
    W = np.ones((1, 1, 3, 3), dtype=np.float32)
    B = np.array([[[[0]]]], dtype=np.float32)
    feeds = {"X": X, "W": W, "B": B}
    t1 = measure_time(lambda: sess1.run(None, feeds))
    t2 = measure_time(lambda: sess2.run(None, feeds))
    obs = dict(size=i, onnx=t1["average"], onnx_extended=t2["average"])
    data.append(obs)
    if unit_test_going() and len(data) >= 2:
        break

df = DataFrame(data)
df

Finally, let’s plot the results.

df = df.set_index("size")
fig, ax = plt.subplots(1, 1, figsize=(10, 4))
df.plot(
    ax=ax, logx=True, logy=True, title="Comparison python / C implementation for Conv"
)
df["speedup"] = df["onnx"] / df["onnx_extended"]
ax2 = ax.twinx()
df[["speedup"]].plot(ax=ax2, color="green")

fig.tight_layout()
fig.savefig("plot_op_conv.png")
# plt.show()

Total running time of the script: (0 minutes 10.150 seconds)