
TreeEnsemble optimization#

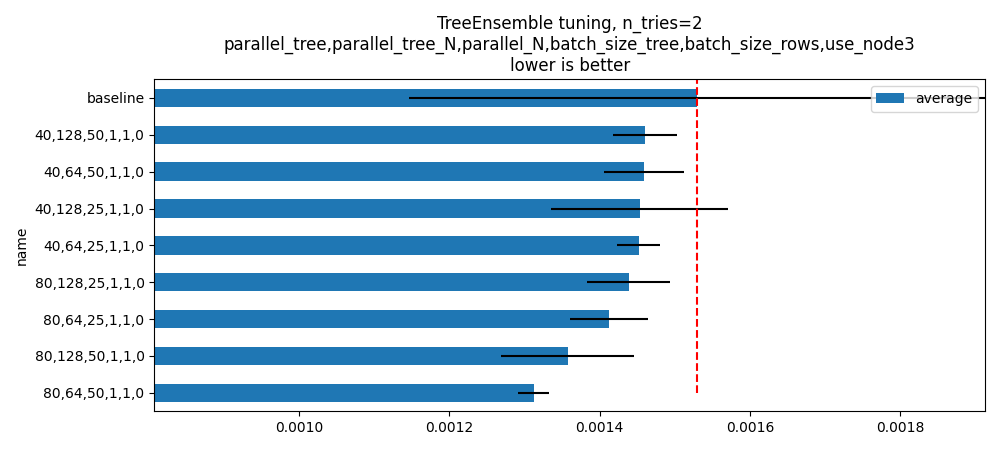

The execution time of a TreeEnsembleRegressor can vary widely depending on how the computation is parallelized: by trees, by rows, or by both, and depending on whether the input is a single row, a short batch of rows, or a longer one. The implementation in onnxruntime does not let the user change the predetermined settings, but a custom kernel might. That is what this example measures.
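To make the two parallelization axes concrete, here is a minimal pure-Python sketch. It is not the onnxruntime or onnx-extended implementation: the `trees` list and the two `predict_*` functions are hypothetical stand-ins, and the loops run sequentially. The outer loop only marks which unit of work (a row or a tree) a thread would receive.

```python
# Toy ensemble: each "tree" is a hypothetical function row -> float.
trees = [
    lambda row: 1.0 if row[0] <= 0.5 else 2.0,
    lambda row: 0.5 if row[1] <= 0.0 else -0.5,
    lambda row: 3.0 if row[0] + row[1] <= 1.0 else 0.0,
]
rows = [(0.2, -1.0), (0.9, 0.3), (0.5, 0.5)]


def predict_by_rows(trees, rows):
    # Outer loop over rows: each row could be handed to a different thread.
    return [sum(tree(row) for tree in trees) for row in rows]


def predict_by_trees(trees, rows):
    # Outer loop over trees: each tree could be handed to a different thread,
    # and the partial results are accumulated per row.
    totals = [0.0] * len(rows)
    for tree in trees:
        for i, row in enumerate(rows):
            totals[i] += tree(row)
    return totals


by_rows = predict_by_rows(trees, rows)
by_trees = predict_by_trees(trees, rows)
print(by_rows, by_trees)
```

Both orders produce the same predictions here. Which one is faster in a real kernel depends on the number of trees and the batch size, which is why several settings are benchmarked.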

The default set of parameters to try is very short so that the example runs fast. Many more parameters can be tried with the LONG scenario:

python plot_optim_tree_ensemble.py --scenario=LONG

To change the training parameters:

python plot_optim_tree_ensemble.py --n_trees=100 --max_depth=10 --n_features=50 --batch_size=100000

Another example with a full list of parameters:

python plot_optim_tree_ensemble.py --n_trees=100 --max_depth=10 --n_features=50 --batch_size=100000 --tries=3 --scenario=CUSTOM --parallel_tree=80,40 --parallel_tree_N=128,64 --parallel_N=50,25 --batch_size_tree=1,2 --batch_size_rows=1,2 --use_node3=0

Another example:

python plot_optim_tree_ensemble.py --n_trees=100 --n_features=10 --batch_size=10000 --max_depth=8 -s SHORT

import logging

import os

import timeit

import numpy

import onnx

from onnx.helper import make_graph, make_model

from onnx.reference import ReferenceEvaluator

import matplotlib.pyplot as plt

from pandas import DataFrame, concat

from sklearn.datasets import make_regression

from sklearn.ensemble import RandomForestRegressor

from skl2onnx import to_onnx

from onnxruntime import InferenceSession, SessionOptions

from onnx_array_api.plotting.text_plot import onnx_simple_text_plot

from onnx_extended.reference import CReferenceEvaluator

from onnx_extended.ortops.optim.cpu import get_ort_ext_libs

from onnx_extended.ortops.optim.optimize import (

change_onnx_operator_domain,

get_node_attribute,

optimize_model,

)

from onnx_extended.tools.onnx_nodes import multiply_tree

from onnx_extended.ext_test_case import get_parsed_args, unit_test_going

logging.getLogger("matplotlib.font_manager").setLevel(logging.ERROR)

script_args = get_parsed_args(

"plot_optim_tree_ensemble",

description=__doc__,

scenarios={

"SHORT": "short optimization (default)",

"LONG": "test more options",

"CUSTOM": "use values specified by the command line",

},

n_features=(2 if unit_test_going() else 5, "number of features to generate"),

n_trees=(3 if unit_test_going() else 10, "number of trees to train"),

max_depth=(2 if unit_test_going() else 5, "max_depth"),

batch_size=(1000 if unit_test_going() else 10000, "batch size"),

parallel_tree=("80,160,40", "values to try for parallel_tree"),

parallel_tree_N=("256,128,64", "values to try for parallel_tree_N"),

parallel_N=("100,50,25", "values to try for parallel_N"),

batch_size_tree=("2,4,8", "values to try for batch_size_tree"),

batch_size_rows=("2,4,8", "values to try for batch_size_rows"),

use_node3=("0,1", "values to try for use_node3"),

expose="",

n_jobs=("-1", "number of jobs to train the RandomForestRegressor"),

)

Training a model#

batch_size = script_args.batch_size

n_features = script_args.n_features

n_trees = script_args.n_trees

max_depth = script_args.max_depth

filename = f"plot_optim_tree_ensemble-f{n_features}-" f"t{n_trees}-d{max_depth}.onnx"

if not os.path.exists(filename):
    X, y = make_regression(
        batch_size + max(batch_size, 2 ** (max_depth + 1)),
        n_features=n_features,
        n_targets=1,
    )
    print(f"Training to get {filename!r} with X.shape={X.shape}")
    X, y = X.astype(numpy.float32), y.astype(numpy.float32)
    # To be faster, we train only 1 tree.
    model = RandomForestRegressor(
        1, max_depth=max_depth, verbose=2, n_jobs=int(script_args.n_jobs)
    )
    model.fit(X[:-batch_size], y[:-batch_size])
    onx = to_onnx(model, X[:1])

    # And we multiply the trees.
    node = multiply_tree(onx.graph.node[0], n_trees)
    onx = make_model(
        make_graph([node], onx.graph.name, onx.graph.input, onx.graph.output),
        domain=onx.domain,
        opset_imports=onx.opset_import,
    )

    with open(filename, "wb") as f:
        f.write(onx.SerializeToString())
else:
    X, y = make_regression(batch_size, n_features=n_features, n_targets=1)
    X, y = X.astype(numpy.float32), y.astype(numpy.float32)
    # Reload the saved model so that `onx` is also defined in this branch.
    onx = onnx.load(filename)

Xb, yb = X[-batch_size:].copy(), y[-batch_size:].copy()

Training to get 'plot_optim_tree_ensemble-f5-t10-d5.onnx' with X.shape=(20000, 5)

[Parallel(n_jobs=-1)]: Using backend ThreadingBackend with 8 concurrent workers.

building tree 1 of 1

[Parallel(n_jobs=-1)]: Done 1 out of 1 | elapsed: 0.1s finished

2023-10-13 16:49:05,398 skl2onnx [DEBUG] - [Var] +Variable('X', 'X', type=FloatTensorType(shape=[None, 5]))

2023-10-13 16:49:05,399 skl2onnx [DEBUG] - [Var] update is_root=True for Variable('X', 'X', type=FloatTensorType(shape=[None, 5]))

2023-10-13 16:49:05,399 skl2onnx [DEBUG] - [parsing] found alias='SklearnRandomForestRegressor' for type=<class 'sklearn.ensemble._forest.RandomForestRegressor'>.

2023-10-13 16:49:05,399 skl2onnx [DEBUG] - [Op] +Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='', outputs='', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,401 skl2onnx [DEBUG] - [Op] add In Variable('X', 'X', type=FloatTensorType(shape=[None, 5])) to Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,401 skl2onnx [DEBUG] - [Var] +Variable('variable', 'variable', type=FloatTensorType(shape=[]))

2023-10-13 16:49:05,401 skl2onnx [DEBUG] - [Var] set parent for Variable('variable', 'variable', type=FloatTensorType(shape=[])), parent=Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,402 skl2onnx [DEBUG] - [Op] add Out Variable('variable', 'variable', type=FloatTensorType(shape=[])) to Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,402 skl2onnx [DEBUG] - [Var] update is_leaf=True for Variable('variable', 'variable', type=FloatTensorType(shape=[]))

2023-10-13 16:49:05,402 skl2onnx [DEBUG] - [Var] update is_fed=True for Variable('X', 'X', type=FloatTensorType(shape=[None, 5])), parent=None

2023-10-13 16:49:05,402 skl2onnx [DEBUG] - [Var] update is_fed=False for Variable('variable', 'variable', type=FloatTensorType(shape=[])), parent=Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,402 skl2onnx [DEBUG] - [Op] update is_evaluated=False for Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,403 skl2onnx [DEBUG] - [Shape2] call infer_types for Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,403 skl2onnx [DEBUG] - [Shape-a] Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2)) fed 'True' - 'False'

2023-10-13 16:49:05,403 skl2onnx [DEBUG] - [Shape-b] Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2)) inputs=[Variable('X', 'X', type=FloatTensorType(shape=[None, 5]))] - outputs=[Variable('variable', 'variable', type=FloatTensorType(shape=[None, 1]))]

2023-10-13 16:49:05,404 skl2onnx [DEBUG] - [Conv] call Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2)) fed 'True' - 'False'

2023-10-13 16:49:05,404 skl2onnx [DEBUG] - [Node] 'TreeEnsembleRegressor' - 'X' -> 'variable' (name='TreeEnsembleRegressor')

2023-10-13 16:49:05,405 skl2onnx [DEBUG] - [Conv] end - Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,405 skl2onnx [DEBUG] - [Op] update is_evaluated=True for Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))

2023-10-13 16:49:05,405 skl2onnx [DEBUG] - [Var] update is_fed=True for Variable('variable', 'variable', type=FloatTensorType(shape=[None, 1])), parent=Operator(type='SklearnRandomForestRegressor', onnx_name='SklearnRandomForestRegressor', inputs='X', outputs='variable', raw_operator=RandomForestRegressor(max_depth=5, n_estimators=1, n_jobs=-1, verbose=2))
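The sample counts used in the training step can be checked with plain arithmetic. The sketch below only re-evaluates the expression passed to `make_regression` with the default values of this example (`batch_size=10000`, `max_depth=5`). The names `n_samples`, `n_train` and `n_bench` are introduced here for illustration.

```python
batch_size, max_depth = 10000, 5  # default values of this example

# Same expression as the first argument of make_regression in the training code.
n_samples = batch_size + max(batch_size, 2 ** (max_depth + 1))
n_train = n_samples - batch_size  # rows seen by fit: X[:-batch_size]
n_bench = batch_size              # rows kept for the benchmark: X[-batch_size:]
print(n_samples, n_train, n_bench)
```

The total agrees with the shape `(20000, 5)` printed in the log, and the rows used for timing are disjoint from the rows used for training.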

Rewrite the onnx file to use a different kernel#

The custom kernel is mapped to a custom operator with the same name and the same attributes, in the domain “onnx_extented.ortops.optim.cpu”. A function does that replacement. First, the current model.

opset: domain='ai.onnx.ml' version=1

opset: domain='' version=19

input: name='X' type=dtype('float32') shape=['', 5]

TreeEnsembleRegressor(X, n_targets=1, nodes_falsenodeids=630:[32,17,10...62,0,0], nodes_featureids=630:[2,4,3...4,0,0], nodes_hitrates=630:[1.0,1.0...1.0,1.0], nodes_missing_value_tracks_true=630:[0,0,0...0,0,0], nodes_modes=630:[b'BRANCH_LEQ',b'BRANCH_LEQ'...b'LEAF',b'LEAF'], nodes_nodeids=630:[0,1,2...60,61,62], nodes_treeids=630:[0,0,0...9,9,9], nodes_truenodeids=630:[1,2,3...61,0,0], nodes_values=630:[-0.3130105137825012,0.09077119082212448...0.0,0.0], post_transform=b'NONE', target_ids=320:[0,0,0...0,0,0], target_nodeids=320:[5,6,8...59,61,62], target_treeids=320:[0,0,0...9,9,9], target_weights=320:[-349.0422668457031,-258.12200927734375...258.0306396484375,354.7446594238281]) -> variable

output: name='variable' type=dtype('float32') shape=['', 1]
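The attribute lengths printed above (630 for the `nodes_*` attributes, 320 for the `target_*` attributes) are consistent with ten copies of one complete binary tree of depth 5. That the trained tree is complete is an assumption drawn from these counts, not something the example states. A short check:

```python
max_depth, n_trees = 5, 10  # default values of this example

nodes_per_tree = 2 ** (max_depth + 1) - 1  # internal nodes plus leaves
leaves_per_tree = 2 ** max_depth           # one target weight per leaf

total_nodes = nodes_per_tree * n_trees     # length of the nodes_* attributes
total_leaves = leaves_per_tree * n_trees   # length of the target_* attributes
print(total_nodes, total_leaves)
```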

And then the modified model.

def transform_model(onx, **kwargs):
    att = get_node_attribute(onx.graph.node[0], "nodes_modes")
    modes = ",".join(map(lambda s: s.decode("ascii"), att.strings))
    return change_onnx_operator_domain(
        onx,
        op_type="TreeEnsembleRegressor",
        op_domain="ai.onnx.ml",
        new_op_domain="onnx_extented.ortops.optim.cpu",
        nodes_modes=modes,
        **kwargs,
    )

print("Tranform model to add a custom node.")

onx_modified = transform_model(onx)

print(f"Save into {filename + 'modified.onnx'!r}.")

with open(filename + "modified.onnx", "wb") as f:
    f.write(onx_modified.SerializeToString())

print("done.")

print(onnx_simple_text_plot(onx_modified))

Tranform model to add a custom node.

Save into 'plot_optim_tree_ensemble-f5-t10-d5.onnxmodified.onnx'.

done.

opset: domain='ai.onnx.ml' version=1

opset: domain='' version=19

opset: domain='onnx_extented.ortops.optim.cpu' version=1

input: name='X' type=dtype('float32') shape=['', 5]

TreeEnsembleRegressor[onnx_extented.ortops.optim.cpu](X, nodes_modes=b'BRANCH_LEQ,BRANCH_LEQ,BRANCH_LEQ,BRANC...LEAF,LEAF', n_targets=1, nodes_falsenodeids=630:[32,17,10...62,0,0], nodes_featureids=630:[2,4,3...4,0,0], nodes_hitrates=630:[1.0,1.0...1.0,1.0], nodes_missing_value_tracks_true=630:[0,0,0...0,0,0], nodes_nodeids=630:[0,1,2...60,61,62], nodes_treeids=630:[0,0,0...9,9,9], nodes_truenodeids=630:[1,2,3...61,0,0], nodes_values=630:[-0.3130105137825012,0.09077119082212448...0.0,0.0], post_transform=b'NONE', target_ids=320:[0,0,0...0,0,0], target_nodeids=320:[5,6,8...59,61,62], target_treeids=320:[0,0,0...9,9,9], target_weights=320:[-349.0422668457031,-258.12200927734375...258.0306396484375,354.7446594238281]) -> variable

output: name='variable' type=dtype('float32') shape=['', 1]
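In the modified model, `nodes_modes` is a single comma-separated string instead of a list of strings. The sketch below isolates that conversion on a hypothetical four-entry excerpt; the `strings` list stands in for `att.strings` in `transform_model`. Why the custom operator expects one string is not stated in this example.

```python
# Hypothetical excerpt of the bytes stored in the nodes_modes attribute.
strings = [b"BRANCH_LEQ", b"BRANCH_LEQ", b"LEAF", b"LEAF"]

# Same idea as in transform_model: decode each entry and join with commas.
modes = ",".join(s.decode("ascii") for s in strings)
print(modes)

# The original list can be recovered by splitting on the commas.
recovered = [m.encode("ascii") for m in modes.split(",")]
print(recovered == strings)
```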

Comparing onnxruntime and the custom kernel#

print(f"Loading {filename!r}")

sess_ort = InferenceSession(filename, providers=["CPUExecutionProvider"])

r = get_ort_ext_libs()

print(f"Creating SessionOptions with {r!r}")

opts = SessionOptions()

if r is not None:
    opts.register_custom_ops_library(r[0])

print(f"Loading modified {filename!r}")

sess_cus = InferenceSession(

onx_modified.SerializeToString(), opts, providers=["CPUExecutionProvider"]

)

print(f"Running once with shape {Xb.shape}.")

base = sess_ort.run(None, {"X": Xb})[0]

print(f"Running modified with shape {Xb.shape}.")

got = sess_cus.run(None, {"X": Xb})[0]

print("done.")

Loading 'plot_optim_tree_ensemble-f5-t10-d5.onnx'

Creating SessionOptions with ['~/github/onnx-extended/onnx_extended/ortops/optim/cpu/libortops_optim_cpu.so']

Loading modified 'plot_optim_tree_ensemble-f5-t10-d5.onnx'

Running once with shape (10000, 5).

Running modified with shape (10000, 5).

done.

Discrepancies?

Discrepancies: 0.000244140625
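The reported value, 0.000244140625, is exactly 2**-12. That is also the spacing between two consecutive float32 values in the range [2048, 4096), so the difference may be a single rounding step on a prediction of that magnitude. This is an interpretation, not something the example verifies. The helper `next_float32` below is hypothetical and only handles positive finite inputs.

```python
import struct


def next_float32(x):
    # Reinterpret the float32 bits as an integer, add one, and convert back.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    (nxt,) = struct.unpack("<f", struct.pack("<I", bits + 1))
    return nxt


spacing = next_float32(2048.0) - 2048.0
print(spacing, spacing == 2.0 ** -12)
```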

Simple verification#

Baseline with onnxruntime.

t1 = timeit.timeit(lambda: sess_ort.run(None, {"X": Xb}), number=50)

print(f"baseline: {t1}")

baseline: 0.06290939999962575

The custom implementation.

t2 = timeit.timeit(lambda: sess_cus.run(None, {"X": Xb}), number=50)

print(f"new time: {t2}")

new time: 0.032032600000093225

The same implementation, but run from the onnx Python backend.

ref = CReferenceEvaluator(filename)

ref.run(None, {"X": Xb})

t3 = timeit.timeit(lambda: ref.run(None, {"X": Xb}), number=50)

print(f"CReferenceEvaluator: {t3}")

CReferenceEvaluator: 0.02749690000200644

The Python implementation, also run from the onnx Python backend.

ReferenceEvaluator: 3.0222442999984196 (only 5 times instead of 50)
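`timeit.timeit` returns the total time for `number` executions, so the figures above are not directly comparable when `number` differs (50 versus 5). The sketch below divides each total by its number of calls; the totals are copied from the outputs above and will differ on another machine.

```python
# Totals reported above, with the number of calls used for each measurement.
timings = {
    "onnxruntime": (0.06290939999962575, 50),
    "custom kernel": (0.032032600000093225, 50),
    "CReferenceEvaluator": (0.02749690000200644, 50),
    "ReferenceEvaluator": (3.0222442999984196, 5),
}

per_call = {name: total / number for name, (total, number) in timings.items()}
for name, seconds in per_call.items():
    print(f"{name}: {seconds * 1e3:.3f} ms per call")
```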

Time for comparison#

The custom kernel supports the same attributes as TreeEnsembleRegressor plus new ones to tune the parallelization. They can be seen in tree_ensemble.cc. Let’s try out many possibilities. In the SHORT scenario, the first value in each list is the default.

if unit_test_going():
    optim_params = dict(
        parallel_tree=[40],  # default is 80
        parallel_tree_N=[128],  # default is 128
        parallel_N=[50, 25],  # default is 50
        batch_size_tree=[1],  # default is 1
        batch_size_rows=[1],  # default is 1
        use_node3=[0],  # default is 0
    )
elif script_args.scenario in (None, "SHORT"):
    optim_params = dict(
        parallel_tree=[80, 40],  # default is 80
        parallel_tree_N=[128, 64],  # default is 128
        parallel_N=[50, 25],  # default is 50
        batch_size_tree=[1],  # default is 1
        batch_size_rows=[1],  # default is 1
        use_node3=[0],  # default is 0
    )
elif script_args.scenario == "LONG":
    optim_params = dict(
        parallel_tree=[80, 160, 40],
        parallel_tree_N=[256, 128, 64],
        parallel_N=[100, 50, 25],
        batch_size_tree=[1, 2, 4, 8],
        batch_size_rows=[1, 2, 4, 8],
        use_node3=[0, 1],
    )
elif script_args.scenario == "CUSTOM":
    optim_params = dict(
        parallel_tree=list(int(i) for i in script_args.parallel_tree.split(",")),
        parallel_tree_N=list(int(i) for i in script_args.parallel_tree_N.split(",")),
        parallel_N=list(int(i) for i in script_args.parallel_N.split(",")),
        batch_size_tree=list(int(i) for i in script_args.batch_size_tree.split(",")),
        batch_size_rows=list(int(i) for i in script_args.batch_size_rows.split(",")),
        use_node3=list(int(i) for i in script_args.use_node3.split(",")),
    )
else:
    raise ValueError(
        f"Unknown scenario {script_args.scenario!r}, use --help to get them."
    )

cmds = []

for att, value in optim_params.items():
    cmds.append(f"--{att}={','.join(map(str, value))}")

print("Full list of optimization parameters:")

print(" ".join(cmds))

Full list of optimization parameters:

--parallel_tree=80,40 --parallel_tree_N=128,64 --parallel_N=50,25 --batch_size_tree=1 --batch_size_rows=1 --use_node3=0
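The number of configurations is the product of the list lengths. The sketch below enumerates them with `itertools.product`. It is only an illustration of the search space: how `optimize_model` enumerates it internally is not shown in this example, and `n_tries = 2` is an assumption inferred from the `TRY=0` and `TRY=1` labels in the progress output.

```python
from itertools import product

optim_params = dict(
    parallel_tree=[80, 40],
    parallel_tree_N=[128, 64],
    parallel_N=[50, 25],
    batch_size_tree=[1],
    batch_size_rows=[1],
    use_node3=[0],
)
n_tries = 2  # assumption: inferred from TRY=0 and TRY=1 in the progress output

names = list(optim_params)
configs = [dict(zip(names, values)) for values in product(*optim_params.values())]
n_runs = len(configs) * n_tries
print(len(configs), n_runs)
print(configs[0])
print(configs[1])
```

Eight configurations tried twice give the 16 iterations reported by the progress bar.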

Then the optimization.

def create_session(onx):
    opts = SessionOptions()
    r = get_ort_ext_libs()
    if r is None:
        raise RuntimeError("No custom implementation available.")
    opts.register_custom_ops_library(r[0])
    return InferenceSession(
        onx.SerializeToString(), opts, providers=["CPUExecutionProvider"]
    )

res = optimize_model(

onx,

feeds={"X": Xb},

transform=transform_model,

session=create_session,

baseline=lambda onx: InferenceSession(

onx.SerializeToString(), providers=["CPUExecutionProvider"]

),

params=optim_params,

verbose=True,

number=script_args.number,

repeat=script_args.repeat,

warmup=script_args.warmup,

sleep=script_args.sleep,

n_tries=script_args.tries,

)

i=16/16 TRY=1 parallel_tree=40 parallel_tree_N=64 parallel_N=25 batch_size_tree=1 batch_size_rows=1 use_node3=0: 100%|██████████| 16/16 [00:04<00:00, 3.67it/s]

And the results.

df = DataFrame(res)

df.to_csv("plot_optim_tree_ensemble.csv", index=False)

df.to_excel("plot_optim_tree_ensemble.xlsx", index=False)

print(df.columns)

print(df.head(5))

Index(['average', 'deviation', 'min_exec', 'max_exec', 'repeat', 'number',

'ttime', 'context_size', 'warmup_time', 'n_exp', 'n_exp_name',

'short_name', 'TRY', 'name', 'parallel_tree', 'parallel_tree_N',

'parallel_N', 'batch_size_tree', 'batch_size_rows', 'use_node3'],

dtype='object')

average deviation min_exec ... batch_size_tree batch_size_rows use_node3

0 0.001146 0.000365 0.000977 ... NaN NaN NaN

1 0.001269 0.000679 0.000504 ... 1.0 1.0 0.0

2 0.001494 0.000699 0.000605 ... 1.0 1.0 0.0

3 0.001292 0.000771 0.000576 ... 1.0 1.0 0.0

4 0.001361 0.000608 0.000594 ... 1.0 1.0 0.0

[5 rows x 20 columns]

Sorting#

small_df = df.drop(

[

"min_exec",

"max_exec",

"repeat",

"number",

"context_size",

"n_exp_name",

],

axis=1,

).sort_values("average")

print(small_df.head(n=10))

average deviation ttime ... batch_size_tree batch_size_rows use_node3

0 0.001146 0.000365 0.011456 ... NaN NaN NaN

1 0.001269 0.000679 0.012689 ... 1.0 1.0 0.0

3 0.001292 0.000771 0.012918 ... 1.0 1.0 0.0

11 0.001333 0.000679 0.013328 ... 1.0 1.0 0.0

14 0.001335 0.000865 0.013353 ... 1.0 1.0 0.0

4 0.001361 0.000608 0.013610 ... 1.0 1.0 0.0

10 0.001384 0.000594 0.013835 ... 1.0 1.0 0.0

15 0.001405 0.000831 0.014052 ... 1.0 1.0 0.0

5 0.001418 0.000688 0.014180 ... 1.0 1.0 0.0

8 0.001424 0.000758 0.014236 ... 1.0 1.0 0.0

[10 rows x 14 columns]

Worst#

print(small_df.tail(n=10))

average deviation ttime ... batch_size_tree batch_size_rows use_node3

5 0.001418 0.000688 0.014180 ... 1.0 1.0 0.0

8 0.001424 0.000758 0.014236 ... 1.0 1.0 0.0

9 0.001446 0.000509 0.014457 ... 1.0 1.0 0.0

12 0.001464 0.000822 0.014637 ... 1.0 1.0 0.0

16 0.001480 0.000681 0.014802 ... 1.0 1.0 0.0

2 0.001494 0.000699 0.014942 ... 1.0 1.0 0.0

13 0.001503 0.000776 0.015035 ... 1.0 1.0 0.0

7 0.001513 0.000628 0.015127 ... 1.0 1.0 0.0

6 0.001571 0.000781 0.015711 ... 1.0 1.0 0.0

17 0.001913 0.001220 0.019128 ... NaN NaN NaN

[10 rows x 14 columns]
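Before reading too much into the ranking, it helps to compare the gaps between averages with the reported deviations. The sketch below uses four rows copied from the two tables above. Treating rows 0 and 17 (whose tuning parameters are NaN) as baseline runs is an assumption.

```python
# (index, average, deviation) copied from the two tables above.
rows = [
    (0, 0.001146, 0.000365),   # tuning parameters are NaN: assumed baseline run
    (1, 0.001269, 0.000679),   # fastest custom configuration
    (6, 0.001571, 0.000781),   # slowest custom configuration
    (17, 0.001913, 0.001220),  # tuning parameters are NaN: assumed baseline run
]

custom = [r for r in rows if r[0] in (1, 6)]
custom_spread = max(avg for _, avg, _ in custom) - min(avg for _, avg, _ in custom)
smallest_dev = min(dev for _, _, dev in rows)
print(f"spread between custom configurations: {custom_spread:.6f}")
print(f"smallest reported deviation:          {smallest_dev:.6f}")
```

With these numbers, the gap between the fastest and slowest custom configurations is smaller than the smallest reported deviation, so on a model this small the ordering is probably dominated by measurement noise.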

Plot#

dfm = (

df[["name", "average"]]

.groupby(["name"], as_index=False)

.agg(["mean", "min", "max"])

.copy()

)

if dfm.shape[1] == 3:
    dfm = dfm.reset_index(drop=False)
dfm.columns = ["name", "average", "min", "max"]

dfi = (

dfm[["name", "average", "min", "max"]].sort_values("average").reset_index(drop=True)

)

baseline = dfi[dfi["name"].str.contains("baseline")]

not_baseline = dfi[~dfi["name"].str.contains("baseline")].reset_index(drop=True)

if not_baseline.shape[0] > 50:
    not_baseline = not_baseline[:50]

merged = concat([baseline, not_baseline], axis=0)

merged = merged.sort_values("average").reset_index(drop=True).set_index("name")

skeys = ",".join(optim_params.keys())

print(merged.columns)

fig, ax = plt.subplots(1, 1, figsize=(10, merged.shape[0] / 2))

err_min = merged["average"] - merged["min"]

err_max = merged["max"] - merged["average"]

merged[["average"]].plot.barh(

ax=ax,

title=f"TreeEnsemble tuning, n_tries={script_args.tries}"

f"\n{skeys}\nlower is better",

xerr=[err_min, err_max],

)

b = df.loc[df["name"] == "baseline", "average"].mean()

ax.plot([b, b], [0, df.shape[0]], "r--")

ax.set_xlim(

[

(df["min_exec"].min() + df["average"].min()) / 2,

(df["average"].max() + df["average"].max()) / 2,

]

)

# ax.set_xscale("log")

fig.tight_layout()

fig.savefig("plot_optim_tree_ensemble.png")

Index(['average', 'min', 'max'], dtype='object')
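The plot uses asymmetric error bars: for each name, the bar length is the mean over the tries, and `xerr` holds the distances down to the minimum and up to the maximum. The sketch below reproduces that aggregation with the standard library on hypothetical measurements; the `records` values and the `summary` dictionary are made up for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical measurements: (name, average) pairs, several tries per name.
records = [
    ("baseline", 1.0),
    ("baseline", 2.0),
    ("cfg-a", 1.0),
    ("cfg-a", 1.5),
    ("cfg-a", 3.5),
]

groups = defaultdict(list)
for name, average in records:
    groups[name].append(average)

summary = {}
for name, values in groups.items():
    avg = mean(values)
    # Distances below and above the mean: the two rows passed as xerr.
    summary[name] = (avg, avg - min(values), max(values) - avg)
    print(name, summary[name])
```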

Total running time of the script: (0 minutes 17.924 seconds)