Note

Click here to download the full example code

Measuring CPU/GPU performance with a vector sum#

The examples compares multiple versions of a vector sum, CPU, GPU.

Vector Sum#

from tqdm import tqdm

import numpy

import matplotlib.pyplot as plt

from pandas import DataFrame

from onnx_extended.ext_test_case import measure_time, unit_test_going

from onnx_extended.validation.cpu._validation import (

vector_sum_array_avx as vector_sum_avx,

vector_sum_array_avx_parallel as vector_sum_avx_parallel,

)

try:

from onnx_extended.validation.cuda.cuda_example_py import (

vector_sum0,

vector_sum6,

vector_sum_atomic,

)

except ImportError:

# CUDA is not available

vector_sum0 = None

obs = []

dims = [500, 700, 800, 900, 1000, 1100, 1200, 1300, 1400, 1500, 1600, 1700, 1800, 2000]

if unit_test_going():

dims = dims[:3]

for dim in tqdm(dims):

values = numpy.ones((dim, dim), dtype=numpy.float32).ravel()

diff = abs(vector_sum_avx(dim, values) - dim**2)

res = measure_time(lambda: vector_sum_avx(dim, values), max_time=0.5)

obs.append(

dict(

dim=dim,

size=values.size,

time=res["average"],

direction="avx",

time_per_element=res["average"] / dim**2,

diff=diff,

)

)

diff = abs(vector_sum_avx_parallel(dim, values) - dim**2)

res = measure_time(lambda: vector_sum_avx_parallel(dim, values), max_time=0.5)

obs.append(

dict(

dim=dim,

size=values.size,

time=res["average"],

direction="avx//",

time_per_element=res["average"] / dim**2,

diff=diff,

)

)

if vector_sum0 is None:

# CUDA is not available

continue

diff = abs(vector_sum0(values, 32) - dim**2)

res = measure_time(lambda: vector_sum0(values, 32), max_time=0.5)

obs.append(

dict(

dim=dim,

size=values.size,

time=res["average"],

direction="0cuda32",

time_per_element=res["average"] / dim**2,

diff=diff,

)

)

diff = abs(vector_sum_atomic(values, 32) - dim**2)

res = measure_time(lambda: vector_sum_atomic(values, 32), max_time=0.5)

obs.append(

dict(

dim=dim,

size=values.size,

time=res["average"],

direction="Acuda32",

time_per_element=res["average"] / dim**2,

diff=diff,

)

)

diff = abs(vector_sum6(values, 32) - dim**2)

res = measure_time(lambda: vector_sum6(values, 32), max_time=0.5)

obs.append(

dict(

dim=dim,

size=values.size,

time=res["average"],

direction="6cuda32",

time_per_element=res["average"] / dim**2,

diff=diff,

)

)

diff = abs(vector_sum6(values, 256) - dim**2)

res = measure_time(lambda: vector_sum6(values, 256), max_time=0.5)

obs.append(

dict(

dim=dim,

size=values.size,

time=res["average"],

direction="6cuda256",

time_per_element=res["average"] / dim**2,

diff=diff,

)

)

df = DataFrame(obs)

piv = df.pivot(index="dim", columns="direction", values="time_per_element")

print(piv)

0%| | 0/14 [00:00<?, ?it/s]

7%|7 | 1/14 [00:05<01:06, 5.14s/it]

14%|#4 | 2/14 [00:08<00:51, 4.30s/it]

21%|##1 | 3/14 [00:12<00:44, 4.07s/it]

29%|##8 | 4/14 [00:16<00:41, 4.13s/it]

36%|###5 | 5/14 [00:22<00:41, 4.57s/it]

43%|####2 | 6/14 [00:28<00:41, 5.23s/it]

50%|##### | 7/14 [00:32<00:34, 4.86s/it]

57%|#####7 | 8/14 [00:37<00:28, 4.82s/it]

64%|######4 | 9/14 [00:44<00:26, 5.37s/it]

71%|#######1 | 10/14 [00:48<00:19, 4.98s/it]

79%|#######8 | 11/14 [00:55<00:16, 5.53s/it]

86%|########5 | 12/14 [00:58<00:10, 5.01s/it]

93%|#########2| 13/14 [01:05<00:05, 5.55s/it]

100%|##########| 14/14 [01:09<00:00, 5.17s/it]

100%|##########| 14/14 [01:09<00:00, 4.99s/it]

direction 0cuda32 6cuda256 6cuda32 Acuda32 avx avx//

dim

500 6.000230e-09 4.451110e-09 4.537837e-09 6.829200e-08 1.036323e-10 4.052643e-11

700 3.262156e-09 3.143411e-09 3.169420e-09 5.722844e-08 1.008356e-10 3.673565e-11

800 3.106440e-09 4.700776e-09 4.065319e-09 5.775400e-08 1.090837e-10 3.874028e-11

900 3.834964e-09 3.915696e-09 3.751734e-09 5.641287e-08 2.515770e-10 8.390640e-10

1000 2.761807e-09 3.357732e-09 2.853131e-09 5.732877e-08 2.029123e-10 1.741685e-10

1100 3.641848e-09 2.890547e-09 3.218892e-09 5.730594e-08 3.328775e-10 7.352791e-10

1200 2.679208e-09 3.958343e-09 3.529325e-09 5.596419e-08 2.279202e-10 8.028120e-11

1300 6.032650e-09 3.961533e-09 4.926854e-09 5.667383e-08 1.200082e-09 2.806201e-09

1400 5.723378e-09 4.149460e-09 4.087459e-09 5.868545e-08 4.993190e-10 1.947226e-09

1500 5.119023e-09 4.360560e-09 4.594722e-09 5.643186e-08 1.084624e-09 2.745877e-09

1600 5.124195e-09 4.298465e-09 3.773497e-09 5.641852e-08 9.103740e-10 2.801315e-09

1700 5.201476e-09 4.682862e-09 4.631906e-09 6.014909e-08 1.221765e-09 2.224184e-09

1800 5.014763e-09 4.868312e-09 4.844904e-09 5.925867e-08 1.107320e-09 2.617896e-09

2000 3.080799e-09 3.890268e-09 4.546331e-09 5.705496e-08 1.056126e-09 1.638109e-09

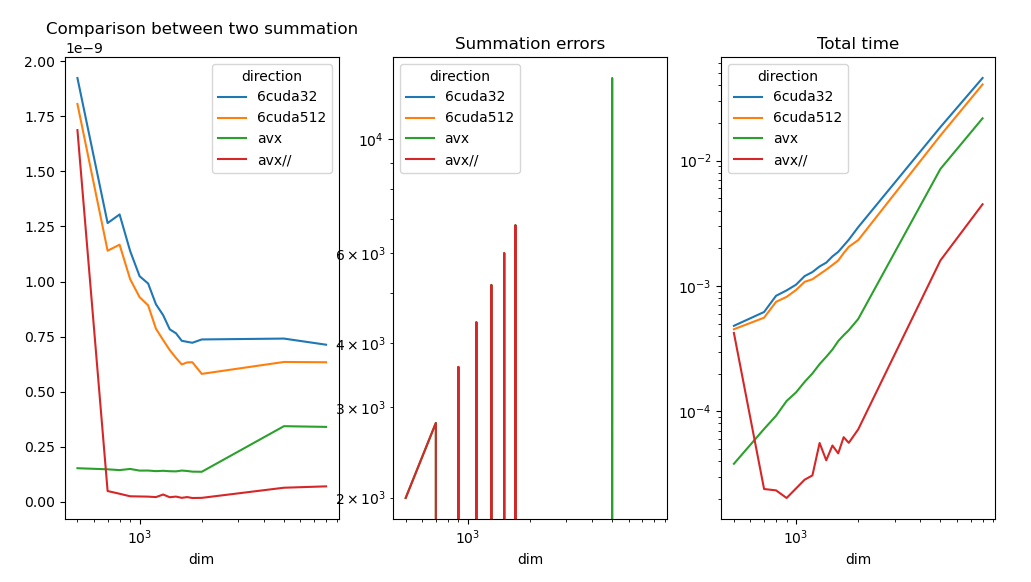

Plots#

piv_diff = df.pivot(index="dim", columns="direction", values="diff")

piv_time = df.pivot(index="dim", columns="direction", values="time")

fig, ax = plt.subplots(1, 3, figsize=(12, 6))

piv.plot(ax=ax[0], logx=True, title="Comparison between two summation")

piv_diff.plot(ax=ax[1], logx=True, logy=True, title="Summation errors")

piv_time.plot(ax=ax[2], logx=True, logy=True, title="Total time")

fig.savefig("plot_bench_gpu_vector_sum_gpu.png")

The results should look like the following.

AVX is still faster. Let’s try to understand why.

Profiling#

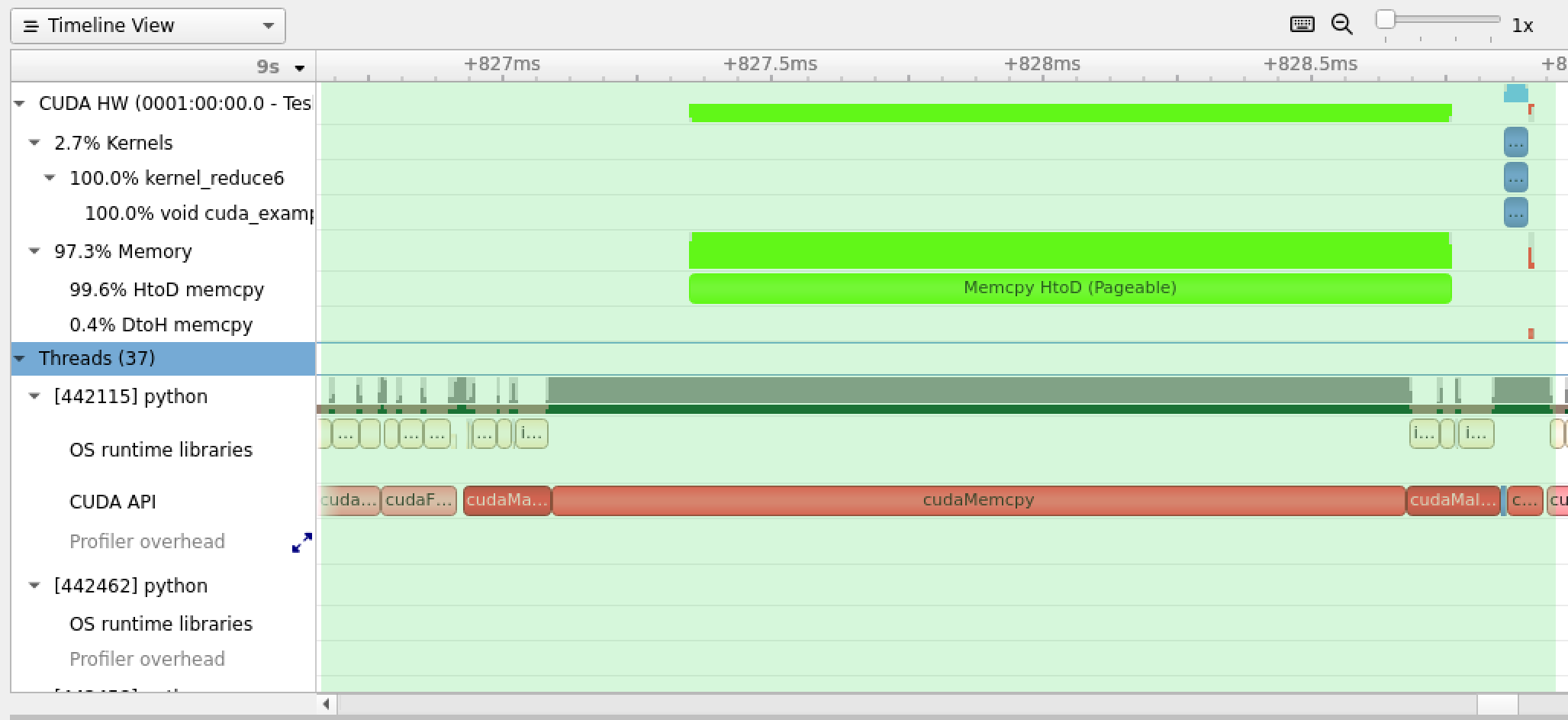

The profiling indicates where the program is most of the time. It shows when the GPU is waiting and when the memory is copied from from host (CPU) to device (GPU) and the other way around. There are the two steps we need to reduce or avoid to make use of the GPU.

Profiling with nsight-compute:

nsys profile --trace=cuda,cudnn,cublas,osrt,nvtx,openmp python <file>

If nsys fails to find python, the command which python should locate it. <file> can be `plot_bench_gpu_vector_sum_gpu.py for example.

Then command nsys-ui starts the Visual Interface interface of the profiling. A screen shot shows the following after loading the profiling.

Most of time is spent in copy the data from CPU memory to GPU memory. In our case, GPU is not really useful because just copying the data from CPU to GPU takes more time than processing it with CPU and AVX instructions.

GPU is useful for deep learning because many operations can be chained and the data stays on GPU memory until the very end. When multiple tools are involved, torch, numpy, onnxruntime, the DLPack avoids copying the data when switching.

The copy of a big tensor can happens by block. The computation may start before the data is fully copied.

Total running time of the script: ( 1 minutes 13.750 seconds)