
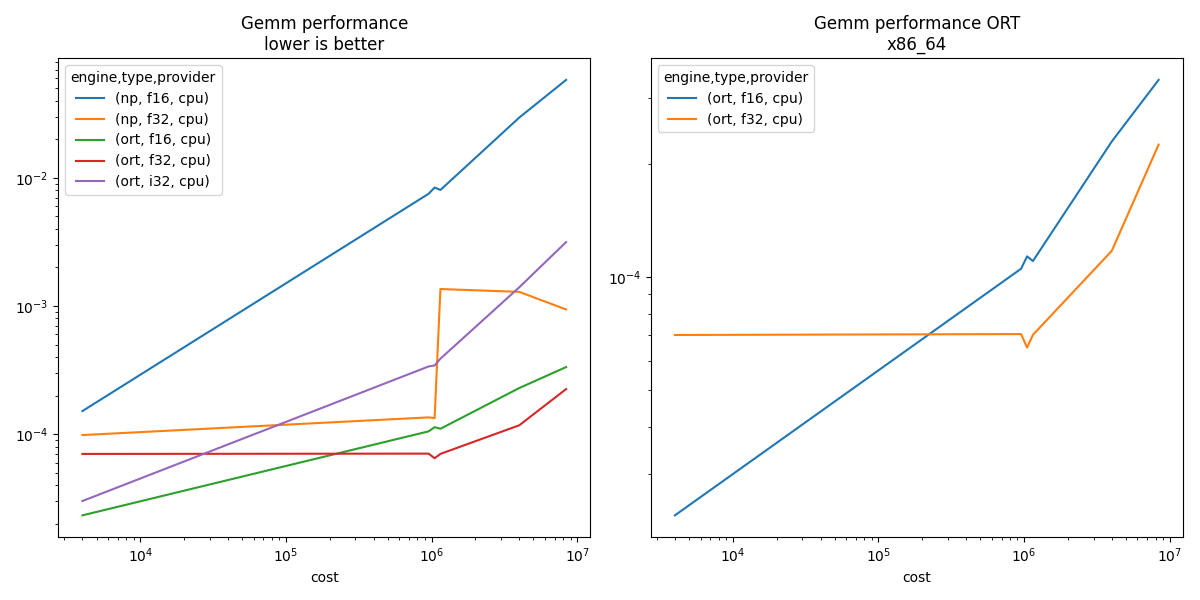

Measuring Gemm performance

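This example compares different element types, different engines (CReferenceEvaluator from onnx-extended and onnxruntime's InferenceSession), different execution providers and different matrix dimensions.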

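ONNX model

The model builds A1 = A + 1, A2 = A + 2 and A3 = A + 3 by repeatedly adding a constant equal to 1. It multiplies A, A1, A2 and A3 by B with four MatMul nodes and sums the four products into C. The constant I is converted to the type of A with CastLike, so the same graph works for every element type. Types whose TensorProto value is 16 or more (bfloat16 and the float8 types here) use opset 19 and IR version 9. The others use opset 18 and IR version 8.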

```python
import platform
from itertools import product
import numpy
from tqdm import tqdm
import matplotlib.pyplot as plt
from pandas import DataFrame, pivot_table
from onnx import TensorProto
from onnx.helper import (
    make_model,
    make_node,
    make_graph,
    make_tensor_value_info,
    make_opsetid,
)
from onnx.checker import check_model
from onnx.numpy_helper import from_array
from onnxruntime import InferenceSession, get_available_providers
from onnxruntime.capi._pybind_state import (
    OrtValue as C_OrtValue,
    OrtDevice as C_OrtDevice,
)
from onnxruntime.capi.onnxruntime_pybind11_state import (
    NotImplemented,
    InvalidGraph,
    InvalidArgument,
)
from onnx_extended.reference import CReferenceEvaluator
from onnx_extended.ext_test_case import unit_test_going, measure_time


def create_model(mat_type=TensorProto.FLOAT):
    # constant 1, cast to the type of A by the CastLike node
    I1 = from_array(numpy.array([1], dtype=numpy.float32), name="I")
    A = make_tensor_value_info("A", mat_type, [None, None])
    B = make_tensor_value_info("B", mat_type, [None, None])
    C = make_tensor_value_info("C", mat_type, [None, None])
    nodes = [
        make_node("CastLike", ["I", "A"], ["Ic"]),
        make_node("Add", ["A", "Ic"], ["A1"]),
        make_node("Add", ["A1", "Ic"], ["A2"]),
        make_node("Add", ["A2", "Ic"], ["A3"]),
        make_node("MatMul", ["A", "B"], ["M0"]),
        make_node("MatMul", ["A1", "B"], ["M1"]),
        make_node("MatMul", ["A2", "B"], ["M2"]),
        make_node("MatMul", ["A3", "B"], ["M3"]),
        make_node("Add", ["M0", "M1"], ["M12"]),
        make_node("Add", ["M2", "M3"], ["M23"]),
        make_node("Add", ["M12", "M23"], ["C"]),
    ]
    graph = make_graph(nodes, "a", [A, B], [C], [I1])
    if mat_type < 16:
        # regular types (TensorProto value < 16): opset 18, IR 8
        opset, ir = 18, 8
    else:
        # bfloat16 (16) and float8 types (17+): opset 19, IR 9
        opset, ir = 19, 9
    onnx_model = make_model(
        graph, opset_imports=[make_opsetid("", opset)], ir_version=ir
    )
    check_model(onnx_model)
    return onnx_model


create_model()
```

```
ir_version: 8
graph {
  node {
    input: "I"
    input: "A"
    output: "Ic"
    op_type: "CastLike"
  }
  node {
    input: "A"
    input: "Ic"
    output: "A1"
    op_type: "Add"
  }
  node {
    input: "A1"
    input: "Ic"
    output: "A2"
    op_type: "Add"
  }
  node {
    input: "A2"
    input: "Ic"
    output: "A3"
    op_type: "Add"
  }
  node {
    input: "A"
    input: "B"
    output: "M0"
    op_type: "MatMul"
  }
  node {
    input: "A1"
    input: "B"
    output: "M1"
    op_type: "MatMul"
  }
  node {
    input: "A2"
    input: "B"
    output: "M2"
    op_type: "MatMul"
  }
  node {
    input: "A3"
    input: "B"
    output: "M3"
    op_type: "MatMul"
  }
  node {
    input: "M0"
    input: "M1"
    output: "M12"
    op_type: "Add"
  }
  node {
    input: "M2"
    input: "M3"
    output: "M23"
    op_type: "Add"
  }
  node {
    input: "M12"
    input: "M23"
    output: "C"
    op_type: "Add"
  }
  name: "a"
  initializer {
    dims: 1
    data_type: 1
    name: "I"
    raw_data: "\000\000\200?"
  }
  input {
    name: "A"
    type {
      tensor_type {
        elem_type: 1
        shape {
          dim {
          }
          dim {
          }
        }
      }
    }
  }
  input {
    name: "B"
    type {
      tensor_type {
        elem_type: 1
        shape {
          dim {
          }
          dim {
          }
        }
      }
    }
  }
  output {
    name: "C"
    type {
      tensor_type {
        elem_type: 1
        shape {
          dim {
          }
          dim {
          }
        }
      }
    }
  }
}
opset_import {
  domain: ""
  version: 18
}
```
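To make the computation explicit, here is a NumPy sketch of what the graph computes. It was written for this page: the helper name `numpy_model` is hypothetical and not part of onnx-extended. The shifted copies are A + i for i = 0..3, so the output simplifies to 4·A·B + 6·J·B, where J is a matrix of ones with the shape of A. The final check relies on that identity.

```python
import numpy


def numpy_model(a, b):
    # hypothetical helper mirroring the ONNX graph node by node
    one = numpy.array([1], dtype=numpy.float32).astype(a.dtype)  # CastLike(I, A)
    a1 = a + one  # Add -> A1
    a2 = a1 + one  # Add -> A2
    a3 = a2 + one  # Add -> A3
    m0, m1, m2, m3 = a @ b, a1 @ b, a2 @ b, a3 @ b  # four MatMul nodes
    return (m0 + m1) + (m2 + m3)  # M12 + M23 -> C


rng = numpy.random.default_rng(0)
a = rng.standard_normal((3, 4)).astype(numpy.float32)
b = rng.standard_normal((4, 5)).astype(numpy.float32)
c = numpy_model(a, b)

# closed form: sum over i = 0..3 of (A + i) @ B = 4 A @ B + 6 ones @ B
expected = 4 * (a @ b) + 6 * (numpy.ones_like(a) @ b)
print(c.shape, numpy.allclose(c, expected, atol=1e-4))
```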

A model to cast a float tensor into another element type. It is used to build the input matrices for every type.

```python
def create_cast(to):
    A = make_tensor_value_info("A", TensorProto.FLOAT, [None, None])
    C = make_tensor_value_info("C", to, [None, None])
    node1 = make_node("Cast", ["A"], ["C"], to=to)
    graph = make_graph([node1], "a", [A], [C])
    if to < 16:
        # regular type
        opset, ir = 18, 8
    else:
        opset, ir = 19, 9
    onnx_model = make_model(
        graph, opset_imports=[make_opsetid("", opset)], ir_version=ir
    )
    check_model(onnx_model)
    return onnx_model


create_cast(TensorProto.FLOAT16)
```

```
ir_version: 8
graph {
  node {
    input: "A"
    output: "C"
    op_type: "Cast"
    attribute {
      name: "to"
      i: 10
      type: INT
    }
  }
  name: "a"
  input {
    name: "A"
    type {
      tensor_type {
        elem_type: 1
        shape {
          dim {
          }
          dim {
          }
        }
      }
    }
  }
  output {
    name: "C"
    type {
      tensor_type {
        elem_type: 10
        shape {
          dim {
          }
          dim {
          }
        }
      }
    }
  }
}
opset_import {
  domain: ""
  version: 18
}
```
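Every engine receives matrices cast from float32, so lower-precision types do not hold exactly the same values. The sketch below illustrates this for float16. It uses NumPy's `astype(numpy.float16)` as a stand-in for the ONNX Cast node; this is an assumption, since both round to nearest here but this page does not check onnxruntime's rounding. It measures the relative error, which stays below the float16 unit roundoff 2^-11 for normal numbers.

```python
import numpy

rng = numpy.random.default_rng(0)
# float32 values in the same range as the benchmark matrices (randn * 10)
x = (rng.standard_normal(10_000) * 10).astype(numpy.float32)
x = x[numpy.abs(x) > 1e-2]  # keep values that are normal float16 numbers
y = x.astype(numpy.float16)  # NumPy stand-in for Cast(to=FLOAT16)

rel = numpy.abs(y.astype(numpy.float32) - x) / numpy.abs(x)
print(f"max relative error {rel.max():.2e}, bound 2**-11 = {2.0**-11:.2e}")
```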

Performance

The benchmark runs every combination of the following element types, engines, execution providers and dimensions (M, N, K).

```python
types = [
    TensorProto.FLOAT,
    TensorProto.UINT32,
    TensorProto.INT32,
    TensorProto.INT16,
    TensorProto.INT8,
    TensorProto.FLOAT16,
    TensorProto.BFLOAT16,
    TensorProto.FLOAT8E4M3FN,
    TensorProto.FLOAT8E5M2,
]

engine = [CReferenceEvaluator, InferenceSession]

providers = [
    ["CPUExecutionProvider"],
    ["CUDAExecutionProvider", "CPUExecutionProvider"],
]

# M, N, K
dims = [
    (10, 10, 10),
    (61, 62, 63),
    (64, 64, 64),
    (65, 66, 67),
    (100, 100, 100),
    (128, 128, 128),
    # (256, 256, 256),
    # (400, 400, 400),
    # (512, 512, 512),
]

map_type = {TensorProto.FLOAT: numpy.float32, TensorProto.FLOAT16: numpy.float16}
```
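The benchmark loop visits 9 × 2 × 2 × 6 = 216 combinations of types, engines, provider lists and dimensions, and skips the unsupported ones. The `cost` column computed in the loop is 4·M·N·K. This is the number of multiply-accumulate operations of the four MatMul nodes, each of which multiplies an M×K matrix by a K×N one. The following plain-Python check replaces the types, engines and providers with hypothetical string labels.

```python
from itertools import product

# hypothetical labels standing in for the TensorProto types, engines and providers
types = ["f32", "u32", "i32", "i16", "i8", "f16", "bf16", "f8e4m3", "f8e5m2"]
engines = ["np", "ort"]
providers = [["cpu"], ["cuda", "cpu"]]
dims = [(10, 10, 10), (61, 62, 63), (64, 64, 64), (65, 66, 67), (100, 100, 100), (128, 128, 128)]

configurations = list(product(types, engines, providers, dims))
print(len(configurations))

# four MatMul nodes, each performing M * N * K multiply-accumulate operations
costs = {dim: 4 * dim[0] * dim[1] * dim[2] for dim in dims}
print(costs)
```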

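Let's cache the matrices involved. Each random float32 matrix is converted into every element type by running the Cast model with onnxruntime on CPU. Types this version of onnxruntime cannot cast to are skipped.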

```python
def to_ort_value(m):
    # wraps a numpy array into an OrtValue stored on CPU
    device = C_OrtDevice(C_OrtDevice.cpu(), C_OrtDevice.default_memory(), 0)
    ort_value = C_OrtValue.ortvalue_from_numpy(m, device)
    return ort_value


matrices = {}
for m, n, k in dims:
    for tt in types:
        for i, j in [(m, k), (k, n)]:
            try:
                sess = InferenceSession(
                    create_cast(tt).SerializeToString(),
                    providers=["CPUExecutionProvider"],
                )
            except (InvalidGraph, InvalidArgument):
                # not supported by this version of onnxruntime
                continue
            vect = (numpy.random.randn(i, j) * 10).astype(numpy.float32)
            ov = to_ort_value(vect)
            ovtt = sess._sess.run_with_ort_values({"A": ov}, ["C"], None)[0]
            matrices[tt, i, j] = ovtt

print(f"{len(matrices)} matrices were created.")
```

```
72 matrices were created.
```
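The cache is keyed by (type, rows, columns), so square dimensions such as 64×64×64 need a single matrix for both A and B. The following plain-Python sketch counts the distinct shapes. It finds 8 shapes per type, which gives 72 matrices for the 9 types when every cast succeeds.

```python
dims = [(10, 10, 10), (61, 62, 63), (64, 64, 64), (65, 66, 67), (100, 100, 100), (128, 128, 128)]

# A has shape (M, K) and B has shape (K, N), as in the caching loop
shapes = {shape for m, n, k in dims for shape in [(m, k), (k, n)]}
n_types = 9
print(len(shapes), len(shapes) * n_types)
```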

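Let's run the benchmark. The reference evaluator only runs on CPU with float32 and float16 inputs. onnxruntime is skipped for providers that are not available on the machine.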

```python
data = []
errors = []
pbar = tqdm(list(product(types, engine, providers, dims)))
# `eng` is the engine class of the current configuration (avoids shadowing `engine`)
for tt, eng, provider, dim in pbar:
    # fewer repetitions for bigger matrices
    if max(dim) <= 200:
        repeat, number = 50, 25
    elif max(dim) <= 256:
        repeat, number = 25, 10
    else:
        repeat, number = 10, 4

    onx = create_model(tt)
    with open(f"plot_bench_gemm_{tt}.onnx", "wb") as f:
        f.write(onx.SerializeToString())
    k1 = (tt, dim[0], dim[2])
    k2 = (tt, dim[2], dim[1])
    if k1 not in matrices:
        errors.append(f"Key k1={k1!r} not in matrices.")
        continue
    if k2 not in matrices:
        errors.append(f"Key k2={k2!r} not in matrices.")
        continue

    if eng == CReferenceEvaluator:
        if tt == TensorProto.FLOAT16 and max(dim) > 50:
            repeat, number = 2, 2
        if provider != ["CPUExecutionProvider"]:
            continue
        if tt not in [TensorProto.FLOAT, TensorProto.FLOAT16]:
            continue

        pbar.set_description(
            f"t={tt} e={eng.__name__} p={provider[0][:4]} dim={dim}"
        )

        feeds = {"A": matrices[k1].numpy(), "B": matrices[k2].numpy()}
        sess = eng(onx)
        sess.run(None, feeds)  # warmup
        obs = measure_time(lambda: sess.run(None, feeds), repeat=repeat, number=number)

    elif eng == InferenceSession:
        if provider[0] not in get_available_providers():
            continue

        pbar.set_description(
            f"t={tt} e={eng.__name__} p={provider[0][:4]} dim={dim}"
        )
        feeds = {"A": matrices[k1], "B": matrices[k2]}
        try:
            sess = eng(onx.SerializeToString(), providers=provider)
        except (NotImplemented, InvalidGraph) as e:
            # not implemented
            errors.append(e)
            continue

        if provider == ["CPUExecutionProvider"]:
            the_feeds = feeds
        else:
            # moving values to CUDA
            device = C_OrtDevice(C_OrtDevice.cuda(), C_OrtDevice.default_memory(), 0)
            try:
                the_feeds = {
                    k: C_OrtValue.ortvalue_from_numpy(v.numpy(), device)
                    for k, v in feeds.items()
                }
            except RuntimeError as e:
                errors.append(f"issue with cuda and type {tt} - {e}")
                continue

        sess._sess.run_with_ort_values(the_feeds, ["C"], None)[0]  # warmup
        obs = measure_time(
            lambda: sess._sess.run_with_ort_values(the_feeds, ["C"], None)[0],
            repeat=repeat,
            number=number,
        )

    else:
        continue

    obs.update(
        dict(
            engine={"InferenceSession": "ort", "CReferenceEvaluator": "np"}[
                eng.__name__
            ],
            # every benchmarked type needs a label, including the ones
            # used by the plot filter (bf16, f8e4m3, f8e5m2)
            type={
                TensorProto.FLOAT: "f32",
                TensorProto.FLOAT16: "f16",
                TensorProto.BFLOAT16: "bf16",
                TensorProto.FLOAT8E4M3FN: "f8e4m3",
                TensorProto.FLOAT8E5M2: "f8e5m2",
                TensorProto.INT8: "i8",
                TensorProto.INT16: "i16",
                TensorProto.INT32: "i32",
                TensorProto.UINT32: "u32",
            }[tt],
            M=dim[0],
            N=dim[1],
            K=dim[2],
            cost=numpy.prod(dim) * 4,
            cost_s=f"{numpy.prod(dim) * 4}-{dim[0]}x{dim[1]}x{dim[2]}",
            repeat=repeat,
            number=number,
            provider={"CPUExecutionProvider": "cpu", "CUDAExecutionProvider": "cuda"}[
                provider[0]
            ],
            platform=platform.processor(),
        )
    )
    data.append(obs)
    if unit_test_going() and len(data) >= 2:
        break


df = DataFrame(data)
df.to_excel("plot_bench_gemm.xlsx")
df.to_csv("plot_bench_gemm.csv")
df.drop(["min_exec", "max_exec"], axis=1).to_csv("plot_bench_gemm_.csv")
df
```
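`measure_time` comes from `onnx_extended.ext_test_case`, and its implementation is not shown on this page. The following sketch is a simplified, hypothetical stand-in built on `timeit.repeat`. It assumes the function times `repeat` batches of `number` calls and reports per-call statistics. The keys `average`, `min_exec` and `max_exec` are the ones this page relies on; `deviation` is an extra assumption. This is not the library's code.

```python
import statistics
import timeit


def measure_time_sketch(stmt, repeat=10, number=5):
    # hypothetical stand-in for onnx_extended's measure_time
    totals = timeit.repeat(stmt, repeat=repeat, number=number)
    per_call = [t / number for t in totals]  # time of one call in each batch
    return dict(
        average=statistics.mean(per_call),
        deviation=statistics.pstdev(per_call),
        min_exec=min(per_call),
        max_exec=max(per_call),
        repeat=repeat,
        number=number,
    )


obs = measure_time_sketch(lambda: sum(range(1000)), repeat=5, number=20)
print(sorted(obs))
```

Dividing by `number` is why the benchmark can use many short calls for small matrices and fewer calls for large ones while keeping comparable per-call averages.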


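The errors. The graph is rejected for float8, int16 and int8 inputs because the MatMul operator does not accept these types. No Add kernel is found for some of the remaining types. As a result, only f16, f32 and i32 appear for onnxruntime in the tables below.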

```
[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. Type Error: Type 'tensor(float8e4m3fn)' of input parameter (A) of operator (MatMul) in node () is invalid.
[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. Type Error: Type 'tensor(float8e5m2)' of input parameter (A) of operator (MatMul) in node () is invalid.
[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. Type Error: Type 'tensor(int16)' of input parameter (A) of operator (MatMul) in node () is invalid.
[ONNXRuntimeError] : 10 : INVALID_GRAPH : This is an invalid model. Type Error: Type 'tensor(int8)' of input parameter (A) of operator (MatMul) in node () is invalid.
[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Add(14) node with name ''
```
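The code that prints these messages is not part of this page. Several configurations fail with the same message, and the listing appears deduplicated and sorted alphabetically. A listing like that can be produced with a sketch such as the following. It is applied to an `errors` list that mixes exception objects and strings, as the benchmark loop builds it. The helper `unique_messages` is hypothetical, and the `RuntimeError` stands in for onnxruntime's exception classes.

```python
def unique_messages(errors):
    # convert exceptions to text, drop duplicates, sort alphabetically
    return sorted({str(e) for e in errors})


errors = [
    "[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Add(14) node with name ''",
    RuntimeError("[ONNXRuntimeError] : 10 : INVALID_GRAPH : ... Type 'tensor(int8)' ..."),
    "[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for Add(14) node with name ''",
]
for message in unique_messages(errors):
    print(message)
```

Alphabetical sorting places ": 10 :" before ": 9 :", which matches the order of the messages on this page.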

Plots

```python
piv = pivot_table(
    df, index=["cost"], columns=["engine", "type", "provider"], values="average"
)
piv.reset_index(drop=False).to_excel("plot_bench_gemm_summary.xlsx")
piv.reset_index(drop=False).to_csv("plot_bench_gemm_summary.csv")
print(piv)
piv
```

```
engine          np                 ort
type           f16       f32       f16       f32       i32
provider       cpu       cpu       cpu       cpu       cpu
cost
4000      0.000152  0.000098  0.000023  0.000070  0.000030
953064    0.007516  0.000135  0.000105  0.000071  0.000338
1048576   0.008402  0.000133  0.000113  0.000065  0.000344
1149720   0.008053  0.001358  0.000110  0.000070  0.000388
4000000   0.029565  0.001290  0.000229  0.000117  0.001401
8388608   0.058163  0.000941  0.000334  0.000225  0.003157
```
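Dividing the cost by the average time gives a throughput in multiply-accumulate operations per second, which makes configurations of different sizes easier to compare. The following pandas sketch uses the 128x128x128 row of the table above; the average times are copied from it.

```python
from pandas import DataFrame

# average times (seconds) for cost = 4 * 128**3, copied from the table above
cost = 4 * 128**3
times = DataFrame(
    {
        "engine": ["np", "np", "ort", "ort", "ort"],
        "type": ["f16", "f32", "f16", "f32", "i32"],
        "average": [0.058163, 0.000941, 0.000334, 0.000225, 0.003157],
    }
)
# multiply-accumulate operations per second
times["ops_per_s"] = cost / times["average"]
print(times.sort_values("ops_per_s", ascending=False))
```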

The same table, indexed by `cost_s`, a label combining the cost and the dimensions M x N x K.

```python
pivs = pivot_table(
    df, index=["cost_s"], columns=["engine", "type", "provider"], values="average"
)
print(pivs)
```

```
engine                     np                 ort
type                      f16       f32       f16       f32       i32
provider                  cpu       cpu       cpu       cpu       cpu
cost_s
1048576-64x64x64     0.008402  0.000133  0.000113  0.000065  0.000344
1149720-65x66x67     0.008053  0.001358  0.000110  0.000070  0.000388
4000-10x10x10        0.000152  0.000098  0.000023  0.000070  0.000030
4000000-100x100x100  0.029565  0.001290  0.000229  0.000117  0.001401
8388608-128x128x128  0.058163  0.000941  0.000334  0.000225  0.003157
953064-61x62x63      0.007516  0.000135  0.000105  0.000071  0.000338
```
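`cost_s` is a plain string, so its rows are sorted lexicographically: 1048576-64x64x64 comes before 4000-10x10x10. If the rows should follow the cost, one option is to zero-pad the cost in the label. This is an alternative formatting, not what the script does; the `label` helper is hypothetical.

```python
dims = [(10, 10, 10), (61, 62, 63), (64, 64, 64), (65, 66, 67), (100, 100, 100), (128, 128, 128)]


def label(dim, width=9):
    # hypothetical zero-padded variant of the cost_s column
    cost = 4 * dim[0] * dim[1] * dim[2]
    return f"{cost:0{width}d}-{dim[0]}x{dim[1]}x{dim[2]}"


labels = [label(d) for d in dims]
print(sorted(labels))
```

With a width of 9 digits, string order and numeric order agree for every cost below one billion.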

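Let's plot the average time against the cost on logarithmic scales. The left graph shows every configuration. The right graph only shows onnxruntime with floating point types.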

```python
dfi = df[
    df.type.isin({"f32", "f16", "bf16", "f8e4m3", "f8e5m2"}) & df.engine.isin({"ort"})
]
pivi = pivot_table(
    dfi, index=["cost"], columns=["engine", "type", "provider"], values="average"
)

fig, ax = plt.subplots(1, 2, figsize=(12, 6))
piv.plot(ax=ax[0], title="Gemm performance\nlower is better", logx=True, logy=True)
if pivi.shape[0] > 0:
    pivi.plot(
        ax=ax[1],
        title=f"Gemm performance ORT\n{platform.processor()}",
        logx=True,
        logy=True,
    )
fig.tight_layout()
fig.savefig("plot_bench_gemm.png")
```

Total running time of the script: ( 0 minutes 16.194 seconds)