
Measuring CPU performance

Processor caches must be taken into account when writing an algorithm; see "Memory part 2: CPU caches" by Ulrich Drepper.

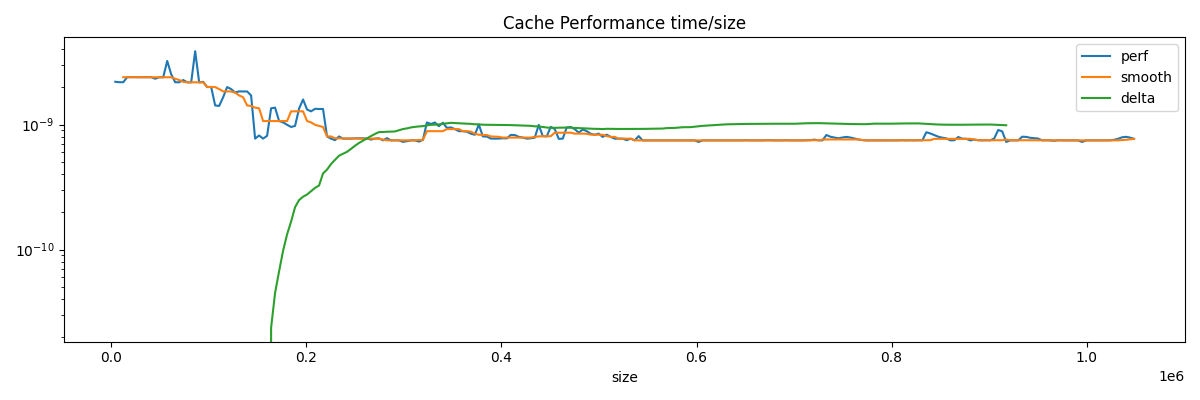

Cache Performance

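The measurement relies on `benchmark_cache`. The loop below calls it for sizes from 2**12 to 2**20 in steps of 2**12, keeps the fastest of three runs and skips negative results (overflows). It then adds a rolling median (`smooth`) and a jump score (`delta`) that compares the mean timing after each size with the mean timing just before it.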

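```python
from pandas import DataFrame
from tqdm import tqdm

# compiled helper shipped with onnx-extended (module path assumed)
from onnx_extended.validation.cpu._validation import benchmark_cache

obs = []
step = 2**12
for i in tqdm(range(step, 2**20 + step, step)):
    # keep the fastest of three runs to reduce noise
    res = min(
        [
            benchmark_cache(i, False),
            benchmark_cache(i, False),
            benchmark_cache(i, False),
        ]
    )
    if res < 0:
        # overflow
        continue
    obs.append(dict(size=i, perf=res))

df = DataFrame(obs)
mean = df.perf.mean()
lag = 32
for i in range(2, df.shape[0]):
    # rolling median over up to 17 neighbouring sizes (.loc slices are inclusive)
    df.loc[i, "smooth"] = df.loc[i - 8 : i + 8, "perf"].median()
    if i > lag and i < df.shape[0] - lag:
        # global mean + forward-window mean - backward-window mean:
        # large when timings rise sharply around size i
        df.loc[i, "delta"] = (
            mean
            + df.loc[i : i + lag, "perf"].mean()
            - df.loc[i - lag + 1 : i + 1, "perf"]
        ).mean()
```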

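The `delta` column acts as a jump detector: for each size it adds the global mean to the mean timing of the following window and subtracts the mean timing of the window just before, so it peaks where timings rise sharply, presumably where the buffer stops fitting in a cache level. Below is a pure-Python sketch of the same score, handy for checking it on synthetic data; `jump_scores` and `detect_jump` are hypothetical names, not part of onnx-extended.

```python
from statistics import fmean
from typing import List, Sequence


def jump_scores(perf: Sequence[float], lag: int) -> List[float]:
    """Same score as the script's `delta` column, without pandas.

    Both windows hold lag + 1 points, like the inclusive `.loc` slices:
    forward = perf[i .. i + lag], backward = perf[i - lag + 1 .. i + 1].
    Indices outside the script's range (lag < i < n - lag) get -inf.
    """
    n = len(perf)
    overall = fmean(perf)
    scores = [float("-inf")] * n
    for i in range(lag + 1, n - lag):
        forward = fmean(perf[i : i + lag + 1])
        backward = fmean(perf[i - lag + 1 : i + 2])
        scores[i] = overall + forward - backward
    return scores


def detect_jump(perf: Sequence[float], lag: int) -> int:
    """Index of the highest score (first one on ties, like argmax)."""
    scores = jump_scores(perf, lag)
    return max(range(len(scores)), key=scores.__getitem__)


# synthetic timings: flat, then 3x slower from index 120 on
perf = [1.0 if k < 120 else 3.0 for k in range(200)]
print(detect_jump(perf, lag=32))  # somewhere in 118..120, right at the step
```

Because both windows overlap by two points, the peak can land one or two positions before the step rather than exactly on it.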

Cache size estimator

```python
# position of the largest jump score (positions match labels: default RangeIndex)
cache_size_index = int(df.delta.argmax())
cache_size = df.loc[cache_size_index, "size"] * 2
print(f"L2 cache size estimation is {cache_size / 2 ** 20:1.3f} MiB.")
```

L2 cache size estimation is 0.664 MiB.

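Verification

The estimate can be compared with the cache sizes reported by `lscpu` (Linux only); the timings are then plotted against the size.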

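```python
import subprocess

import matplotlib.pyplot as plt

try:
    # standard-library call used in place of the example's run_cmd helper
    out = subprocess.run(["lscpu"], capture_output=True, text=True, check=True).stdout
    print("\n".join(_ for _ in out.split("\n") if "cache:" in _))
except Exception as e:
    print(f"failed due to {e}")

df = df.set_index("size")
fig, ax = plt.subplots(1, 1, figsize=(12, 4))
df.plot(ax=ax, title="Cache Performance time/size", logy=True)
fig.tight_layout()
fig.savefig("plot_benchmark_cpu_array.png")
```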

L1d cache: 480 KiB (10 instances)

L1i cache: 320 KiB (10 instances)

L2 cache: 12.5 MiB (10 instances)

L3 cache: 24 MiB (1 instance)

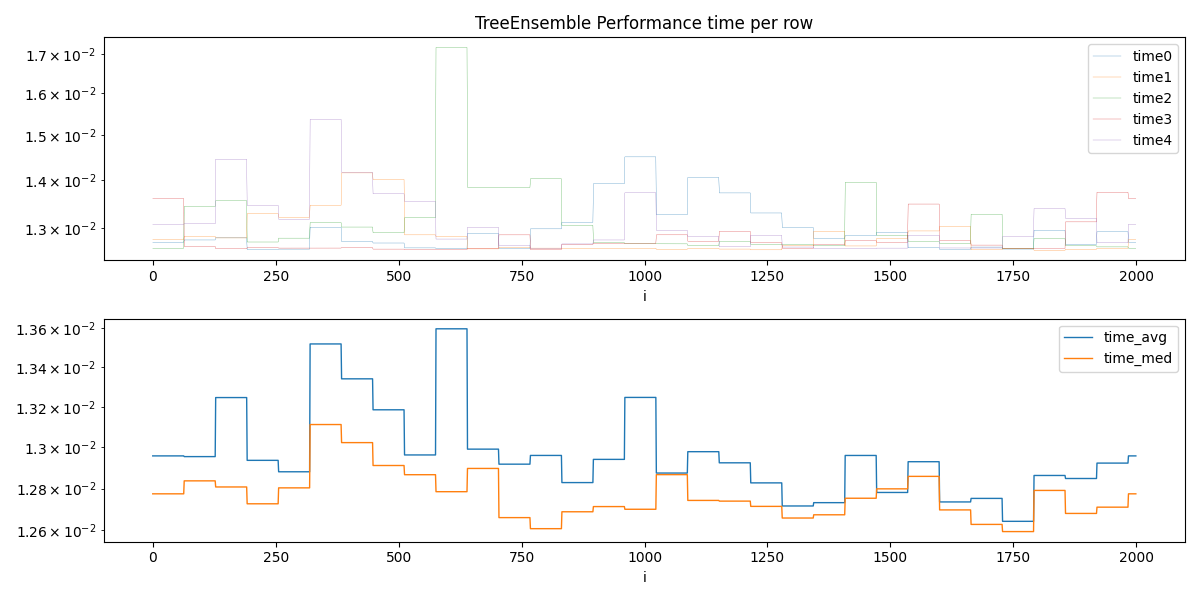
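Recent versions of `lscpu` report each cache level as a total over all instances, so per-core sizes need a division before being compared with the estimate. A minimal parser, assuming the `<value> <KiB|MiB|GiB> (<n> instances)` format shown above; older `lscpu` versions print sizes such as `32K` and would not match. `per_instance_cache` is a hypothetical helper.

```python
import re
from typing import Dict, Tuple

# assumed line format: "<name> cache: <value> <KiB|MiB|GiB> (<n> instance[s])"
_CACHE_LINE = re.compile(
    r"^\s*(\S+) cache:\s+([\d.]+)\s*(KiB|MiB|GiB)(?:\s+\((\d+) instances?\))?"
)
_UNIT = {"KiB": 2**10, "MiB": 2**20, "GiB": 2**30}


def per_instance_cache(lscpu_text: str) -> Dict[str, Tuple[float, int]]:
    """Map cache name -> (bytes per instance, number of instances)."""
    result = {}
    for line in lscpu_text.splitlines():
        m = _CACHE_LINE.match(line)
        if m is None:
            continue
        name, value, unit, count = m.groups()
        instances = int(count) if count else 1
        result[name] = (float(value) * _UNIT[unit] / instances, instances)
    return result


sample = """L1d cache: 480 KiB (10 instances)
L1i cache: 320 KiB (10 instances)
L2 cache: 12.5 MiB (10 instances)
L3 cache: 24 MiB (1 instance)"""
for name, (size, n) in per_instance_cache(sample).items():
    print(f"{name}: {size / 2**10:g} KiB per instance ({n} instances)")
```

On the machine above this gives 48 KiB of L1d and 1280 KiB (1.25 MiB) of L2 per instance, the figures to set against the script's estimate.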

TreeEnsemble Performance

We simulate the computation of a TreeEnsemble with 50 features, 100 trees and a depth of 10 (so $2^{10} = 1024$ nodes, matching `tree_size=1024` below).
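To make the simulated workload concrete, here is a pure-Python sketch of a batched TreeEnsemble evaluation. The flat node layout (children of node k at 2k+1 and 2k+2), the helper names and the loop order are assumptions for illustration; the compiled `benchmark_cache_tree` may be organized differently, and Python is far too slow to show the cache effect itself. Within a batch, each tree's nodes are reused for every row, and the batch's rows are reused by every tree, which is why the batch size matters.

```python
import random
from typing import List, Sequence, Tuple

# hypothetical layout: internal nodes stored breadth-first (children of node k
# at 2k+1 and 2k+2), leaf values stored in a separate list
Tree = Tuple[List[int], List[float], List[float]]


def make_tree(max_depth: int, n_features: int, rng: random.Random) -> Tree:
    n_internal = 2**max_depth - 1
    features = [rng.randrange(n_features) for _ in range(n_internal)]
    thresholds = [rng.random() for _ in range(n_internal)]
    leaves = [rng.random() for _ in range(2**max_depth)]
    return features, thresholds, leaves


def eval_tree(tree: Tree, x: Sequence[float], max_depth: int) -> float:
    features, thresholds, leaves = tree
    node = 0
    for _ in range(max_depth):
        # go left when the feature value is <= threshold, right otherwise
        node = 2 * node + (1 if x[features[node]] <= thresholds[node] else 2)
    # node now sits on the last level; shift it to a leaf slot
    return leaves[node - (2**max_depth - 1)]


def predict_batched(
    trees: List[Tree], X: List[List[float]], max_depth: int, batch_size: int
) -> List[float]:
    out = [0.0] * len(X)
    for start in range(0, len(X), batch_size):
        stop = min(start + batch_size, len(X))
        for tree in trees:
            # this tree's nodes are reused for every row of the batch
            for r in range(start, stop):
                out[r] += eval_tree(tree, X[r], max_depth)
    return out


rng = random.Random(0)
trees = [make_tree(max_depth=6, n_features=50, rng=rng) for _ in range(10)]
X = [[rng.random() for _ in range(50)] for _ in range(200)]
# the batch size changes the memory access order, never the result
assert predict_batched(trees, X, 6, batch_size=1) == predict_batched(trees, X, 6, batch_size=64)
```

Each row still receives the tree contributions in the same order whatever the batch size, so the sums are bit-for-bit identical; only the locality of memory accesses changes.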

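The loop below calls `benchmark_cache_tree` five times (twice during unit tests), collects the time reported for each tested batch size `i` in every run, then aggregates the runs with a mean and a median.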

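```python
from pandas import DataFrame, concat
from tqdm import tqdm

# helpers shipped with onnx-extended (module paths assumed)
from onnx_extended.ext_test_case import unit_test_going
from onnx_extended.validation.cpu._validation import benchmark_cache_tree

dfs = []
cols = []
drop = []
for n in tqdm(range(2 if unit_test_going() else 5)):
    res = benchmark_cache_tree(
        n_rows=2000,
        n_features=50,
        n_trees=100,
        tree_size=1024,
        max_depth=10,
        search_step=64,
    )
    # one row per tested batch size: (batch size, measured time)
    res = [[max(r.row, i), r.time] for i, r in enumerate(res)]
    df = DataFrame(res)
    df.columns = [f"i{n}", f"time{n}"]
    dfs.append(df)
    cols.append(df.columns[-1])
    drop.append(df.columns[0])

# put the runs side by side, keep a single batch-size column, aggregate the times
df = concat(dfs, axis=1).reset_index(drop=True)
df["i"] = df["i0"]
df = df.drop(drop, axis=1)
df["time_avg"] = df[cols].mean(axis=1)
df["time_med"] = df[cols].median(axis=1)

df.head()
```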


Estimation

Optimal batch size is among:

|   | i (batch size) | time_med | time_avg |
|---|---|---|---|
| 0 | 1728 | 0.012594 | 0.012643 |
| 1 | 768 | 0.012608 | 0.012961 |
| 2 | 1664 | 0.012628 | 0.012753 |
| 3 | 1280 | 0.012659 | 0.012716 |
| 4 | 704 | 0.012661 | 0.012918 |
| 5 | 1344 | 0.012674 | 0.012732 |
| 6 | 1856 | 0.012681 | 0.012849 |
| 7 | 832 | 0.012688 | 0.012829 |
| 8 | 1600 | 0.012697 | 0.012736 |
| 9 | 960 | 0.012700 | 0.013247 |
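The rows of this table are sorted by `time_med`. A minimal sketch of that ranking step, assuming timings grouped per batch size; `rank_batch_sizes` and the timing values are hypothetical, not measurements from this benchmark.

```python
from statistics import mean, median
from typing import Dict, List, Tuple


def rank_batch_sizes(
    timings: Dict[int, List[float]], k: int = 10
) -> List[Tuple[int, float, float]]:
    """Return (batch size, median, mean) for the k fastest sizes by median time."""
    rows = [(size, median(ts), mean(ts)) for size, ts in timings.items()]
    rows.sort(key=lambda row: (row[1], row[0]))  # median first, size breaks ties
    return rows[:k]


# hypothetical timings of three runs per batch size
timings = {
    64: [0.020, 0.022, 0.021],
    128: [0.015, 0.016, 0.014],
    256: [0.013, 0.030, 0.012],  # one outlier run
}
for size, med, avg in rank_batch_sizes(timings):
    print(size, round(med, 4), round(avg, 4))
```

Ranking by the median keeps 256 first despite its one slow run; ranking by the mean would place it behind 128, which is why the median is the more robust criterion when a run is disturbed.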

One possible estimation

Estimation: 1152.523905725562

Plots.

```python
cols_time = ["time_avg", "time_med"]
fig, ax = plt.subplots(2, 1, figsize=(12, 6))
# top: every individual run; bottom: mean and median over the runs
df.set_index("i").drop(cols_time, axis=1).plot(
    ax=ax[0], title="TreeEnsemble Performance time per row", logy=True, linewidth=0.2
)
df.set_index("i")[cols_time].plot(ax=ax[1], linewidth=1.0, logy=True)
fig.tight_layout()
fig.savefig("plot_bench_cpu.png")
```

Total running time of the script: (0 minutes 4.262 seconds)