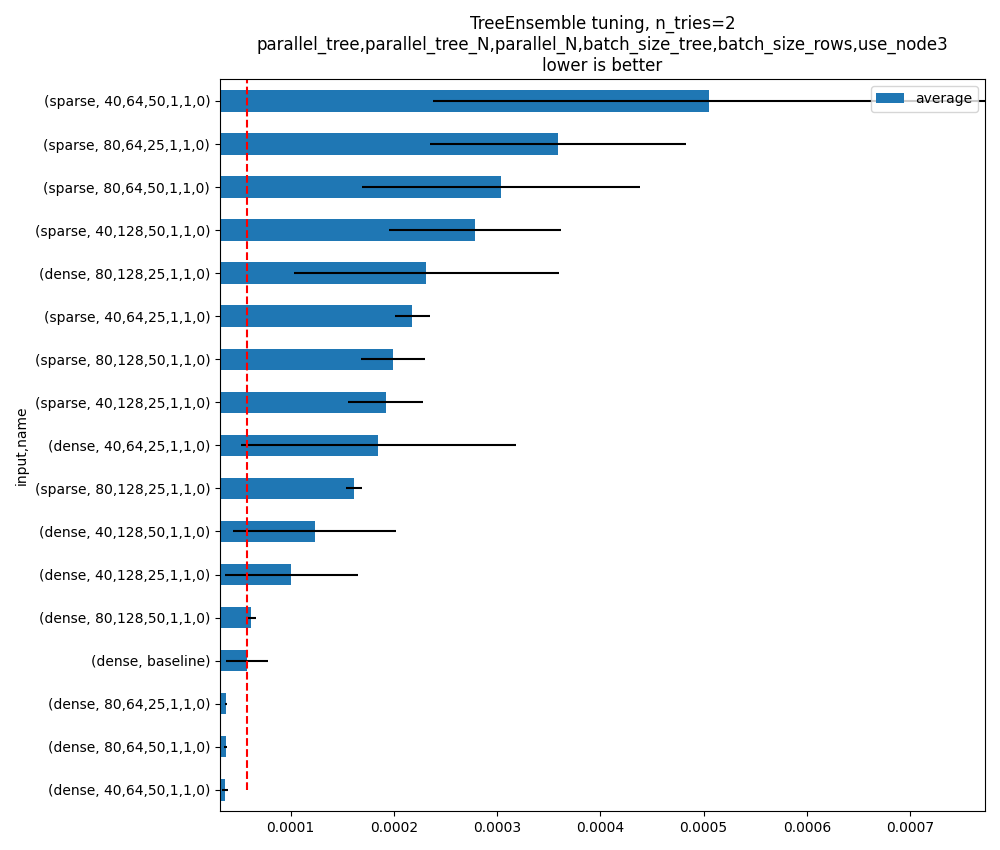

TreeEnsemble, dense, and sparse

This example benchmarks the sparse implementation of TreeEnsemble. The default set of tuning parameters is deliberately small so that the script runs quickly. Many more parameter values can be tried:

python plot_op_tree_ensemble_sparse.py --scenario=LONG

To change the training parameters:

python plot_op_tree_ensemble_sparse.py --n_trees=100 --max_depth=10 --n_features=50 --sparsity=0.9 --batch_size=100000

Another example with a full list of parameters:

python plot_op_tree_ensemble_sparse.py --n_trees=100 --max_depth=10 --n_features=50 --batch_size=100000 --sparsity=0.9 --tries=3 --scenario=CUSTOM --parallel_tree=80,40 --parallel_tree_N=128,64 --parallel_N=50,25 --batch_size_tree=1,2 --batch_size_rows=1,2 --use_node3=0

Another example:

python plot_op_tree_ensemble_sparse.py --n_trees=100 --n_features=10 --batch_size=10000 --max_depth=8 -s SHORT
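In the CUSTOM scenario, each comma-separated option is split into a list of integers, as the script does further down. A minimal sketch of that conversion, using the values from the full example above (the `raw` dictionary is only an illustration of what the parsed arguments might hold):

```python
# Option strings as they would arrive from the command line in the CUSTOM scenario.
raw = {
    "parallel_tree": "80,40",
    "parallel_tree_N": "128,64",
    "parallel_N": "50,25",
    "batch_size_tree": "1,2",
    "batch_size_rows": "1,2",
    "use_node3": "0",
}

# Same conversion as the script: split on commas and cast every item to int.
optim_params = {name: [int(i) for i in value.split(",")] for name, value in raw.items()}
print(optim_params["parallel_tree"])  # [80, 40]
print(optim_params["use_node3"])      # [0]
```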

import logging
import os
import timeit
from typing import Tuple
import numpy
import onnx
from onnx import ModelProto, TensorProto
from onnx.helper import make_graph, make_model, make_tensor_value_info
from pandas import DataFrame, concat
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from skl2onnx import to_onnx
from onnxruntime import InferenceSession, SessionOptions
from onnx_array_api.plotting.text_plot import onnx_simple_text_plot
from onnx_extended.ortops.optim.cpu import get_ort_ext_libs
from onnx_extended.ortops.optim.optimize import (
    change_onnx_operator_domain,
    get_node_attribute,
    optimize_model,
)
from onnx_extended.tools.onnx_nodes import multiply_tree
from onnx_extended.validation.cpu._validation import dense_to_sparse_struct
from onnx_extended.plotting.benchmark import hhistograms
from onnx_extended.args import get_parsed_args
from onnx_extended.ext_test_case import unit_test_going

logging.getLogger("matplotlib.font_manager").setLevel(logging.ERROR)

script_args = get_parsed_args(
    "plot_op_tree_ensemble_sparse",
    description=__doc__,
    scenarios={
        "SHORT": "short optimization (default)",
        "LONG": "test more options",
        "CUSTOM": "use values specified by the command line",
    },
    sparsity=(0.99, "input sparsity"),
    n_features=(2 if unit_test_going() else 500, "number of features to generate"),
    n_trees=(3 if unit_test_going() else 10, "number of trees to train"),
    max_depth=(2 if unit_test_going() else 10, "max_depth"),
    batch_size=(100 if unit_test_going() else 1000, "batch size"),
    parallel_tree=("80,160,40", "values to try for parallel_tree"),
    parallel_tree_N=("256,128,64", "values to try for parallel_tree_N"),
    parallel_N=("100,50,25", "values to try for parallel_N"),
    batch_size_tree=("2,4,8", "values to try for batch_size_tree"),
    batch_size_rows=("2,4,8", "values to try for batch_size_rows"),
    use_node3=("0,1", "values to try for use_node3"),
    expose="",
    n_jobs=("-1", "number of jobs to train the RandomForestRegressor"),
)

Training a model

def train_model(
    batch_size: int, n_features: int, n_trees: int, max_depth: int, sparsity: float
) -> Tuple[str, numpy.ndarray, numpy.ndarray]:
    filename = (
        f"plot_op_tree_ensemble_sparse-f{n_features}-{n_trees}-"
        f"d{max_depth}-s{sparsity}.onnx"
    )
    if not os.path.exists(filename):
        X, y = make_regression(
            batch_size + max(batch_size, 2 ** (max_depth + 1)),
            n_features=n_features,
            n_targets=1,
        )
        mask = numpy.random.rand(*X.shape) <= sparsity
        X[mask] = 0
        X, y = X.astype(numpy.float32), y.astype(numpy.float32)

        print(f"Training to get {filename!r} with X.shape={X.shape}")
        # To be faster, we train only 1 tree.
        model = RandomForestRegressor(
            1, max_depth=max_depth, verbose=2, n_jobs=int(script_args.n_jobs)
        )
        model.fit(X[:-batch_size], y[:-batch_size])
        onx = to_onnx(model, X[:1], target_opset={"": 18, "ai.onnx.ml": 3})

        # And we multiply the trees.
        node = multiply_tree(onx.graph.node[0], n_trees)
        onx = make_model(
            make_graph([node], onx.graph.name, onx.graph.input, onx.graph.output),
            domain=onx.domain,
            opset_imports=onx.opset_import,
            ir_version=onx.ir_version,
        )

        with open(filename, "wb") as f:
            f.write(onx.SerializeToString())
    else:
        X, y = make_regression(batch_size, n_features=n_features, n_targets=1)
        mask = numpy.random.rand(*X.shape) <= sparsity
        X[mask] = 0
        X, y = X.astype(numpy.float32), y.astype(numpy.float32)
    Xb, yb = X[-batch_size:].copy(), y[-batch_size:].copy()
    return filename, Xb, yb


def measure_sparsity(x):
    f = x.flatten()
    return float((f == 0).astype(numpy.int64).sum()) / float(x.size)


batch_size = script_args.batch_size
n_features = script_args.n_features
n_trees = script_args.n_trees
max_depth = script_args.max_depth
sparsity = script_args.sparsity

print(f"batch_size={batch_size}")
print(f"n_features={n_features}")
print(f"n_trees={n_trees}")
print(f"max_depth={max_depth}")
print(f"sparsity={sparsity}")

batch_size=1000

n_features=500

n_trees=10

max_depth=10

sparsity=0.99

training

Training to get 'plot_op_tree_ensemble_sparse-f500-10-d10-s0.99.onnx' with X.shape=(3048, 500)

[Parallel(n_jobs=-1)]: Using backend ThreadingBackend with 20 concurrent workers.

building tree 1 of 1

Xb.shape=(1000, 500)

yb.shape=(1000,)

measured sparsity=0.990094
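The shape reported in the log follows from the sample count passed to make_regression in train_model, and the measured sparsity is the fraction of entries equal to zero. The sketch below reproduces both computations in plain Python. It uses no NumPy, a fixed seed, and a hypothetical matrix much smaller than the script's, so the sparsity it prints is illustrative only.

```python
import random

# Values taken from the run above.
batch_size, max_depth, sparsity = 1000, 10, 0.99

# Same expression as in train_model: number of rows generated before the split.
n_samples = batch_size + max(batch_size, 2 ** (max_depth + 1))
print(n_samples)  # 3048, the first dimension of X.shape in the log


def sparsify(rows, sparsity, rng):
    """Zero each entry with probability `sparsity` (mirrors the random mask)."""
    return [[0.0 if rng.random() <= sparsity else v for v in row] for row in rows]


def measure_sparsity(rows):
    """Fraction of entries that are exactly zero."""
    total = sum(len(row) for row in rows)
    zeros = sum(1 for row in rows for v in row if v == 0)
    return zeros / total


rng = random.Random(0)
# Hypothetical 200 x 50 matrix of non-zero values.
dense = [[rng.uniform(1.0, 2.0) for _ in range(50)] for _ in range(200)]
measured = measure_sparsity(sparsify(dense, sparsity, rng))
print(f"measured sparsity={measured:f}")
```

Because the mask is random, the measured sparsity is close to the requested value but not exactly equal to it, which matches the 0.990094 reported for a requested 0.99.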

Rewrite the onnx file to use a different kernel

The custom kernel is exposed as a custom operator with the same name and the same attributes, under the domain “onnx_extended.ortops.optim.cpu”. We call a function to do that replacement. First, the current model.

opset: domain='ai.onnx.ml' version=1

opset: domain='' version=18

opset: domain='' version=18

input: name='X' type=dtype('float32') shape=['', 500]

TreeEnsembleRegressor(X, n_targets=1, nodes_falsenodeids=790:[78,77,60...0,0,0], nodes_featureids=790:[42,285,196...0,101,0], nodes_hitrates=790:[1.0,1.0...1.0,1.0], nodes_missing_value_tracks_true=790:[0,0,0...0,0,0], nodes_modes=790:[b'BRANCH_LEQ',b'BRANCH_LEQ'...b'LEAF',b'LEAF'], nodes_nodeids=790:[0,1,2...76,77,78], nodes_treeids=790:[0,0,0...9,9,9], nodes_truenodeids=790:[1,2,3...0,0,0], nodes_values=790:[1.1106555461883545,1.1359018087387085...-0.4278838038444519,0.0], post_transform=b'NONE', target_ids=400:[0,0,0...0,0,0], target_nodeids=400:[5,8,10...76,77,78], target_treeids=400:[0,0,0...9,9,9], target_weights=400:[83.8045883178711,-158.5025634765625...461.4992980957031,399.7672119140625]) -> variable

output: name='variable' type=dtype('float32') shape=['', 1]

And then the modified model.

def transform_model(model, use_sparse=False, **kwargs):
    onx = ModelProto()
    onx.ParseFromString(model.SerializeToString())
    att = get_node_attribute(onx.graph.node[0], "nodes_modes")
    modes = ",".join([s.decode("ascii") for s in att.strings]).replace("BRANCH_", "")
    if use_sparse and "new_op_type" not in kwargs:
        kwargs["new_op_type"] = "TreeEnsembleRegressorSparse"
    if use_sparse:
        # with sparse tensor, missing value means 0
        att = get_node_attribute(onx.graph.node[0], "nodes_values")
        thresholds = numpy.array(att.floats, dtype=numpy.float32)
        missing_true = (thresholds >= 0).astype(numpy.int64)
        kwargs["nodes_missing_value_tracks_true"] = missing_true
    new_onx = change_onnx_operator_domain(
        onx,
        op_type="TreeEnsembleRegressor",
        op_domain="ai.onnx.ml",
        new_op_domain="onnx_extended.ortops.optim.cpu",
        nodes_modes=modes,
        **kwargs,
    )
    if use_sparse:
        del new_onx.graph.input[:]
        new_onx.graph.input.append(
            make_tensor_value_info("X", TensorProto.FLOAT, (None,))
        )
    return new_onx

print("Transform model to add a custom node.")
onx_modified = transform_model(onx)
print(f"Save into {filename + 'modified.onnx'!r}.")
with open(filename + "modified.onnx", "wb") as f:
    f.write(onx_modified.SerializeToString())
print("done.")
print(onnx_simple_text_plot(onx_modified))

Transform model to add a custom node.

Save into 'plot_op_tree_ensemble_sparse-f500-10-d10-s0.99.onnxmodified.onnx'.

done.

opset: domain='ai.onnx.ml' version=1

opset: domain='' version=18

opset: domain='' version=18

opset: domain='onnx_extended.ortops.optim.cpu' version=1

input: name='X' type=dtype('float32') shape=['', 500]

TreeEnsembleRegressor[onnx_extended.ortops.optim.cpu](X, nodes_modes=b'LEQ,LEQ,LEQ,LEQ,LEQ,LEAF,LEQ,LEQ,LEAF,...LEAF,LEAF', n_targets=1, nodes_falsenodeids=790:[78,77,60...0,0,0], nodes_featureids=790:[42,285,196...0,101,0], nodes_hitrates=790:[1.0,1.0...1.0,1.0], nodes_missing_value_tracks_true=790:[0,0,0...0,0,0], nodes_nodeids=790:[0,1,2...76,77,78], nodes_treeids=790:[0,0,0...9,9,9], nodes_truenodeids=790:[1,2,3...0,0,0], nodes_values=790:[1.1106555461883545,1.1359018087387085...-0.4278838038444519,0.0], post_transform=b'NONE', target_ids=400:[0,0,0...0,0,0], target_nodeids=400:[5,8,10...76,77,78], target_treeids=400:[0,0,0...9,9,9], target_weights=400:[83.8045883178711,-158.5025634765625...461.4992980957031,399.7672119140625]) -> variable

output: name='variable' type=dtype('float32') shape=['', 1]
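The most visible difference in the modified node is that nodes_modes is now a single comma-separated string instead of a list of strings. A minimal sketch of that conversion, using a short made-up list of modes rather than the 790 entries of the real model:

```python
# Byte strings as stored in the `nodes_modes` attribute (shortened, hypothetical list).
strings = [b"BRANCH_LEQ", b"BRANCH_LEQ", b"LEAF", b"BRANCH_LEQ", b"LEAF", b"LEAF"]

# Same expression as in transform_model: decode, join with commas, drop the prefix.
modes = ",".join(s.decode("ascii") for s in strings).replace("BRANCH_", "")
print(modes)  # LEQ,LEQ,LEAF,LEQ,LEAF,LEAF
```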

Same with sparse.

print("Same transformation but with sparse.")
onx_modified_sparse = transform_model(onx, use_sparse=True)
print(f"Save into {filename + 'modified.sparse.onnx'!r}.")
with open(filename + "modified.sparse.onnx", "wb") as f:
    f.write(onx_modified_sparse.SerializeToString())
print("done.")
print(onnx_simple_text_plot(onx_modified_sparse))

Same transformation but with sparse.

Save into 'plot_op_tree_ensemble_sparse-f500-10-d10-s0.99.onnxmodified.sparse.onnx'.

done.

opset: domain='ai.onnx.ml' version=1

opset: domain='' version=18

opset: domain='' version=18

opset: domain='onnx_extended.ortops.optim.cpu' version=1

input: name='X' type=dtype('float32') shape=['']

TreeEnsembleRegressorSparse[onnx_extended.ortops.optim.cpu](X, nodes_missing_value_tracks_true=790:[1,1,1...1,0,1], nodes_modes=b'LEQ,LEQ,LEQ,LEQ,LEQ,LEAF,LEQ,LEQ,LEAF,...LEAF,LEAF', n_targets=1, nodes_falsenodeids=790:[78,77,60...0,0,0], nodes_featureids=790:[42,285,196...0,101,0], nodes_hitrates=790:[1.0,1.0...1.0,1.0], nodes_nodeids=790:[0,1,2...76,77,78], nodes_treeids=790:[0,0,0...9,9,9], nodes_truenodeids=790:[1,2,3...0,0,0], nodes_values=790:[1.1106555461883545,1.1359018087387085...-0.4278838038444519,0.0], post_transform=b'NONE', target_ids=400:[0,0,0...0,0,0], target_nodeids=400:[5,8,10...76,77,78], target_treeids=400:[0,0,0...9,9,9], target_weights=400:[83.8045883178711,-158.5025634765625...461.4992980957031,399.7672119140625]) -> variable

output: name='variable' type=dtype('float32') shape=['', 1]
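In the sparse node, nodes_missing_value_tracks_true is recomputed from the thresholds. As the comment in transform_model suggests, an entry absent from the sparse input stands for 0, and for a LEQ node the test 0 <= threshold holds exactly when the threshold is non-negative. The sketch below checks this on a few hypothetical thresholds in plain Python. It assumes every branch node uses the LEQ rule, which is what the model printed above shows.

```python
# Hypothetical thresholds; the real ones come from the `nodes_values` attribute.
thresholds = [1.11, 1.13, -0.42, 0.0, -2.5]

# Same rule as in transform_model: 1 when a missing (zero) value goes to the true branch.
missing_true = [int(t >= 0) for t in thresholds]


def takes_true_branch(x, threshold):
    """LEQ rule: follow the true branch when x <= threshold."""
    return x <= threshold


# An absent entry behaves like x = 0, so both computations must agree.
for t, flag in zip(thresholds, missing_true):
    assert takes_true_branch(0.0, t) == bool(flag)

print(missing_true)  # [1, 1, 0, 1, 0]
```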

Comparing onnxruntime and the custom kernel

print(f"Loading {filename!r}")
sess_ort = InferenceSession(filename, providers=["CPUExecutionProvider"])

r = get_ort_ext_libs()
print(f"Creating SessionOptions with {r!r}")
opts = SessionOptions()
if r is not None:
    opts.register_custom_ops_library(r[0])

print(f"Loading modified {filename!r}")
sess_cus = InferenceSession(
    onx_modified.SerializeToString(), opts, providers=["CPUExecutionProvider"]
)

print(f"Loading modified sparse {filename!r}")
sess_cus_sparse = InferenceSession(
    onx_modified_sparse.SerializeToString(), opts, providers=["CPUExecutionProvider"]
)

print(f"Running once with shape {Xb.shape}.")
base = sess_ort.run(None, {"X": Xb})[0]

print(f"Running modified with shape {Xb.shape}.")
got = sess_cus.run(None, {"X": Xb})[0]
print("done.")

Xb_sp = dense_to_sparse_struct(Xb)
print(f"Running modified sparse with shape {Xb_sp.shape}.")
got_sparse = sess_cus_sparse.run(None, {"X": Xb_sp})[0]
print("done.")

Loading 'plot_op_tree_ensemble_sparse-f500-10-d10-s0.99.onnx'

Creating SessionOptions with ['~/github/onnx-extended/onnx_extended/ortops/optim/cpu/libortops_optim_cpu.so']

Loading modified 'plot_op_tree_ensemble_sparse-f500-10-d10-s0.99.onnx'

Loading modified sparse 'plot_op_tree_ensemble_sparse-f500-10-d10-s0.99.onnx'

Running once with shape (1000, 500).

Running modified with shape (1000, 500).

done.

Running modified sparse with shape (9962,).

done.

Discrepancies?

Discrepancies: 0.0001220703125

Discrepancies sparse: 0.0001220703125
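The code producing these two numbers is not shown above. A common way to obtain such a figure is the largest absolute difference between the baseline predictions and the custom kernel's predictions; the sketch below assumes that definition and uses made-up predictions. It also shows that the reported value is exactly 2**-13, the spacing between adjacent float32 values in [1024, 2048). Reading the discrepancy as a single float32 rounding step is an interpretation, not something the output states.

```python
import struct


def to_float32(x):
    """Round a Python float to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def max_abs_diff(expected, got):
    """Largest absolute element-wise difference between two prediction lists."""
    return max(abs(e - g) for e, g in zip(expected, got))


step = 2.0 ** -13

# Adding one step lands on the next float32; a quarter step rounds back.
assert to_float32(1500.25 + step) == 1500.25 + step
assert to_float32(1500.25 + step / 4) == 1500.25

# Hypothetical predictions: the second list differs by one float32 step on one value.
base = [to_float32(1500.25), to_float32(-320.5), to_float32(87.125)]
got = [base[0] + step, base[1], base[2]]

print(max_abs_diff(base, got))  # 0.0001220703125
```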

Simple verification

Baseline with onnxruntime.

t1 = timeit.timeit(lambda: sess_ort.run(None, {"X": Xb}), number=50)

print(f"baseline: {t1}")

baseline: 0.005446884999400936

The custom implementation.

t2 = timeit.timeit(lambda: sess_cus.run(None, {"X": Xb}), number=50)

print(f"new time: {t2}")

new time: 0.2242279670026619

The custom sparse implementation.

t3 = timeit.timeit(lambda: sess_cus_sparse.run(None, {"X": Xb_sp}), number=50)

print(f"new time sparse: {t3}")

new time sparse: 0.02018368199787801
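timeit.timeit returns the total time for `number` executions, so the three figures above are totals over 50 calls. A small sketch, using only the values printed above, converts them to per-call times and to ratios against the baseline:

```python
# Totals printed above, each for number=50 calls.
number = 50
totals = {
    "baseline": 0.005446884999400936,
    "custom dense": 0.2242279670026619,
    "custom sparse": 0.02018368199787801,
}

for name, total in totals.items():
    per_call_ms = 1000 * total / number
    ratio = total / totals["baseline"]
    print(f"{name}: {per_call_ms:.3f} ms per call, {ratio:.1f}x the baseline time")
```

These ratios come from a single short measurement and are likely to vary from run to run.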

Time for comparison

The custom kernel supports the same attributes as TreeEnsembleRegressor plus new ones to tune the parallelization. They can be seen in tree_ensemble.cc. Let’s try out many possibilities. The default values are the first ones.

if unit_test_going():
    optim_params = dict(
        parallel_tree=[40],  # default is 80
        parallel_tree_N=[128],  # default is 128
        parallel_N=[50, 25],  # default is 50
        batch_size_tree=[1],  # default is 1
        batch_size_rows=[1],  # default is 1
        use_node3=[0],  # default is 0
    )
elif script_args.scenario in (None, "SHORT"):
    optim_params = dict(
        parallel_tree=[80, 40],  # default is 80
        parallel_tree_N=[128, 64],  # default is 128
        parallel_N=[50, 25],  # default is 50
        batch_size_tree=[1],  # default is 1
        batch_size_rows=[1],  # default is 1
        use_node3=[0],  # default is 0
    )
elif script_args.scenario == "LONG":
    optim_params = dict(
        parallel_tree=[80, 160, 40],
        parallel_tree_N=[256, 128, 64],
        parallel_N=[100, 50, 25],
        batch_size_tree=[1, 2, 4, 8],
        batch_size_rows=[1, 2, 4, 8],
        use_node3=[0, 1],
    )
elif script_args.scenario == "CUSTOM":
    optim_params = dict(
        parallel_tree=[int(i) for i in script_args.parallel_tree.split(",")],
        parallel_tree_N=[int(i) for i in script_args.parallel_tree_N.split(",")],
        parallel_N=[int(i) for i in script_args.parallel_N.split(",")],
        batch_size_tree=[int(i) for i in script_args.batch_size_tree.split(",")],
        batch_size_rows=[int(i) for i in script_args.batch_size_rows.split(",")],
        use_node3=[int(i) for i in script_args.use_node3.split(",")],
    )
else:
    raise ValueError(
        f"Unknown scenario {script_args.scenario!r}, use --help to get them."
    )

cmds = []
for att, value in optim_params.items():
    cmds.append(f"--{att}={','.join(map(str, value))}")
print("Full list of optimization parameters:")
print(" ".join(cmds))

Full list of optimization parameters:

--parallel_tree=80,40 --parallel_tree_N=128,64 --parallel_N=50,25 --batch_size_tree=1 --batch_size_rows=1 --use_node3=0
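Assuming optimize_model evaluates every combination of these values once per try (an assumption about its internals, though consistent with the 16 iterations reported in the progress output of this run), the size of the search can be sketched with itertools.product:

```python
from itertools import product

# The SHORT scenario's grid, as listed above.
optim_params = dict(
    parallel_tree=[80, 40],
    parallel_tree_N=[128, 64],
    parallel_N=[50, 25],
    batch_size_tree=[1],
    batch_size_rows=[1],
    use_node3=[0],
)
n_tries = 2  # assumption: matches TRY=0 and TRY=1 in the progress output

# One dictionary per combination, the last varying parameter changing fastest.
combinations = [
    dict(zip(optim_params, values)) for values in product(*optim_params.values())
]
print(len(combinations))            # 8
print(len(combinations) * n_tries)  # 16
print(combinations[0])
```

The LONG scenario multiplies the list lengths in the same way, which is why it takes much longer to run.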

Then the optimization for dense inputs.

def create_session(onx):
    opts = SessionOptions()
    r = get_ort_ext_libs()
    if r is None:
        raise RuntimeError("No custom implementation available.")
    opts.register_custom_ops_library(r[0])
    return InferenceSession(
        onx.SerializeToString(), opts, providers=["CPUExecutionProvider"]
    )


res = optimize_model(
    onx,
    feeds={"X": Xb},
    transform=transform_model,
    session=create_session,
    baseline=lambda onx: InferenceSession(
        onx.SerializeToString(), providers=["CPUExecutionProvider"]
    ),
    params=optim_params,
    verbose=True,
    number=script_args.number,
    repeat=script_args.repeat,
    warmup=script_args.warmup,
    sleep=script_args.sleep,
    n_tries=script_args.tries,
)

i=16/16 TRY=1 //tree=40 //tree_N=64 //N=25 bs_tree=1 batch_size_rows=1 n3=0 ~=1.12x: 100%|██████████| 16/16 [00:02<00:00, 6.45it/s]

Then the optimization for sparse inputs.

res_sparse = optimize_model(
    onx,
    feeds={"X": Xb_sp},
    transform=lambda *args, **kwargs: transform_model(*args, use_sparse=True, **kwargs),
    session=create_session,
    params=optim_params,
    verbose=True,
    number=script_args.number,
    repeat=script_args.repeat,
    warmup=script_args.warmup,
    sleep=script_args.sleep,
    n_tries=script_args.tries,
)

i=16/16 TRY=1 //tree=40 //tree_N=64 //N=25 bs_tree=1 batch_size_rows=1 n3=0: 100%|██████████| 16/16 [00:02<00:00, 6.04it/s]

And the results.

df_dense = DataFrame(res)

df_dense["input"] = "dense"

df_sparse = DataFrame(res_sparse)

df_sparse["input"] = "sparse"

df = concat([df_dense, df_sparse], axis=0)

df.to_csv("plot_op_tree_ensemble_sparse.csv", index=False)

df.to_excel("plot_op_tree_ensemble_sparse.xlsx", index=False)

print(df.columns)

print(df.head(5))

Index(['average', 'deviation', 'min_exec', 'max_exec', 'repeat', 'number',

'ttime', 'context_size', 'warmup_time', 'n_exp', 'n_exp_name',

'short_name', 'TRY', 'name', 'parallel_tree', 'parallel_tree_N',

'parallel_N', 'batch_size_tree', 'batch_size_rows', 'use_node3',

'input'],

dtype='object')

average deviation min_exec ... batch_size_rows use_node3 input

0 0.000037 0.000003 0.000034 ... NaN NaN dense

1 0.000058 0.000018 0.000043 ... 1.0 0.0 dense

2 0.000103 0.000112 0.000037 ... 1.0 0.0 dense

3 0.000036 0.000004 0.000032 ... 1.0 0.0 dense

4 0.000036 0.000002 0.000034 ... 1.0 0.0 dense

[5 rows x 21 columns]

Sorting

small_df = df.drop(
    [
        "min_exec",
        "max_exec",
        "repeat",
        "number",
        "context_size",
        "n_exp_name",
    ],
    axis=1,
).sort_values("average")
print(small_df.head(n=10))

average deviation ttime ... batch_size_rows use_node3 input

15 0.000033 0.000002 0.000333 ... 1.0 0.0 dense

3 0.000036 0.000004 0.000358 ... 1.0 0.0 dense

4 0.000036 0.000002 0.000360 ... 1.0 0.0 dense

6 0.000036 0.000003 0.000361 ... 1.0 0.0 dense

0 0.000037 0.000003 0.000374 ... NaN NaN dense

11 0.000038 0.000003 0.000384 ... 1.0 0.0 dense

12 0.000039 0.000003 0.000387 ... 1.0 0.0 dense

7 0.000040 0.000003 0.000396 ... 1.0 0.0 dense

5 0.000044 0.000003 0.000443 ... 1.0 0.0 dense

8 0.000052 0.000012 0.000518 ... 1.0 0.0 dense

[10 rows x 15 columns]
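The row with NaN in the tuning columns (index 0, dense) appears to be the onnxruntime baseline, since no custom parameter applies to it; this reading is an assumption. Under it, the speedup of the fastest dense configuration can be recomputed from the rounded averages printed above:

```python
# Rounded averages (seconds) copied from the sorted table above.
baseline_average = 0.000037    # index 0, tuning columns are NaN (assumed baseline)
best_dense_average = 0.000033  # index 15, fastest row

speedup = baseline_average / best_dense_average
print(f"~={speedup:.2f}x")  # ~=1.12x
```

The result agrees with the ~=1.12x shown on the last line of the dense progress output.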

Worst

print(small_df.tail(n=10))

average deviation ttime ... batch_size_rows use_node3 input

0 0.000230 0.000173 0.002303 ... 1.0 0.0 sparse

7 0.000235 0.000138 0.002350 ... 1.0 0.0 sparse

11 0.000235 0.000178 0.002351 ... 1.0 0.0 sparse

14 0.000238 0.000164 0.002376 ... 1.0 0.0 sparse

16 0.000318 0.000560 0.003184 ... 1.0 0.0 dense

10 0.000360 0.000980 0.003600 ... 1.0 0.0 dense

4 0.000362 0.000050 0.003616 ... 1.0 0.0 sparse

2 0.000439 0.000476 0.004387 ... 1.0 0.0 sparse

3 0.000483 0.000421 0.004832 ... 1.0 0.0 sparse

6 0.000773 0.001455 0.007726 ... 1.0 0.0 sparse

[10 rows x 15 columns]

Plot

skeys = ",".join(optim_params.keys())

title = f"TreeEnsemble tuning, n_tries={script_args.tries}\n{skeys}\nlower is better"

ax = hhistograms(df, title=title, keys=("input", "name"))

fig = ax.get_figure()

fig.savefig("plot_op_tree_ensemble_sparse.png")

Total running time of the script: (0 minutes 6.312 seconds)