Note

Go to the end to download the full example code.

Evaluating random access for sparse¶

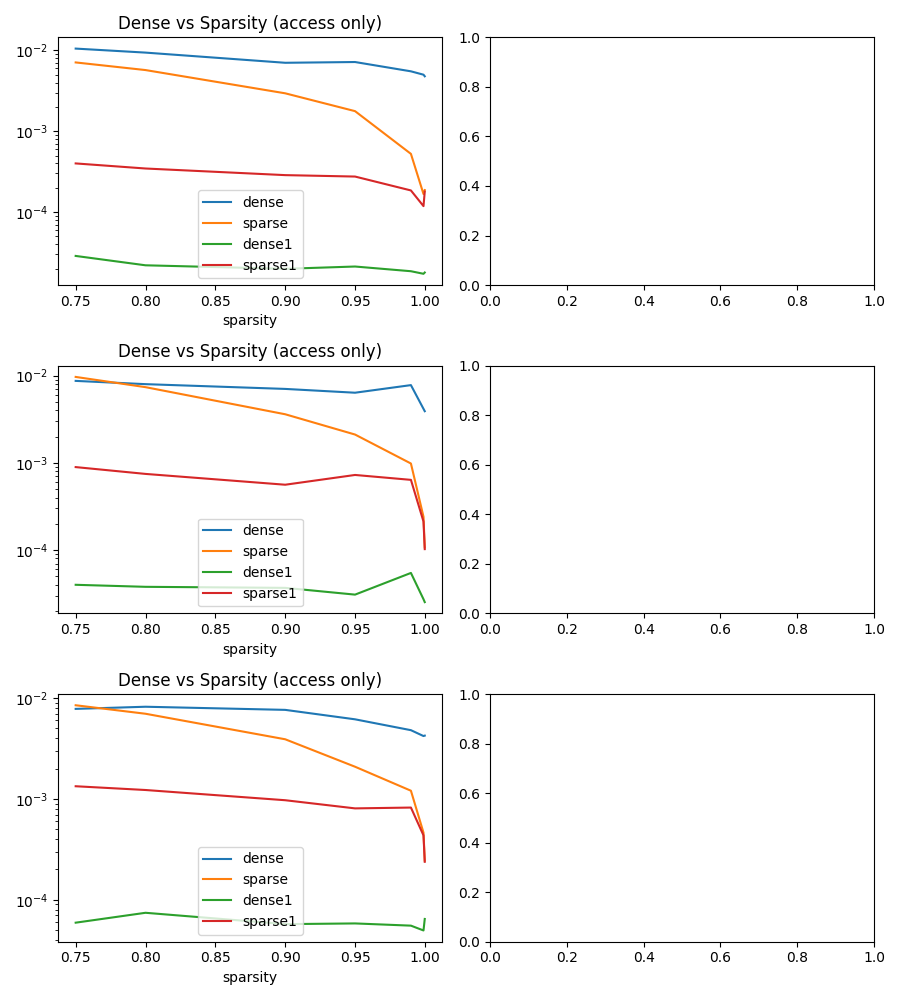

Whenever computing the prediction of a tree with a sparse tensor, is it faster to density first and then to compute the prediction or to keep the tensor in its sparse representation and do look up? The parameter nrnd can be seen as the depth of a tree.

import itertools

import numpy as np

from tqdm import tqdm

import matplotlib.pyplot as plt

from pandas import DataFrame

from onnx_extended.ext_test_case import unit_test_going

from onnx_extended.args import get_parsed_args

from onnx_extended.validation.cpu._validation import evaluate_sparse

expose = "repeat,warmup,nrows,ncols,sparsity,nrnd,ntimes"

script_args = get_parsed_args(

"plot_bench_sparse_access",

description=__doc__,

nrows=(10 if unit_test_going() else 100, "number of rows"),

ncols=(10 if unit_test_going() else 100000, "number of columns"),

ntimes=(

"1" if unit_test_going() else "2,4,8",

"number of times to do nrnd random accesses per row",

),

sparsity=(

"0.1,0.2" if unit_test_going() else "0.75,0.8,0.9,0.95,0.99,0.999,0.9999",

"sparsities to try",

),

repeat=2 if unit_test_going() else 5,

warmup=1 if unit_test_going() else 3,

nrnd=(10, "number of random features to access"),

expose=expose,

)

for att in sorted(expose.split(",")):

print(f"{att}={getattr(script_args, att)}")

ncols=100000

nrnd=10

nrows=100

ntimes=2,4,8

repeat=5

sparsity=0.75,0.8,0.9,0.95,0.99,0.999,0.9999

warmup=3

Sparse tensor¶

def make_sparse_random_tensor(n_rows: int, n_cols: int, sparsity: float):

t = np.random.rand(n_rows, n_cols).astype(np.float32)

m = np.random.rand(n_rows, n_cols).astype(np.float32)

t[m <= sparsity] = 0

return t

sparsity = list(map(float, script_args.sparsity.split(",")))

ntimes = list(map(int, script_args.ntimes.split(",")))

t = make_sparse_random_tensor(script_args.nrows, script_args.ncols, sparsity[0])

ev = evaluate_sparse(t, script_args.nrnd, ntimes[0], script_args.repeat, 3)

print(f"dense: initialization:{ev[0][0]:1.3g}")

print(f" access:{ev[0][1]:1.3g}")

print(f"sparse: initialization:{ev[1][0]:1.3g}")

print(f" access:{ev[1][1]:1.3g}")

print(f"Ratio sparse/dense: {ev[1][1] / ev[0][1]}")

dense: initialization:0.00855

access:2.11e-05

sparse: initialization:0.00913

access:0.000433

Ratio sparse/dense: 20.504117992313077

If > 1, sparse is slower.

Try sparsity¶

tries = list(itertools.product(ntimes, sparsity))

data = []

for nt, sp in tqdm(tries):

t = make_sparse_random_tensor(script_args.nrows, script_args.ncols, sp)

ev = evaluate_sparse(t, script_args.nrnd, nt, script_args.repeat, 3)

obs = dict(

dense0=ev[0][0],

dense1=ev[0][1],

dense=ev[0][0] + ev[0][1],

sparse0=ev[1][0],

sparse1=ev[1][1],

sparse=ev[1][0] + ev[1][1],

sparsity=sp,

rows=t.shape[0],

cols=t.shape[1],

repeat=script_args.repeat,

random=script_args.nrnd,

ntimes=nt,

)

data.append(obs)

df = DataFrame(data)

print(df)

0%| | 0/21 [00:00<?, ?it/s]

5%|▍ | 1/21 [00:00<00:07, 2.53it/s]

10%|▉ | 2/21 [00:00<00:07, 2.38it/s]

14%|█▍ | 3/21 [00:01<00:06, 2.79it/s]

19%|█▉ | 4/21 [00:01<00:06, 2.83it/s]

24%|██▍ | 5/21 [00:01<00:05, 3.08it/s]

29%|██▊ | 6/21 [00:01<00:04, 3.29it/s]

33%|███▎ | 7/21 [00:02<00:04, 3.42it/s]

38%|███▊ | 8/21 [00:02<00:04, 3.00it/s]

43%|████▎ | 9/21 [00:03<00:04, 2.90it/s]

48%|████▊ | 10/21 [00:03<00:03, 2.87it/s]

52%|█████▏ | 11/21 [00:03<00:03, 3.08it/s]

57%|█████▋ | 12/21 [00:03<00:02, 3.28it/s]

62%|██████▏ | 13/21 [00:04<00:02, 3.58it/s]

67%|██████▋ | 14/21 [00:04<00:01, 3.79it/s]

71%|███████▏ | 15/21 [00:04<00:01, 3.40it/s]

76%|███████▌ | 16/21 [00:05<00:01, 3.16it/s]

81%|████████ | 17/21 [00:05<00:01, 3.26it/s]

86%|████████▌ | 18/21 [00:05<00:00, 3.42it/s]

90%|█████████ | 19/21 [00:05<00:00, 3.65it/s]

95%|█████████▌| 20/21 [00:06<00:00, 3.86it/s]

100%|██████████| 21/21 [00:06<00:00, 4.09it/s]

100%|██████████| 21/21 [00:06<00:00, 3.31it/s]

dense0 dense1 dense sparse0 ... cols repeat random ntimes

0 0.010487 0.000029 0.010515 0.006689 ... 100000 5 10 2

1 0.009358 0.000022 0.009380 0.005358 ... 100000 5 10 2

2 0.007000 0.000020 0.007019 0.002656 ... 100000 5 10 2

3 0.007152 0.000021 0.007173 0.001495 ... 100000 5 10 2

4 0.005485 0.000019 0.005504 0.000339 ... 100000 5 10 2

5 0.004981 0.000017 0.004998 0.000049 ... 100000 5 10 2

6 0.004759 0.000018 0.004777 0.000008 ... 100000 5 10 2

7 0.008675 0.000040 0.008714 0.008783 ... 100000 5 10 4

8 0.007958 0.000038 0.007996 0.006639 ... 100000 5 10 4

9 0.007013 0.000037 0.007050 0.003048 ... 100000 5 10 4

10 0.006345 0.000031 0.006376 0.001386 ... 100000 5 10 4

11 0.007740 0.000055 0.007794 0.000345 ... 100000 5 10 4

12 0.004137 0.000027 0.004164 0.000028 ... 100000 5 10 4

13 0.003888 0.000025 0.003913 0.000005 ... 100000 5 10 4

14 0.007753 0.000059 0.007813 0.007152 ... 100000 5 10 8

15 0.008131 0.000074 0.008205 0.005766 ... 100000 5 10 8

16 0.007581 0.000057 0.007639 0.002933 ... 100000 5 10 8

17 0.006099 0.000058 0.006157 0.001280 ... 100000 5 10 8

18 0.004748 0.000055 0.004804 0.000385 ... 100000 5 10 8

19 0.004152 0.000050 0.004202 0.000028 ... 100000 5 10 8

20 0.004179 0.000064 0.004244 0.000006 ... 100000 5 10 8

[21 rows x 12 columns]

Plots

nts = list(sorted(set(df.ntimes)))

fig, ax = plt.subplots(len(nts), 2, figsize=(3 * len(nts), 10))

for i, nt in enumerate(nts):

sub = df[df.ntimes == nt]

sub[["sparsity", "dense", "sparse"]].set_index("sparsity").plot(

title=f"Dense vs Sparsity, ntimes={nt}",

logy=True,

ax=ax[0] if len(ax.shape) == 1 else ax[i, 0],

)

sub[["sparsity", "dense1", "sparse1"]].set_index("sparsity").plot(

title="Dense vs Sparsity (access only)",

logy=True,

ax=ax[1] if len(ax.shape) == 1 else ax[i, 0],

)

fig.tight_layout()

fig.savefig("plot_bench_sparse_access.png")

Total running time of the script: (0 minutes 8.575 seconds)